ADO.NET has a strong and powerful disconnected model.

It allows programmers to build a web of in-memory objects and relate contents coming from different tables and even from different data sources. When inter-related tables are involved with the process of query and update, code strategies are important to preserve scalability and maintain high performance. Sometimes compound queries can be more effectively accomplished splitting queries; sometimes not. Submitting changes in batch mode, exploiting the DataSet and its disconnected model, often appears as the perfect solution. However, what if you need to move dozens of MB? The serialization mechanism of the DataSet would increase that by a factor. Tradeoffs is the magic word.

All data-driven applications look alike. They are built around a few basic tasks?fetch data across different tables, join columns, and filter data based on some user-specific criteria. Data-driven applications must also format data in such a way that end-users can easily and pleasantly consume it. More often than not, data is made of cross-related tables in which parent/child relationships are in place to define the data model and to extract a specific subset of information like detail views and subtotals.

ADO.NET provides you with the tools to configure the runtime environment so that changes can automatically be cascaded from parent to child rows.

So can we conclude that all applications that deal with data are the same and can be built using a common pattern? Of course not. Other aspects, including scalability and performance, affect and influence the design.

All distributed applications must be scalable to some extent. If a Web application is made open to anonymous users?for example, a shopping site?providing a good, if not optimal, level of scalability is a sort of survival test. Scalability is related to performance, but it can't be identified exclusively with that. Likewise, performance affects scalability but in no case are they synonyms.

In general, caching data does not seem to be an optimal choice for all Web applications because it consumes valuable resources on a per-user or per-application basis. As a result, having less memory available to the Web server might lead to performance degradation. On the other hand, if you're developing an application that needs to be both fast and scalable, you should carefully consider caching data at some level to reduce the workload on middle-tier components and the bottleneck of database connections. The more tiered your system is, the more options you have to scale it out as long as you can move disconnected caches of data across the tiers.

In the first part of this article, I examined a few recommended approaches for connections and transactions management in ADO.NET applications. In this second installment, I'll tackle SQL-level programming and review a few scenarios in which the quality and the structure of SQL statements can make a world of difference between applications.

Untangle the Database Ball of Wool

Even the simplest Web application can have a rather complex and interrelated structure of databases. While a database with dozens of related tables, views, constraints, and triggers is perhaps not what you deal with every day, I've never seen a real-world application with less than a handful of related tables.

The problem with a grid of databases is with both queries and updates. End users should be able to access and filter related rows, but also to update and delete rows in a way that does not violate the consistency and integrity of the database.

Some databases resort to server-side constraints to block undesired changes. The fact that most database administrators use this strategy to stay on the safe side is not a good reason to avoid code that could potentially corrupt the database. Ideally, client applications should submit changes that respect the database structure and its internal update rules (for example, relationships and constraints).

ADO.NET has a strong and powerful disconnected model that allows applications to work with sort of in-memory databases. The DataSet object governs the storage of multiple tables and it enables filtering, sorting, constraints, and cascading changes. This model, though, seems to fit more easily for updates than for queries. For compound queries, the hurdle is the way in which an SQL result set is adapted to fit into a DataSet and whether cached data is effective to the application. Let's review a couple of solutions for compound queries.

Conducting Multiple Queries

In light of the ADO.NET capabilities, you can implement multiple queries that span across multiple tables in at least two ways. The old-fashioned way, so to speak, is typical of ADO and any former data-access technology. It is based on the old faithful SQL JOIN command and returns a single, monolithic data structure that is quite easy to filter, but hard to update. In addition, the final result set is populated with redundant data.

A data adapter that is about to update a row during a batch update process fires the RowUpdating and RowUpdated events, respectively, before and after the update.

The alternative approach, touted by the ADO.NET disconnected model, exploits the capabilities of the DataSet object?that is, a multi-table cache of data. The idea is that you split the original query into two or more distinct queries and gather the results in separate tables held in memory within the same DataSet object. The queries can be run individually or grouped in a stored procedure or in a batch. Using split queries results in a much more compact data set that can be easily managed via the programming interface of the DataSet and DataRelation objects.

Once the result set is stored into a DataSet, it supports cascading changes and batch updates. Furthermore, no synchronization code is required to retrieve the children of a parent row because automatic relationships can be declared. On the downside, just relationships make applying filters to the records not particularly easy.

Suppose you need to obtain all orders issued to a given country. The query can be written like this:

SELECT c.customerid, c.companyname, c.city,

o.orderid, o.orderdate, od.productid

FROM customers c

INNER JOIN orders o

ON c.customerid=o.customerid

INNER JOIN [order details] od

ON o.orderid=od.orderid

WHERE c.country = 'USA'

ORDER BY o.customerid

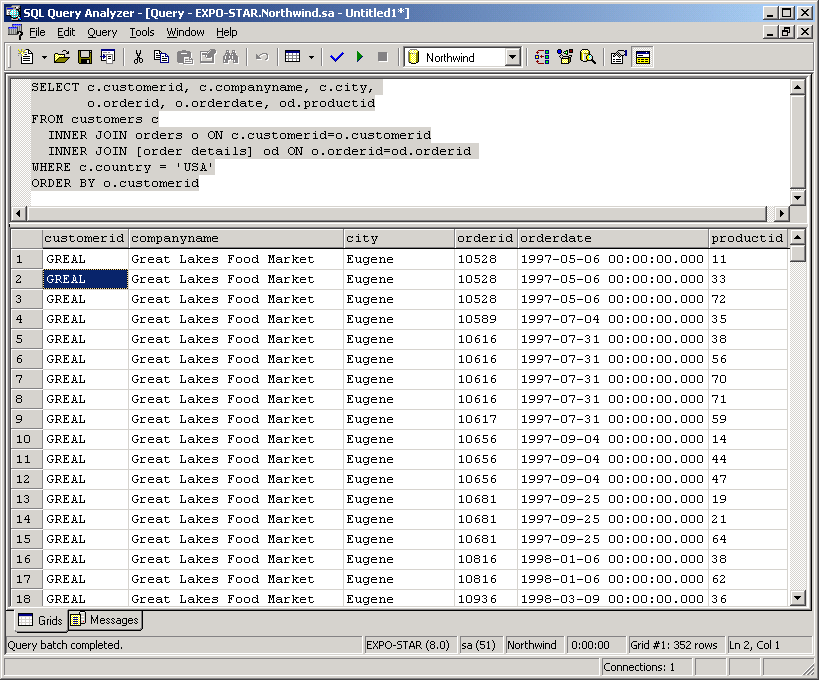

The query spans three Northwind tables?Orders, Order Details, and Customers. It retrieves all orders issued to a customer resident in the US. Viewed through the Microsoft SQL Server Query Analyzer, the result set looks like Figure 1.

You can immediately see how much redundant data the result set contains. The query counts about 300 records and each of them has a high likelihood of containing duplicate customer information.

The main characteristic of a joined query is that it returns data as a monolithic block. This is good news and bad news at the same time. The good news is that data is held in the same block of memory, works great for presentation purposes, and can be further filtered by simply adding some criteria to the object that represents it?either an ADO Recordset or an ADO.NET DataTable.

The bad news is, the result set does not make good use of memory and might raise scalability issues. Also, keeping changes synchronized with the database is not trivial. Finding a match between an element in the result set and a table record is challenging and this makes it hard to keep the data synchronized and even to apply changes. No direct correspondence exists between the result set and physical tables in the database.

With ADO.NET, an alternative approach becomes viable and, for certain types of applications, even particularly attractive. The idea is to separate an otherwise nested and complex query in multiple simpler queries according to the historical motto "divide-et-impera." There's a double advantage to doing so. First, the amount of data returned to the client is significantly smaller. Second, the functionality of the ADO.NET DataSet object makes managing multiple tables straightforward. The query above can be rewritten as follows.

SELECT customerid, companyname, city

FROM customers

WHERE country='USA' ORDER BY customerid

SELECT customerid, orderid, orderdate

FROM orders

WHERE customerid IN

(SELECT customerid FROM customers

WHERE country='USA')

ORDER BY customerid

SELECT orderid, productid

FROM [order details]

WHERE orderid IN

(SELECT orderid FROM orders

WHERE customerid IN

(SELECT customerid FROM customers

WHERE country='USA'))

ORDER BY orderid

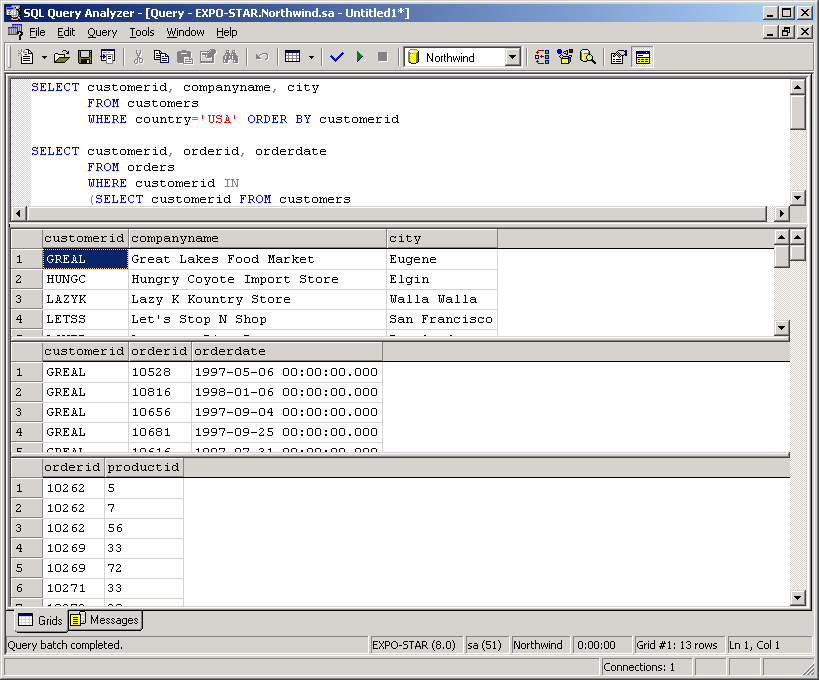

The results, shown in Figure 2, consist of three result sets.

The first query selects all customers who reside in the United States. The second query retrieves all orders (only ID and date) issued by a customer living in the United States. Finally, the third query fetches product ID and other order details from the related table.

In general, using split queries makes any DBMS system return less data than if JOIN statements are employed. However, the claim that you can really do more with less has not been proved true for all possible situations and scenarios yet. Working with multiple queries makes it easier to locate the database record that corresponds to the ADO.NET row. The model works great also if you have to retrieve rows from multiple and heterogeneous data sources. For the multiple query model to work, though, you have to combine ADO.NET tables together using ADO.NET relations. The ADO.NET query engine doesn't automatically create relationships, even if table relationships are defined in the physical database.

It is unquestionable that splitting a complex query into steps tends to move much less data. However, this doesn't necessarily mean that there's no better way. The more accessory and foreign fields you retrieve, the more redundant data you save splitting queries.

Split queries return more records than a compact query but with much less redundancy. More exactly, some redundancy still exists but it is limited to key fields. You can easily verify this by looking at the two figures above. All the queries you obtained can be grouped in a SQL batch or a stored procedure and handed off to a data adapter for actual population. You get back a DataSet with as many tables as there are queries in the statement. You can access and index tables individually and create in-memory relations between tables. Easy? Sure. But effective?

No matter what these quick tests can apparently show, for the DBMS, three queries are heavier than one. However, running three queries instead of just one can bring other advantages to the application. All summed up, these advantages can make split queries a preferable solution.

Putting It All Together

Let's compare the two approaches?old-fashioned joined queries and ADO.NET split queries?a bit more in detail. The bottom line is that neither approach is absolutely preferable in all cases. You should evaluate both approaches carefully, taking into account the concept that nothing is certain about scalability. Databases and distributed applications are so complex and based on so many variables (topology of the network, latency, size and characteristics of the database, number of tiers and their implementation) that the way in which you query is just one of the influencing parameters.

If you want to make some experiments yourself, I suggest that you write two small applications?one to query using a compact sequence of JOIN statements and one that uses split queries. Measure the performance of both applications separately and, of course, in the same context. A common error that I repeatedly observed in quick tests done to create sensation is that the same application is used to test the performance of compact and split queries. If you use just one application, then you first test, say, compact queries and then split queries. In many cases, if you then invert the order in which approaches are test (say, split queries first and then compact query) you get reverse results! Using a single application makes the full test unreliable because the first piece of code suffers from the ADO.NET library load and initialization. As a result, the second scenario tested seems run faster. If you want to make tests, use a real-world context and make tests in the production environment.

One certain fact I can unquestionably state is that distinct queries tend to move much less data (roughly half or even less). The final word, though, can be said only by looking at the actual queries. The more accessory and foreign fields you retrieve, the more redundant data you save splitting queries.

My tests indicate that using relatively simple "individual queries" you get data in a slightly faster way. I'd say about 10% faster. This data is in line with what some ADO.NET books and articles claim. However, for really complex queries (that is, with several nested JOIN, GROUP BY, and WHERE clauses) three queries always proved to be slower than one. Why? In this case, each individual query ends up being quite complex itself.

Finally, if you choose distinct queries, consider that the overall performance must include the client-side performance price of filling the DataSet up and setting up relations. In addition, you have to use a batch statement or a stored procedure for optimal performance. This leads to another subtle, but not less tough, problem?concurrency. A monolithic query gains the inherent transaction support that SQL Server (and, in general, any DBMS system) guarantees to individual commands. A complex query, especially if it includes subqueries or views, is made of different low-level operations that run in the context of the same implicit transaction. This guarantees that no concurrency issues would ever be raised, even for highly volatile databases. A transaction (such as a serializable transaction) must be explicitly set to guarantee consistency in case of concurrent databases and multiple queries.

Splitting Monolithic Queries

Suppose that you are not allowed to use multiple queries in your application. This has nothing to do with a bad opinion you may have of the solution. It is just that your DBA strongly disagrees with any suggested change in the set of stored procedures. You would like to manage data using comfortable ADO.NET objects, but your DBA will only let you employ old-style stored procedures to get them. How can you load the result sets into ADO.NET disconnected objects?

ADO.NET provides you with the tools to configure the runtime environment so that changes can automatically be cascaded from parent to child rows.

Let's consider the following more complex query. It retrieves, grouped by customer, all orders issued in a given year. The query won't retrieve all the orders, though, but only those with at least 30 items. In addition, the name of the employee who processed the order and the name of the company that issued it are inserted into the result set.

SELECT o.CustomerID, od.OrderID, o.OrderDate, o.ShippedDate,

SUM(od.Quantity * od.UnitPrice) AS price,

c.CompanyName, e.LastName

FROM Orders o INNER JOIN

Customers c ON c.CustomerID=o.CustomerID INNER JOIN

Employees e ON e.EmployeeID=o.EmployeeID INNER JOIN

[Order Details] od ON o.OrderID=od.OrderID AND o.OrderID=od.OrderID

WHERE (YEAR(o.OrderDate) = 1997)

GROUP BY o.CustomerID, c.CompanyName, od.OrderID,

o.OrderDate, o.ShippedDate, e.LastName

HAVING (SUM(od.Quantity) > 30)

ORDER BY o.CustomerID, od.orderid

Listing 1 demonstrates how to split the results of the query above. The main procedure?SplitData?gets the DataSet originated by the query and processes it. The input DataSet contains just one table.

The method in Listing 1 makes a copy of the DataSet and then creates two new tables?Employees and Customers. The SplitData method fills these two tables up using data from the monolithic table. Finally, the method obtains the third table?Order Details?and removes processed columns from the original table. As a result, you run a traditional stored procedure, pay the price of redundant data being moved across the wire, but gain in flexibility because you can handle disconnected data in distinct and possibly related tables. What's the big advantage of this approach? You can now make batch updates.

Conducting Cross-Table Updates

When it comes to updating related tables, the order in which your program executes each constituent statement is critical. For example, you can't delete an order without first deleting all of its details. Likewise, you aren't normally allowed to add an invoice detail row without first adding its parent row.

When related tables are involved, changes flow from the parent to the children in different ways, depending on the type of the change you're attempting?update, delete, or insert.

In general, there are two ways of conducting table updates?through a direct connection or batch update. In the case of a direct connection, you typically open a connection and execute the stored procedure or the SQL batch with all the logic and data you need to apply. In the case of a batch update, you typically yield to a middle-tier component, which gets disconnected data from you and takes care of submitting data to the database, possibly in an asynchronous way.

The disconnected approach in a Web scenario requires you to serialize the data that you want to submit from the Web server layer down to an internal tier, which holds a physical connection to the database. The availability of an easy-to-serialize object such as the DataSet and the powerful batch update mechanism of data adapters give you the tools to build highly scalable applications.

The batch update process passes all in-memory changes recorded in a DataSet table to the back-end system for permanent storage. In ADO.NET, you don't submit your blocks of data being sent to the database in a single shot. Your ADO.NET batch update will execute individual statements on the target system, one for each change that needs to be submitted. Batch update opens one connection but still performs multiple statements in a predefined order.

To run a batch update, you don't have to do much more than call the Update method of the data adapter class. This simple mechanism, though, won't work at all with inter-related, hierarchical data. When you deal with hierarchical data, you need client-side cascading changes and custom update logic to build effective applications.

ADO.NET supports two types of constraints?unique and foreign-key constraints. A unique constraint is a restriction on a data column in which all values must be unique. A foreign-key constraint set on a DataTable restricts the action performed when you try to delete or update a value in a column. For example, deleting a value from the parent table can affect rows in the child table in various ways. Often you want to delete child rows in the child table. However, in some cases, you'll have a cascading action set the child rows to null or default values. You can programmatically set the action that works best for using the programming interface of the ForeignKeyConstraint class.

What really matters, though, is that ADO.NET provides you with the tools to configure the runtime environment to automatically cascade changes from parent to child rows. Such a mechanism allows you to work on a DataSet object, enter changes, and (if needed), have them propagated through the tree. When you have gone through all the changes, ADO.NET guarantees that all the tables in the DataSet have been properly updated. If a change cannot be cascaded, or simply contains invalid values that would violate any of the relationships, an exception would be thrown.

After ADO.NET has updated your tables, you just take the resulting DataSet and apply the logic that best suits your updates. For example, depending on the relationships set between your tables, you may need to process child deleted rows before parent insertions and child updates after parent updates. You can easily extract the subset of rows to process using the Select method on the DataTable object. Whatever the right order is to enter changes to your application, you have the tools to get ready-to-process rows.

Retrieving Server-Generated Values

More often than not, when you make updates across tables, you need a previously generated value to successfully run the next statement within a stored procedure. Such a typical situation might involve triggers or identity columns. If you're using direct commands or stored procedures, there's not much that ADO.NET does for you. You must address the issue yourself. One common workaround entails reading critical values back through a subsequent query. You can return the values you need through output parameters or additional result sets. This solution works but you have to code it yourself. If you use the ADO.NET batch update instead, you can rely on a couple of built-in mechanisms that save you from extra coding. The first mechanism is based on the UpdatedRowSource property of the command object. The other mechanism leverages the RowUpdated event on the data adapter object.

The UpdatedRowSource property looks for output parameters, the first row of the next query, both, or neither. By default, it looks for both. The following SQL batch inserts a new row into a database where custID is an identity column. The second statement retrieves the last identity value generated in the scope and returns it as the updated value of the custID column.

INSERT INTO customers (custID, custName) VALUES (@CustID, @CustName)

SELECT SCOPE_IDENTITY() AS custID

If you assign the above statements to the InsertCommand property of a data adapter, the UpdatedRowSource property will catch the newly generated identity value and pass it to the DataRow object being updated. If you set the UpdatedRowSource property to Both (the default) or FirstReturnedRecord, each inserted row processed through the batch update will have the identity column updated. And, more importantly, you don't have to write any additional code. You only need to select a checkbox in the Visual Studio .NET wizard that configures a data adapter.

A data adapter that is about to update a row during a batch update process fires the RowUpdating and RowUpdated events, respectively, before and after the update. You handle the RowUpdated event as follows:

adapter.RowUpdated += new

SqlRowUpdatedEventHandler(OnRowUpdated);

The event handler gets a data structure of type SqlRowUpdatedEventArgs in which the Row property returns the row as updated by the underlying database. You'll need to modify this code database. You must design effective queries and update strategies to make a scalable application. To write effective SQL code, you should know the SQL engine you're using, and realize that they may not be equal. If you're building a .NET application, you also need a good understanding of the ADO.NET model and its capabilities. ADO.NET is powerful but it doesn't reduce database programming to mere point-and-click programming. ADO.NET offers a set of powerful tools but without practice and experience you may not do a lot with them. The command builder object or even the batch update process, for example, greatly simplifies the update process but you must know what they do and how they work.