Suppose you must maintain the code base of a software product that carries more than 15 years of legacy support. The capabilities of the programming language evolved over time, new design ideas came up, and insights into the domain emerged, but product owners and management decided to skip the necessary refactoring of the code base due to time pressure. This sounds familiar to many developers, and some may start losing their hair just thinking about it. The reason for the hair loss is obvious but remains underappreciated by management and product owners: Whenever a code base grows over a long period of time, its design slowly turns into the famous big-ball-of-mud, a nightmare for all of us. Even worse, when developers suddenly need to deliver a new application fast, they inevitably fail, because most of the code can't be reused somewhere else.

In this article, I'll show you one way out of the misery. It doesn't matter whether you start on a green field or you already live the nightmare: I cover both scenarios. With a green field, you can immediately start out in the right way. For those living the nightmare, I present a migration process to slowly turn the big-ball-of-mud into something clean and useful, which can serve as a framework for various software products (which I refer to as a software product line framework here).

The common ground for both scenarios builds on two key ingredients: the well-known architectural style “ports-and-adapters,” sometimes also referred to as “hexagonal architecture,” and design patterns and principles from domain-driven design (DDD). The former ingredient tells you how to structure an application in general and, importantly, it commands you to write business logic completely independent of the environment in which the software runs. The latter ingredient gives you a hint about where to split the business logic into (almost) independent assemblies. Combining these ingredients provides a very appealing dish using .NET 5: a software product line framework of business logic assemblies for multiple platforms.

In the last part, I'll present a migration process that allows you to slowly migrate there. For that purpose, I outline how to deal with and integrate the legacy code base when writing new business logic, and also how to migrate existing business logic into a multi-platform software product line framework.

The Common Ground

Before you prepare the dish, you need to know the ingredients: ports-and-adapters as an architectural style, and domain-driven design for creating valuable software. Let's get to know them.

Ingredient 1: Ports-and-Adapters

There are many different architectural styles from which architects and developers may choose, each with advantages and disadvantages. Which one to choose depends on several factors, like target platform, performance, etc. But also, the business domain that the software needs to support influences the choice of style. For example, for software that controls a manufacturing pipeline, a pipes-and-filters design appears more appropriate than a data-centric approach. However, when the goal is to create software that needs to potentially run in multiple environments, the ports-and-adapters style fits perfectly. But why? What's so special about it?

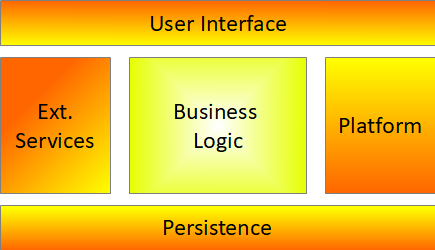

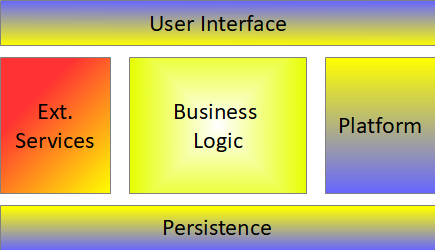

Figure 1 depicts the ports-and-adapters architectural style. The business logic lies at the core of the application and the environment surrounds it. This sounds great for software that needs to run in many different environments, for example, on a mobile platform or on a desktop.

A re-implementation of the environment enables you to reuse the same business logic to build a new application, maybe even on a mobile device, as shown in Figure 2.

How does it work in detail? What do you really have to do to use the full strength of this ingredient?

Before exploring that, I need to explain the main idea of ports and adapters: The business logic component drives the application because it delivers value to the business, not the other way around. Hence, the business logic needs to be independent of the environment in which it runs, for example, the application runtime or the platform. Only this turns the business logic into a reusable component. Still, the business logic needs to interact with the environment at some points, for example, to persist data or to communicate with an external service. To accomplish that, the business logic defines ports, which abstract such interactions with the environment. Specifically, every time the business logic needs to interact with the environment, the business logic component declares a C# interface for that interaction. An implementation of such an interface is called an adapter, and implementing all of them makes up the application (except for the user interface's interactions with the business logic). This principle, also known as the dependency-inversion principle (the “D” of the SOLID principles), enables you to write business logic free from concrete implementations of any environment.

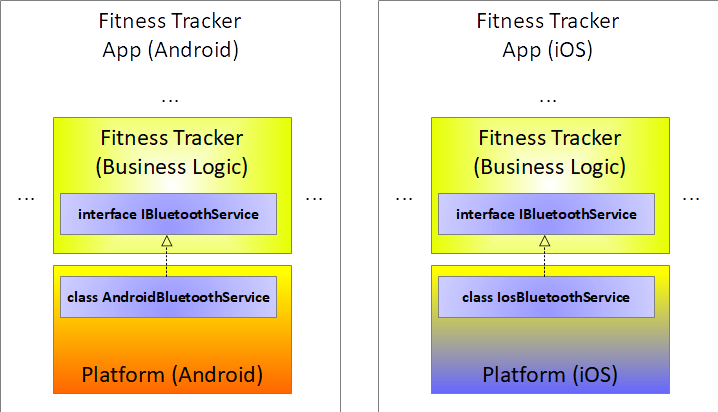

Let's take a close look at how it works in detail. Suppose you must write an app for Android and iOS using Xamarin that needs to communicate with a fitness tracker peripheral device via Bluetooth. The business logic for the “FitnessTracker” app should get re-used on both platforms to minimize implementation efforts. Hence, the development team defines in the business logic assembly an interface IBluetoothService to get the latest fitness data from the fitness tracker device:

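```csharp
using System.Collections.Generic;

// Port declared in the business logic assembly. It is public so that the
// platform-specific assemblies can implement it.
public interface IBluetoothService
{
    // Returns the latest fitness data read from the fitness tracker device.
    IList<RawFitnessData> GetFitnessData();
}
```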

In the respective platform component, they implement that interface in a platform-dependent way to cope with the platform needs regarding Bluetooth connections. See Figure 3 for details.

The business logic assembly of the Fitness Tracker app defines an interface for reading the fitness data from the external Bluetooth device, referred to as “peripheral” from now on. The respective platform-dependent assemblies implement this interface to deal with the specialties of each platform regarding Bluetooth.
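To make the division of labor concrete, here is a minimal sketch of a port, a piece of business logic that consumes it, and an adapter. Only IBluetoothService, GetFitnessData, and RawFitnessData come from the example above. The fields of RawFitnessData, the HeartRateSummary class, and the InMemoryBluetoothService adapter are assumptions made for illustration; a real adapter would wrap the Bluetooth stack of Android or iOS instead of returning canned samples.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical shape of the raw data; the article does not specify its fields.
public sealed class RawFitnessData
{
    public RawFitnessData(DateTime timestamp, int heartRateBpm)
    {
        Timestamp = timestamp;
        HeartRateBpm = heartRateBpm;
    }

    public DateTime Timestamp { get; }
    public int HeartRateBpm { get; }
}

// Port: declared in the business logic assembly.
public interface IBluetoothService
{
    IList<RawFitnessData> GetFitnessData();
}

// Business logic (hypothetical): depends only on the port, never on a platform API.
public sealed class HeartRateSummary
{
    private readonly IBluetoothService _bluetooth;

    public HeartRateSummary(IBluetoothService bluetooth)
    {
        _bluetooth = bluetooth ?? throw new ArgumentNullException(nameof(bluetooth));
    }

    // Returns the average heart rate of the latest samples, or 0 if there are none.
    public double AverageHeartRate()
    {
        IList<RawFitnessData> samples = _bluetooth.GetFitnessData();
        return samples.Count == 0 ? 0.0 : samples.Average(s => s.HeartRateBpm);
    }
}

// Adapter (hypothetical stand-in): lives outside the business logic assembly.
// A platform adapter would talk to the device; this one returns canned samples.
public sealed class InMemoryBluetoothService : IBluetoothService
{
    private readonly IList<RawFitnessData> _samples;

    public InMemoryBluetoothService(IList<RawFitnessData> samples)
    {
        _samples = samples ?? throw new ArgumentNullException(nameof(samples));
    }

    public IList<RawFitnessData> GetFitnessData() => _samples;
}
```

Because HeartRateSummary receives the port through its constructor, the same class works unchanged with an Android adapter, an iOS adapter, or the in-memory stand-in used in unit tests.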

Ingredient 2: Domain-Driven Design

Ports-and-adapters architectures isolate the business logic of an application. How should you now implement this component? How do you structure it? Should you create one component covering the whole business logic? Many questions arise and luckily, I have an answer for them: Domain-driven design, also known as DDD.

For modeling and structuring business logic components, domain-driven design is a standard process. It pursues a very close collaboration between software developers and domain experts. One goal governs this close collaboration: aligning the software design, or software model, with the model of the business domain the software supports. This sounds easy and clear. Nevertheless, many of us (including myself) have failed at this task.

The reason: We are developers, not domain experts! Obvious, isn't it? However, if the goal is to align the software model with the business domain, then we must talk a lot to the domain experts to grasp their knowledge and reflect it in our design. For that purpose, DDD suggests that developers and domain experts first agree on a shared language, referred to as the ubiquitous language. Both use only the terms from this language when discussing the business domain, to prevent misunderstandings from the beginning. To transform such discussions into a meaningful model, DDD provides a rich tool set of tactical and strategic patterns.

The tactical patterns help you to come up with a software design, also referred to as domain model, which captures the relationships of objects within the business domain. Because not all objects within the business domain are of the same kind, the tactical patterns feature several different types of model elements, including (but not limited to):

- Entities characterize objects with an identity throughout their life cycle.

- Value-objects are objects without such an identity, where only the attributes of the object matter.

- Aggregates characterize object graphs that appear as a single object. In general, they build around invariants.

- Repositories correspond to objects that persist and query aggregates.

These types help you to design the domain model.

Too abstract? Let's try it out on a concrete example. Consider again the Fitness Tracker app from before. The domain expert, a running professional, gives us the following insights: “Each run depends on my condition on that day, so two runs are never the same for me. When I go for a run, I want to see how long I need for each individual lap I run. An individual lap is at most a quarter mile long. Sometimes I want to decide on my own when the lap ends. Further, I would like to visualize my heart rate after the run.” Of course, I'm simplifying here for the sake of clarity. A real-world model would be much more complex.

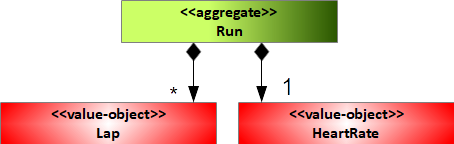

Anyway, from the conversation with the running professional, I infer that there should be something like a Run object within the domain model. Further, I identify individual laps, so a Lap object wouldn't hurt. The length of such a lap is up to the runner, but at most a quarter mile. Finally, the running professional wants to visualize his heart rate afterwards, so I add a HeartRate object to the domain model. Which type do you associate with each of these objects and how do they relate?

The Lap and HeartRate objects correspond to value-objects because they have no meaningful identity to the runner. They only make sense in the context of a specific run. However, the Run object has an identity to the runner (“Each run depends on my condition on that day, so two runs are never the same for me”). Importantly, Run implicitly also defines two invariants:

- There is at least one Lap for each run (“When I go for a run, I want to see how long I need for each individual lap I run”).

- Each run comes with a HeartRate object (“Further, I would like to visualize my heart rate after the run”).

Therefore, the Run object turns out as an aggregate. For the full domain model, see Figure 4.
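The following sketch shows one way these tactical patterns could look in C#. Run, Lap, and HeartRate are the names from the example; all members (LengthInMiles, Duration, AverageBpm, MaxBpm, TotalDistanceInMiles) are assumptions, because the article only describes the model informally. The sketch sticks to C# 8 syntax so that it also compiles in a .NET Standard assembly.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Value object: no identity, equality is defined by the attributes alone.
public sealed class Lap : IEquatable<Lap>
{
    public const double MaxLengthInMiles = 0.25; // "at most a quarter mile"

    public Lap(double lengthInMiles, TimeSpan duration)
    {
        if (lengthInMiles <= 0 || lengthInMiles > MaxLengthInMiles)
            throw new ArgumentOutOfRangeException(nameof(lengthInMiles));
        if (duration <= TimeSpan.Zero)
            throw new ArgumentOutOfRangeException(nameof(duration));
        LengthInMiles = lengthInMiles;
        Duration = duration;
    }

    public double LengthInMiles { get; }
    public TimeSpan Duration { get; }

    public bool Equals(Lap other) =>
        !(other is null) && LengthInMiles == other.LengthInMiles && Duration == other.Duration;
    public override bool Equals(object obj) => Equals(obj as Lap);
    public override int GetHashCode() => HashCode.Combine(LengthInMiles, Duration);
}

// Value object with hypothetical attributes.
public sealed class HeartRate : IEquatable<HeartRate>
{
    public HeartRate(int averageBpm, int maxBpm)
    {
        if (averageBpm <= 0 || maxBpm < averageBpm)
            throw new ArgumentOutOfRangeException(nameof(averageBpm));
        AverageBpm = averageBpm;
        MaxBpm = maxBpm;
    }

    public int AverageBpm { get; }
    public int MaxBpm { get; }

    public bool Equals(HeartRate other) =>
        !(other is null) && AverageBpm == other.AverageBpm && MaxBpm == other.MaxBpm;
    public override bool Equals(object obj) => Equals(obj as HeartRate);
    public override int GetHashCode() => HashCode.Combine(AverageBpm, MaxBpm);
}

// Aggregate root: has an identity and guards the two invariants.
public sealed class Run
{
    private readonly List<Lap> _laps;

    public Run(Guid id, IEnumerable<Lap> laps, HeartRate heartRate)
    {
        if (laps == null) throw new ArgumentNullException(nameof(laps));
        _laps = new List<Lap>(laps);
        if (_laps.Count == 0) // invariant 1: at least one lap
            throw new ArgumentException("A run needs at least one lap.", nameof(laps));
        // invariant 2: every run comes with a heart rate
        HeartRate = heartRate ?? throw new ArgumentNullException(nameof(heartRate));
        Id = id;
    }

    public Guid Id { get; }
    public HeartRate HeartRate { get; }
    public IReadOnlyList<Lap> Laps => _laps.AsReadOnly();
    public double TotalDistanceInMiles => _laps.Sum(lap => lap.LengthInMiles);
}
```

Two Lap instances with the same attributes compare as equal, whereas two Run instances are distinguished by their Id, even if their laps happen to match.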

The strategic patterns in domain-driven design tell you to which part of a domain a concrete domain model applies. In most cases, the business domain breaks down into smaller units, or sub-domains. Such individual sub-domains address a certain area of the whole business, and the objects within them form a highly cohesive part with very few relationships to other sub-domains. Very often, sub-domains emerge from organizational structures or individual areas of a business. DDD defines the notion of a so-called bounded context for such sub-domains, which ultimately contains the business logic to support them in software. Therefore, the whole business logic of an application designed using DDD comprises several loosely coupled bounded context components.

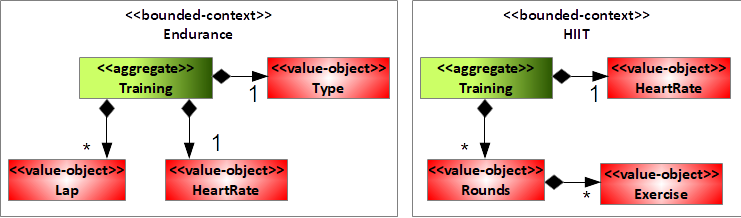

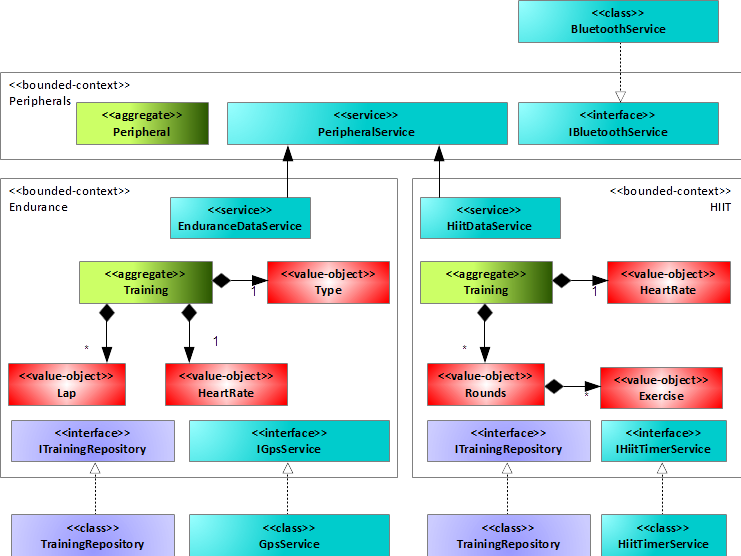

Let's work this concept out using the Fitness Tracker app. The product owner decides that the app in the next release should additionally support HIIT (high intensity interval training) workouts and biking. After several rounds of discussions with the domain experts, the software developers conclude that the business domain breaks down into two sub-domains: HIIT and Endurance (running and biking have a lot in common in this simplified example). Each of them comes with a domain model, and the models remain independent. Figure 5 depicts the result.

This concept now serves as starting point for your software product line framework.

Let's Cook It Up and Prepare the Dish!

Ports-and-adapters and domain-driven design provide the main ingredients to the dish: a software product line framework for multiple platforms. How? Here is the recipe:

- Identify your business domain and break it down into smaller sub-domains.

- Come up with a domain model for each of the sub-domains, leading to a bounded context.

- Every time the domain model needs to interact with the environment, create a port for it.

- Implement the domain model of each bounded context in a platform-independent assembly.

The first step of this recipe defines the scope of the whole software product line. It declares which sub-domains you'll address with your framework. Step two establishes on the one hand a design for each individual sub-domain, and on the other hand it settles the implementation boundaries in terms of assemblies. Hence, the second step sets the granularity of your software product line framework. The third step in the recipe turns your software product line framework into a real multi-platform dish because it eliminates all environment dependencies from your bounded contexts. Finally, the implementation of step four provides you with a set of reusable assemblies for multiple platforms.

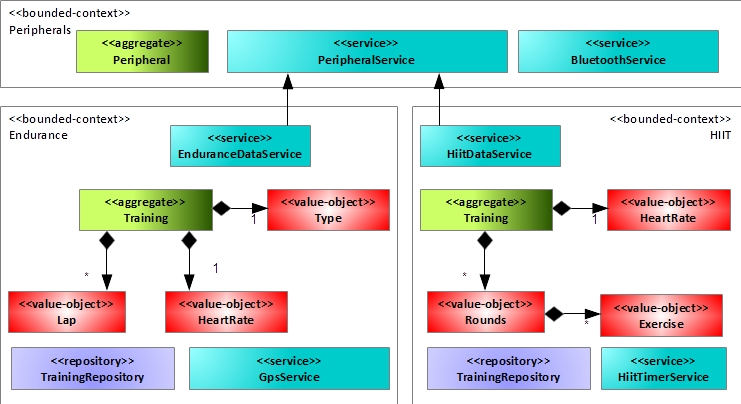

Let's try the recipe together with the Fitness Tracker App from before. You start by adding repositories to the bounded contexts, because the app should store the fitness data somewhere. Recall that the app reads the fitness data from a peripheral Bluetooth device, as Figure 3 indicates with the IBluetoothService interface. You further add in the Endurance bounded context a service to read GPS data from the mobile phone to track the activity (unfortunately there's no GPS tracker included in the peripheral device), and you add a so-called HIIT timer service to the HIIT bounded context. For the communication with the fitness tracker peripheral, you create a new bounded context, the Peripherals bounded context. This bounded context supports the proprietary protocols and data formats that the fitness tracker peripheral implements, independent of the communication interface like Bluetooth. Observe that the data services of the Endurance and HIIT bounded contexts use the PeripheralService from the Peripherals bounded context to read data from the peripheral. Figure 6 summarizes the new situation.

Figure 6 is the output of step two of the recipe, providing you with domain models for the bounded contexts Endurance, HIIT, and Peripherals. It leads to an interesting insight: The Endurance and HIIT bounded contexts both depend on the Peripherals bounded context. That means that whenever you want to use either of them, you need to include the Peripherals bounded context. Nevertheless, this design already is a (not yet multi-platform) software product line framework! But why?

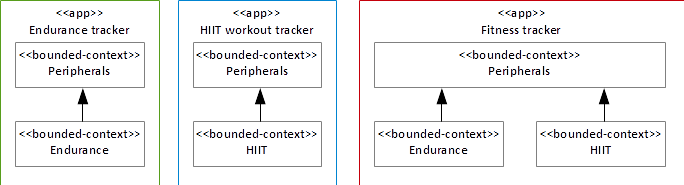

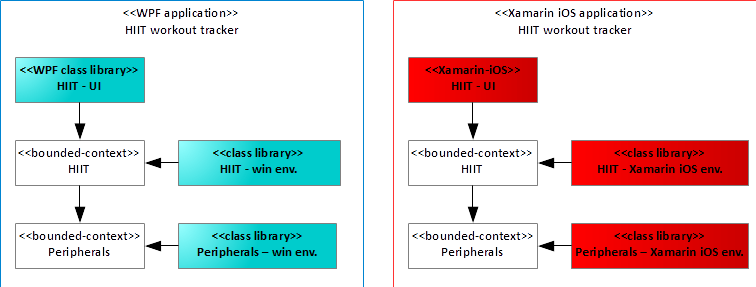

The design of individual bounded contexts with very few relationships among each other allows you to assemble new applications from them in a modular way. You build new applications by including only the bounded contexts (and their dependencies) that you want to deliver in the new application instead of all of them. For instance, if the business decides to deliver a small app just including endurance training, then the app comprises the Endurance and Peripherals bounded contexts (“Endurance tracker app”). Or, if the business decides to deliver another app just supporting HIIT workouts, then it comprises the HIIT and Peripherals bounded contexts (“HIIT workout tracker app”). Finally, the premium version could feature all bounded contexts (“Fitness tracker app”). Figure 7 shows the different configurations of the framework into concrete software products.

To turn the framework now into a multi-platform dish, you need to apply step three of the recipe. As Figure 6 shows, the bounded contexts interact at several points with the environment. Specifically:

- Endurance bounded context: TrainingRepository, GpsService

- HIIT bounded context: TrainingRepository, HiitTimerService

- Peripherals bounded context: BluetoothService

The TrainingRepository classes depend on the storage technology the app uses, whereas the classes GpsService, HiitTimerService, and BluetoothService depend on the platform the app runs on. Therefore, if you want to make the framework platform-independent, you must push the implementations of all the repositories and all the services to the environment. This means turning the aforementioned repository and service implementations into interfaces and implementing them as adapters outside the bounded contexts, which yields a multi-platform software product line framework (see Figure 8).
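As a rough illustration of step three for the Endurance bounded context, the sketch below turns the repository and the GPS service into ports and moves their implementations into adapters. The interface names ITrainingRepository and IGpsService follow the usual C# naming convention for the TrainingRepository and GpsService classes mentioned above. Their members, the Training and GpsPosition stand-ins, the TrainingStarter class, and both adapters are assumptions made for illustration.

```csharp
using System;
using System.Collections.Generic;

// Minimal stand-ins for domain types of the Endurance bounded context.
public sealed class GpsPosition
{
    public GpsPosition(double latitude, double longitude)
    {
        Latitude = latitude;
        Longitude = longitude;
    }

    public double Latitude { get; }
    public double Longitude { get; }
}

public sealed class Training
{
    public Training(Guid id, GpsPosition startPosition)
    {
        Id = id;
        StartPosition = startPosition;
    }

    public Guid Id { get; }
    public GpsPosition StartPosition { get; }
}

// Ports: the only things the bounded context knows about its environment.
public interface ITrainingRepository
{
    void Save(Training training);
    Training FindById(Guid id); // returns null if the training is unknown
}

public interface IGpsService
{
    GpsPosition GetCurrentPosition();
}

// Business logic (hypothetical): written purely against the ports.
public sealed class TrainingStarter
{
    private readonly ITrainingRepository _repository;
    private readonly IGpsService _gps;

    public TrainingStarter(ITrainingRepository repository, IGpsService gps)
    {
        _repository = repository ?? throw new ArgumentNullException(nameof(repository));
        _gps = gps ?? throw new ArgumentNullException(nameof(gps));
    }

    public Training Start()
    {
        var training = new Training(Guid.NewGuid(), _gps.GetCurrentPosition());
        _repository.Save(training);
        return training;
    }
}

// Adapters: live in the environment. These stand-ins replace a real database
// and a real platform GPS API.
public sealed class InMemoryTrainingRepository : ITrainingRepository
{
    private readonly Dictionary<Guid, Training> _store = new Dictionary<Guid, Training>();

    public void Save(Training training) => _store[training.Id] = training;

    public Training FindById(Guid id) =>
        _store.TryGetValue(id, out var training) ? training : null;
}

public sealed class FixedGpsService : IGpsService
{
    private readonly GpsPosition _position;

    public FixedGpsService(GpsPosition position) => _position = position;

    public GpsPosition GetCurrentPosition() => _position;
}
```

Swapping InMemoryTrainingRepository for a database-backed adapter, or FixedGpsService for a platform-specific one, requires no change to TrainingStarter.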

The final step in the recipe is rather trivial because the bounded contexts are prepared to be platform independent. You simply choose a platform-independent framework like .NET Standard as your target framework.

Why Is This Now So Useful? Select a Restaurant and Eat!

You may wonder “What's the purpose of doing this? Why is it really useful for me?” In the following, I again pick up the food metaphor to detail the advantages for you.

I use the following starting point: You transformed the whole business logic into a multi-platform software product line framework thanks to the use of the .NET Standard TFM (target framework moniker) for the bounded context assemblies. From them, you can build multiple applications depending on your needs. What's so powerful about it is that you're even free to choose the platform of the application, which corresponds to the environment in which the application runs. It's like choosing the restaurant in which you want to enjoy your dish.

Let's be more precise about it and consider the Fitness Tracker app again. Suppose you must deliver the HIIT feature to mobile phones, but also Windows desktop users. The bounded contexts you need to include in the applications are HIIT and Peripherals, both available as .NET Standard assemblies. Apart from that, you only need to develop the user interface and the environment for these bounded contexts, and you ship the new applications to the customers. What's more, whenever you identify a bug in one of the bounded contexts, it requires only a single fix. Figure 9 summarizes the situation.

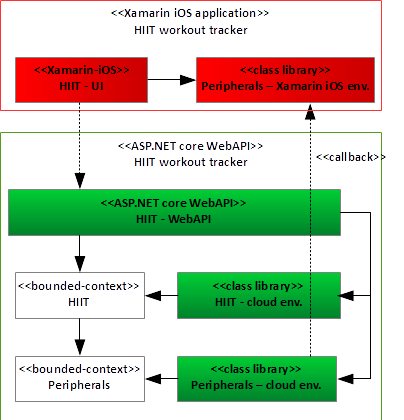

But there's even more. You apply the same idea to turn the Fitness tracker app into a cloud product. It's amazingly straightforward: You replace the “HIIT–UI” assemblies of Figure 9 with ASP.NET Core assemblies full of Controllers, which delegate incoming calls to the bounded context HIIT. Further, you implement the environment of your bounded contexts using ASP.NET Core, thereby providing the missing functionality. This transformation, i.e., pushing the business logic of an app into the cloud, leads to an interesting architectural insight: Front-end clients don't need much business logic, because most of it runs in the cloud. Clients solely implement user interfaces, plus some residual interaction logic with the cloud. Figure 10 shows the basic idea.

As you can see in Figure 10, you should introduce a callback mechanism using, for example, gRPC to remotely perform actions on the client device, especially at points where your bounded context implementation requires a client interaction with real hardware.

That's powerful, and offers you several advantages:

- Clients get slim, carrying less business logic.

- Changes happen centralized in the cloud.

- Changes are more easily traceable.

- Composition of new client applications is easy.

How to Migrate Business Logic to Such a Framework

Now comes the tricky bit. Most of us don't have a green field. We must deal with an old code base, probably grown over years. Very often the code base involves even different technologies, like WPF mixed with WinForms, WCF communication channels with REST APIs, etc. What should you do to get to a multi-platform software product line framework? In the following, I outline a strategy to slowly migrate to a clean solution, also considering the latest .NET 5 development. But before that, I give a short recap about important features of .NET 5.

First, .NET 5 is, in terms of target platform, the successor of .NET Core. It unifies the developer's view of .NET Framework, .NET Standard, and .NET Core apps. However, when you need to write assemblies that require platform-dependent code, like WPF for Windows Desktop, you add support for these functionalities by specifying net5.0-{platform} as the TFM (for example, net5.0-windows). Second, .NET 5 supports C# 9.0, which comes with a lot of interesting new language features like records or relational pattern matching. Third, C# source generators are an exciting new compiler feature.

At its heart, your software supports a business. Therefore, you value your business logic the most. For your migration out of the nightmare, you must now tackle two situations:

- Writing new business logic

- Migrating existing business logic

Writing New Business Logic

When writing new bounded contexts, you start from scratch. That's great, because you apply the patterns and principles from domain-driven design to get to a clean model that you consequently implement in .NET Standard. This enables multi-platform bounded contexts implementations, clean and reusable, as shown before. To integrate with your existing code base, you need an additional technique from domain-driven design known as anti-corruption layers.

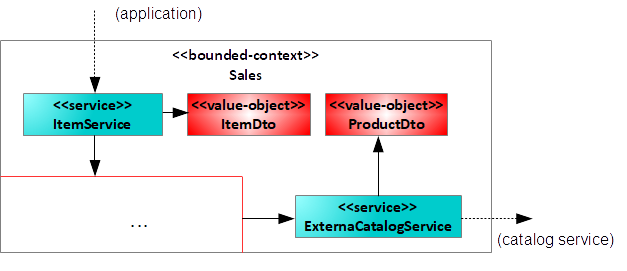

Anti-corruption layers shield bounded contexts from legacy systems. They equip them with a protection shell to decouple the domain model from the legacy model. The responsibility of such a layer is simple: Provide a well-defined view on the new bounded context (inbound facade) and offer a well-defined view onto the legacy system (outbound facade). This also includes defining data-transfer objects between new bounded context and legacy systems to achieve model independence. The facades convert between the models in terms of data-transfer objects.

Consider, for example, that the product owner of the Fitness tracker app now also wants to sell products directly in the application. For that purpose, he explains how the sub-domain “Sales” works and that he has an old item catalog system in place, which he intends to use as the inventory for all products. Hence, the new bounded context needs to communicate with this legacy third-party system to present a list of available products to the user. To protect the new sales domain model from any form of influence by the legacy system, I create two services with corresponding data-transfer objects. See Figure 11.
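A minimal sketch of such an anti-corruption layer could look as follows. Every name in it is hypothetical, because the article shows the design only in Figure 11: ILegacyItemCatalog and LegacyCatalogRecord stand in for the old item catalog system, InventoryFacade plays the role of the outbound facade, SalesFacade plays the role of the inbound facade, and Product is a placeholder for the new sales domain model.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Legacy side: hypothetical stand-in for the old item catalog system.
public sealed class LegacyCatalogRecord
{
    public string ITEM_NO { get; set; }
    public string DESCR { get; set; }
    public int PRICE_CENTS { get; set; }
    public int STOCK { get; set; }
}

public interface ILegacyItemCatalog
{
    IEnumerable<LegacyCatalogRecord> ReadAll();
}

// Canned legacy catalog, used here instead of the real system.
public sealed class ListBackedCatalog : ILegacyItemCatalog
{
    private readonly List<LegacyCatalogRecord> _records;
    public ListBackedCatalog(IEnumerable<LegacyCatalogRecord> records) =>
        _records = new List<LegacyCatalogRecord>(records);
    public IEnumerable<LegacyCatalogRecord> ReadAll() => _records;
}

// Data-transfer objects that cross the boundaries of the bounded context.
public sealed class InventoryItemDto
{
    public string Sku { get; set; }
    public string Name { get; set; }
    public decimal Price { get; set; }
}

public sealed class ProductDto
{
    public string Name { get; set; }
    public decimal Price { get; set; }
}

// New domain model (placeholder): knows nothing about legacy field names.
public sealed class Product
{
    public Product(string sku, string name, decimal price)
    {
        if (price < 0) throw new ArgumentOutOfRangeException(nameof(price));
        Sku = sku;
        Name = name;
        Price = price;
    }

    public string Sku { get; }
    public string Name { get; }
    public decimal Price { get; }
}

// Outbound facade: translates the legacy model into data-transfer objects.
public sealed class InventoryFacade
{
    private readonly ILegacyItemCatalog _catalog;
    public InventoryFacade(ILegacyItemCatalog catalog) => _catalog = catalog;

    public IReadOnlyList<InventoryItemDto> GetItemsInStock() =>
        _catalog.ReadAll()
            .Where(record => record.STOCK > 0)
            .Select(record => new InventoryItemDto
            {
                Sku = record.ITEM_NO,
                Name = record.DESCR,
                Price = record.PRICE_CENTS / 100m
            })
            .ToList();
}

// Inbound facade: the only entry point applications use.
public sealed class SalesFacade
{
    private readonly InventoryFacade _inventory;
    public SalesFacade(InventoryFacade inventory) => _inventory = inventory;

    public IReadOnlyList<ProductDto> GetAvailableProducts() =>
        _inventory.GetItemsInStock()
            .Select(item => new Product(item.Sku, item.Name, item.Price)) // DTO to domain
            .Select(product => new ProductDto { Name = product.Name, Price = product.Price })
            .ToList();
}
```

The legacy field names (ITEM_NO, PRICE_CENTS, and so on) stop at InventoryFacade, so a change in the old catalog affects only that one class.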

Later, I'll split the bounded-context implementation into two parts: one part containing the anti-corruption layer and one part containing the implementation of the domain model. This originates from the following idea: The anti-corruption layer acts as some form of front-end and back-end at the same time because it protects the domain model from the outside world (incoming/outgoing). It enables you to evolve the domain model itself without breaking clients consuming it, but also to change it without worrying about services that it relies on. Usually, such layers contain very little code, which makes the assemblies rather small.

Migrating Existing Business Logic

The situation is way more involved when dealing with existing business logic that lives somewhere within the big-ball-of-mud. I can also provide you a way out for such scenarios. This way involves the following steps:

- Identify which classes implement business logic for the bounded context you want to migrate.

- Define a domain model for the bounded context that you want to migrate (including the abstractions for the environment).

- Define an anti-corruption layer for your new bounded context.

- Migrate to an anemic domain model.

- Migrate to a rich domain model.

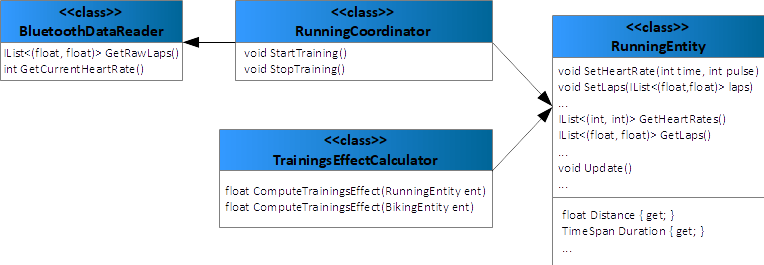

Let me explain the five steps in more depth with an example from the Fitness Tracker app. Consider the design of Figure 12. I highly simplify the situation for the sake of clarity.

The design contains the following classes:

- RunningCoordinator: This class starts and stops the run. On starting the run, it creates a new instance of RunningEntity, and then polls the peripheral, in a background thread via Bluetooth, for information regarding laps and the current heart rate. It then writes these values into the RunningEntity.

- RunningEntity: This class stores the heart rate and the laps. It also provides the properties the user cares about to the user interface: distance and duration of the run. It also contains methods for updating the RunningEntity instance in the database.

- TrainingEffectCalculator: This class determines the training effect of the run afterwards. For that purpose, it gets the heart rate and the laps of the run to determine an overall training effect from it.

- BluetoothDataReader: This class reads the raw information about a run from the peripheral device.

The classes RunningEntity and TrainingEffectCalculator implement the business logic in this example. However, the business logic is in bad shape. Additionally, the RunningEntity class contains persistence code within the Update method that you need to extract.

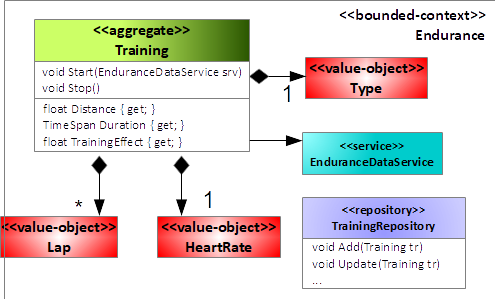

After several discussions with the domain expert, you come up with a new domain model for the “Endurance” sub-domain, which Figure 13 depicts.

The responsibilities of each model element are now clearly defined:

- Training: The application services interact with this aggregate. It offers only two methods: One to start the training and one to stop the training. On executing the Start method, this class creates a new background thread to read the raw values from the EnduranceDataService, which abstracts the peripheral from the “Endurance” sub-domain. Furthermore, the computation of the training effect depends on the children of the Training aggregate only, and, therefore, it also moves to this aggregate. Finally, the logic that the bounded context requires to store, delete, or query for Training moves to the TrainingRepository.

- Lap, HeartRate, Type: These value-objects define some of the properties of a training. To interact with these children of Training, services always need to pass through the aggregate Training and the interface it offers.

- EnduranceDataService: Abstraction of the peripheral with respect to the “Endurance” sub-domain. It makes sure that the domain model of the “Endurance” sub-domain does not explicitly depend on concrete peripherals.

- TrainingRepository: Like EnduranceDataService, but it abstracts the persistence technology with respect to the “Endurance” sub-domain.

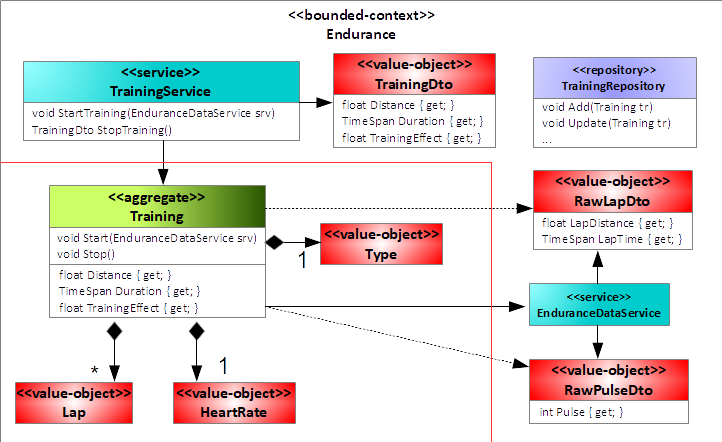

Next, you define an anti-corruption layer for the new domain model. This involves two things. First, you define data-transfer objects wherever necessary to protect the model, and second, you implement facades to hide from legacy systems. In the example, this means:

- You define data-transfer objects and services for accessing the domain model to protect it from the applications that use it. For this example, you introduce the class TrainingService offering both StartTraining and StopTraining methods. The StopTraining method returns a TrainingDto data-transfer object summarizing the training for the application.

- You define data-transfer objects for the communication with the peripheral via the class EnduranceDataService. The class EnduranceDataService is then in charge of converting the values it gathers from the peripheral into these data-transfer objects.

Figure 14 shows the result after this step.
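The inbound side of this anti-corruption layer can be sketched as follows. TrainingService, StartTraining, StopTraining, and TrainingDto are the names used above. The properties of TrainingDto, the reduced Training stand-in, and the injected clock delegate are assumptions; the clock is there only to keep the sketch deterministic, and the background thread of the real aggregate is left out.

```csharp
using System;

// Data-transfer object handed to the application; its properties are assumptions.
public sealed class TrainingDto
{
    public Guid TrainingId { get; set; }
    public TimeSpan Duration { get; set; }
}

// Heavily reduced stand-in for the Training aggregate of Figure 13.
public sealed class Training
{
    private DateTime _startedAt;

    public Guid Id { get; } = Guid.NewGuid();
    public TimeSpan Duration { get; private set; }

    public void Start(DateTime now) => _startedAt = now;
    public void Stop(DateTime now) => Duration = now - _startedAt;
}

// Inbound facade: applications talk to this service and only ever see DTOs.
public sealed class TrainingService
{
    private readonly Func<DateTime> _clock; // hypothetical clock abstraction
    private Training _current;

    public TrainingService(Func<DateTime> clock)
    {
        _clock = clock ?? throw new ArgumentNullException(nameof(clock));
    }

    public void StartTraining()
    {
        if (_current != null)
            throw new InvalidOperationException("A training is already running.");
        _current = new Training();
        _current.Start(_clock());
    }

    public TrainingDto StopTraining()
    {
        if (_current == null)
            throw new InvalidOperationException("No training is running.");
        _current.Stop(_clock());

        // Convert the aggregate into a DTO so that the domain model never
        // leaks into the application.
        var dto = new TrainingDto { TrainingId = _current.Id, Duration = _current.Duration };
        _current = null;
        return dto;
    }
}
```

Because the application receives only a TrainingDto, the Training aggregate can change its internal structure without breaking any caller of TrainingService.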

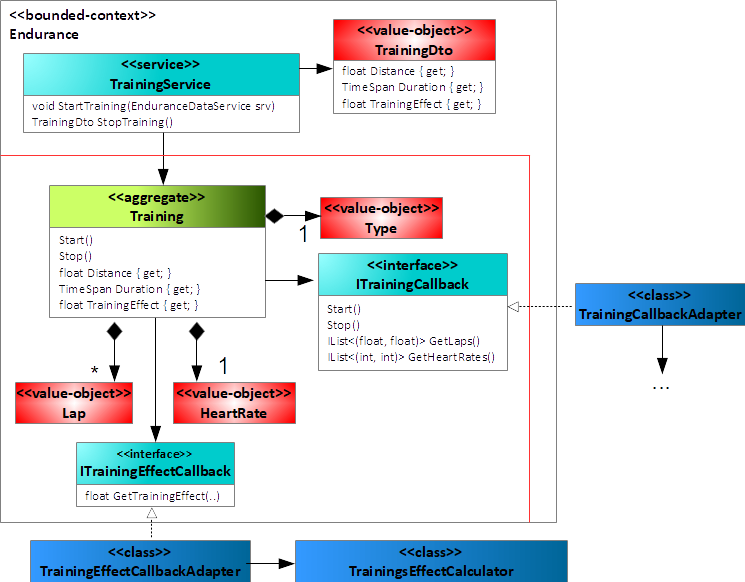

You move the business logic now in two steps: First you migrate to an anemic domain model (excluding the outbound facade and data-transfer objects for them), and then you migrate to a rich domain model. What does it mean to migrate first to an anemic domain model? It means that you migrate the structure and properties of the new domain model first without migrating the methods and dynamics. You easily achieve this by introducing “callbacks” (essentially C# interfaces) to the legacy code base that abstract the business logic to determine some properties. Implementations of these callbacks reside within the legacy code base.

For example, the property TrainingEffect of the new domain model in Figure 14 depends on the business logic that the class TrainingEffectCalculator of Figure 12 implements. To access this business logic within the new domain model, you introduce the interface ITrainingEffectCallback with the method GetTrainingEffect. Similarly, the Training aggregate uses the interface ITrainingCallback to start and stop the training, and also to get the data for the laps and the heart rate (see Figure 15).
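The sketch below illustrates the anemic intermediate state. ITrainingEffectCallback, GetTrainingEffect, ITrainingCallback, Training, and TrainingEffectCalculator are names from the article. All parameter lists, the additional callback methods, the CannedTrainingCallback class, and in particular the formula inside TrainingEffectCalculator are placeholders chosen for illustration, not the legacy code's real behavior.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Callbacks declared in the new bounded context (parameter lists are assumptions).
public interface ITrainingEffectCallback
{
    double GetTrainingEffect(IReadOnlyList<TimeSpan> laps, IReadOnlyList<int> heartRates);
}

public interface ITrainingCallback
{
    void Start();
    void Stop();
    IReadOnlyList<TimeSpan> GetLaps();
    IReadOnlyList<int> GetHeartRates();
}

// Anemic aggregate: it already has the new structure and properties,
// but all behavior is still delegated to the legacy code base.
public sealed class Training
{
    private readonly ITrainingCallback _training;
    private readonly ITrainingEffectCallback _effect;

    public Training(ITrainingCallback training, ITrainingEffectCallback effect)
    {
        _training = training ?? throw new ArgumentNullException(nameof(training));
        _effect = effect ?? throw new ArgumentNullException(nameof(effect));
    }

    public IReadOnlyList<TimeSpan> Laps { get; private set; } = new List<TimeSpan>();
    public IReadOnlyList<int> HeartRates { get; private set; } = new List<int>();
    public double TrainingEffect { get; private set; }

    public void Start() => _training.Start();

    public void Stop()
    {
        _training.Stop();
        Laps = _training.GetLaps();
        HeartRates = _training.GetHeartRates();
        TrainingEffect = _effect.GetTrainingEffect(Laps, HeartRates);
    }
}

// --- Legacy code base: implementations of the callbacks ---

// Stand-in for the legacy calculator; the formula is a placeholder.
public sealed class TrainingEffectCalculator
{
    public double Calculate(int[] heartRates, int lapCount) =>
        heartRates.Length == 0 ? 0.0 : heartRates.Average() * lapCount / 100.0;
}

public sealed class LegacyTrainingEffectCallback : ITrainingEffectCallback
{
    private readonly TrainingEffectCalculator _calculator = new TrainingEffectCalculator();

    public double GetTrainingEffect(IReadOnlyList<TimeSpan> laps, IReadOnlyList<int> heartRates) =>
        _calculator.Calculate(heartRates.ToArray(), laps.Count);
}

// Stand-in for an adapter around RunningCoordinator; it returns canned values.
public sealed class CannedTrainingCallback : ITrainingCallback
{
    public void Start() { }
    public void Stop() { }
    public IReadOnlyList<TimeSpan> GetLaps() =>
        new List<TimeSpan> { TimeSpan.FromMinutes(2), TimeSpan.FromMinutes(3) };
    public IReadOnlyList<int> GetHeartRates() => new List<int> { 100, 140 };
}
```

Moving to the rich domain model then amounts to replacing each callback invocation inside Training with the migrated logic and deleting the corresponding interface.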

Before you migrate and start coding, you need to choose which platforms the framework should support. Because you want to support multiple platforms, the choice is between .NET Standard and .NET 5. If you further consider mobile phones as a target platform, you choose .NET Standard as the TFM for the bounded context assemblies to stay compatible with Xamarin.Forms.

Importantly, note that after this step, you have a running software product that implements a clean structural model. That's great, because even though you only completed half of the migration (implementation-wise), you're still able to deliver to your customer. But the more valuable outcome, certainly, is that the structure of the “refactored” bounded context aligns with the structure of the domain it supports, and you already brought the bounded context into the shape that the multi-platform software product framework demands. Finally, you migrate the anemic domain model to the one of Figure 13 by removing all the callback interfaces from the bounded context and moving the business logic from the legacy code base. This last step completes the migration. After that step, you again have a running software application that you deliver to your customer.

Applying these steps continuously to all the business logic of an entire application smoothly migrates the whole software to a multi-platform software product line framework, thanks to the use of .NET Standard as TFM. You can be a bit more precise and use .NET 5 at some points.

Anti-Corruption Layers in .NET 5

One of the requirements of the multi-platform software product line framework was that, in principle, applications should run on any platform. Simply using .NET 5 as the TFM for the bounded context assemblies will, unfortunately, not do the trick, because it lacks full support for mobile apps built with Xamarin.Forms, for example. I also don't want to push the use of .NET 5 too much, because the goal is still the reuse of assemblies rather than jumping on new technologies.

However, at some points, it makes sense to apply .NET 5, for example, for the anti-corruption layers. Because these layers are small and usually contain very little code, it makes sense to split this part of the bounded context implementations apart and offer them as NuGet packages to your application developers. This has one big advantage: You can choose to implement the anti-corruption layer both as a .NET Standard assembly and as a .NET 5 assembly. In the .NET 5 assembly, you can use C# 9 as the language, whereas in .NET Standard, you still use C# 8 (with .NET Standard 2.1).
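One possible way to serve both targets from a single source file is conditional compilation, sketched below. It assumes a project that multi-targets netstandard2.1 and net5.0 and relies on the NETSTANDARD symbol that the .NET SDK defines for .NET Standard targets. The TrainingDto members are assumptions carried over for illustration.

```csharp
using System;

#if NETSTANDARD
// C# 8 on .NET Standard 2.1: the data-transfer object written out by hand.
public sealed class TrainingDto : IEquatable<TrainingDto>
{
    public TrainingDto(Guid trainingId, TimeSpan duration, double trainingEffect)
    {
        TrainingId = trainingId;
        Duration = duration;
        TrainingEffect = trainingEffect;
    }

    public Guid TrainingId { get; }
    public TimeSpan Duration { get; }
    public double TrainingEffect { get; }

    public bool Equals(TrainingDto other) =>
        !(other is null)
        && TrainingId == other.TrainingId
        && Duration == other.Duration
        && TrainingEffect == other.TrainingEffect;

    public override bool Equals(object obj) => Equals(obj as TrainingDto);

    public override int GetHashCode() =>
        HashCode.Combine(TrainingId, Duration, TrainingEffect);
}
#else
// C# 9 on .NET 5: a positional record provides the constructor, the
// init-only properties, and value equality in a single line.
public sealed record TrainingDto(Guid TrainingId, TimeSpan Duration, double TrainingEffect);
#endif
```

Both variants expose the same constructor and the same property names, so code that creates or reads a TrainingDto compiles against either target.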

Ready, Set, Eat!

After successfully migrating your business logic to a multi-platform software product line framework, you easily compose new applications out of it. Whether you need to develop a mobile application, a desktop application, or a cloud service, all of them rely on the same framework. Because your framework uses .NET Standard, it supports all of these platforms.

Using such a modular framework also results in a straightforward decomposition of the user interface of an application. The structure of the framework somehow naturally also imposes a decomposition into components on the front-end. Which pattern, or which technologies, these front-end components use, you can freely choose. How should you deal with legacy front-ends that you want to migrate in the long term?

The framework offers a way out: After migrating the business logic that the front-end relies on, you also migrate the front-end. Ideally, such a migration affects only some views, or some controllers, the ones that essentially the user requires to interact with the business logic just migrated. To keep things clean, you place the new views into a dedicated assembly.

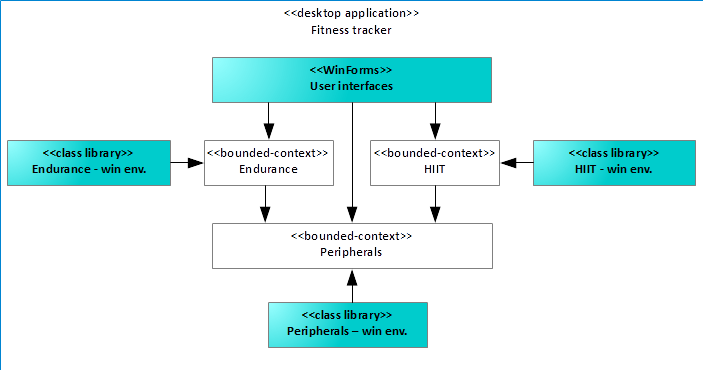

Suppose, for example, the Fitness Tracker desktop application was written using WinForms a long time ago, as Figure 16 illustrates. Now, after the refactoring to a multi-platform software product line framework, the product owner recognizes the value of refactoring as a key enabler for new applications and approves a migration of the user interface to WPF.

The Fitness Tracker application for the desktop is obviously in bad shape, because all user interfaces reside within one huge monolithic WinForms component. The user interface assembly accesses the individual bounded contexts to deliver value to the user of the application. The user interface still uses WinForms rather than WPF, and maybe even an outdated .NET version. It's awkward, because you now have clean business logic, but the user interface is still stuck in the Middle Ages. There is a way out.

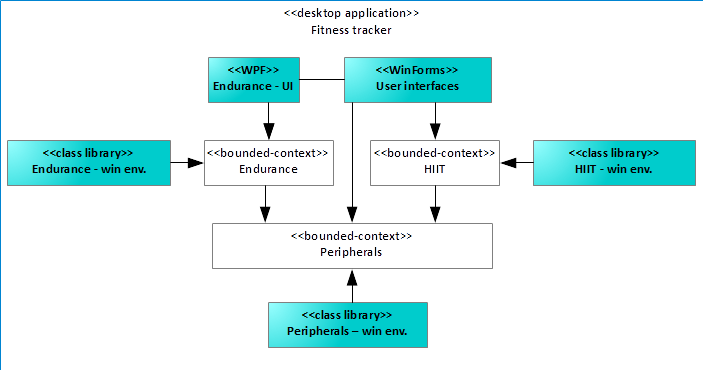

What about doing it stepwise? You start with the part your customers care most about: the endurance part of your application. In that case, you first migrate the views of the endurance part to WPF and place them into a WPF assembly. For that purpose, you use the TFM net5.0-windows, for example, because the business logic builds upon .NET Standard with an anti-corruption layer in .NET 5. Of course, where the new views must interact with the legacy user interface, you need to write some interaction logic, which means additional effort. The effort pays off, as you'll see soon. After extracting the endurance part of the application, the application looks like Figure 17.
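A project file for such a WPF assembly could look like the following sketch. The project names and the relative path are assumptions made for illustration and may differ from the structure of the accompanying repository.

```xml
<!-- Endurance.UI.csproj: hypothetical name for the assembly holding the new WPF views -->
<Project Sdk="Microsoft.NET.Sdk">
  <PropertyGroup>
    <!-- The Windows-specific TFM is required for WPF on .NET 5 -->
    <TargetFramework>net5.0-windows</TargetFramework>
    <UseWPF>true</UseWPF>
  </PropertyGroup>
  <ItemGroup>
    <!-- Hypothetical reference to the Endurance bounded context, which targets .NET Standard -->
    <ProjectReference Include="..\Endurance\Endurance.csproj" />
  </ItemGroup>
</Project>
```

Because a .NET 5 project can reference .NET Standard assemblies, the new views consume the migrated business logic directly, without any change to the bounded context itself.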

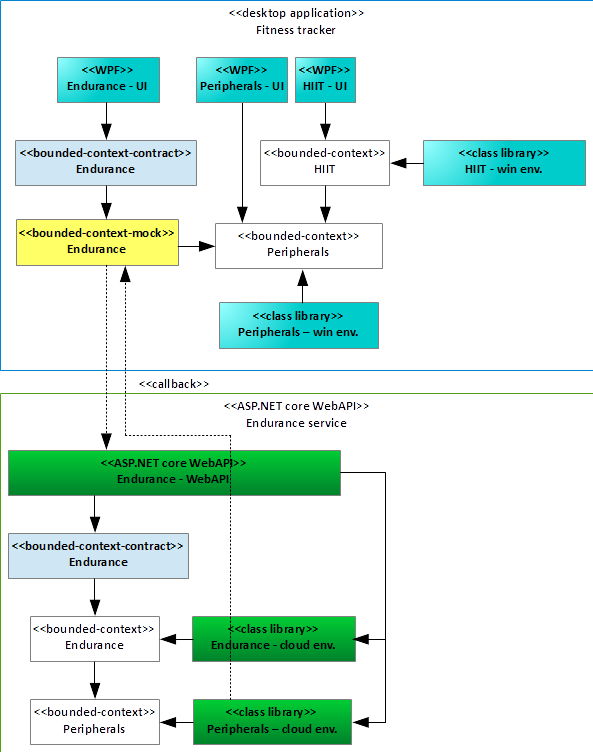

Applying this strategy repeatedly leads to a modular application. But there's even more, a final thought I want to point you to. Suppose you want to host the endurance part of your application in the cloud but keep the HIIT bounded context running locally. This is an interesting thought, especially when people want to compare their endurance results online.

With the design of Figure 17, you're in the right shape for this: In all applications containing the Endurance bounded context, you substitute it with an assembly that looks and smells like the original Endurance bounded context, i.e., it offers the same public interface. When a client consumes one of the services that this public interface specifies, the “service mock” bounded context makes a network call to a cloud service that hosts the actual Endurance bounded context.

Technically, you achieve this by splitting the bounded context into two parts: a contract part (containing the public interface to interact with the bounded context) and an implementation part. The implementation part references the contract assembly and implements the public interface that it specifies. All clients of the bounded context also reference the contract assembly, never the implementation. In other words, a client of the bounded context doesn't depend on a concrete implementation but only on the interface the bounded context offers. Importantly, for the user interface assembly “Endurance–UI” (WPF), it doesn't matter at all whether you handle invocations of the Endurance bounded context locally or remotely, because the assembly depends only on the contracts of the Endurance bounded context.

You can even use .NET 5 for this “service mock” bounded context as well as for the ASP.NET Core Web API (which I've chosen as the implementation technology for the cloud service). This framework choice is very convenient, because you can use C# 9 records to define the data-transfer objects exchanged between the “service mock” bounded context and the ASP.NET Core 5.0 Web API project. Figure 18 summarizes the architecture of the overall system.

By introducing a “contract” assembly that characterizes the public interface of the bounded context, you can exchange the implementation of a bounded context without breaking an existing client. Importantly, you may even run the business logic somewhere else entirely.
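The split into contract, implementation, and “service mock” can be sketched in C# 9 as follows. This is a minimal illustration, not the code from the accompanying repository: all type names, the assembly names in the comments, and the HTTP route are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Linq;
using System.Net.Http;
using System.Net.Http.Json;
using System.Threading.Tasks;

// --- Contract assembly (hypothetical name: Endurance.Contracts) ---
// A C# 9 record serves as the data-transfer object.
public record RunSummary(int RunCount, double TotalKilometers);

public interface IEnduranceService
{
    Task<RunSummary> GetSummaryAsync(string athleteId);
}

// --- Implementation assembly (hypothetical name: Endurance) ---
// Runs the business logic in-process on data handed in by the caller.
public sealed class LocalEnduranceService : IEnduranceService
{
    private readonly IReadOnlyDictionary<string, double[]> _runsByAthlete;

    public LocalEnduranceService(IReadOnlyDictionary<string, double[]> runsByAthlete)
        => _runsByAthlete = runsByAthlete;

    public Task<RunSummary> GetSummaryAsync(string athleteId)
    {
        var runs = _runsByAthlete.TryGetValue(athleteId, out var found)
            ? found
            : Array.Empty<double>();
        return Task.FromResult(new RunSummary(runs.Length, runs.Sum()));
    }
}

// --- "Service mock" assembly (hypothetical name: Endurance.Remote) ---
// Same contract, but every call is forwarded to a cloud service.
public sealed class RemoteEnduranceService : IEnduranceService
{
    private readonly HttpClient _http;

    // The HttpClient must have BaseAddress set, because the route below is relative.
    public RemoteEnduranceService(HttpClient http) => _http = http;

    public async Task<RunSummary> GetSummaryAsync(string athleteId)
    {
        // The route is an assumption made for this sketch.
        var summary = await _http.GetFromJsonAsync<RunSummary>(
            $"api/endurance/{Uri.EscapeDataString(athleteId)}/summary");
        return summary ?? throw new InvalidOperationException("The service returned no summary.");
    }
}

// --- Client, e.g. a view model in the Endurance UI assembly ---
// It references only the contract, never a concrete implementation.
public sealed class EnduranceViewModel
{
    private readonly IEnduranceService _service;

    public EnduranceViewModel(IEnduranceService service) => _service = service;

    public async Task<string> DescribeAsync(string athleteId)
    {
        var summary = await _service.GetSummaryAsync(athleteId);
        var kilometers = summary.TotalKilometers.ToString("0.0", CultureInfo.InvariantCulture);
        return $"{summary.RunCount} runs, {kilometers} km";
    }
}
```

The view model receives either `LocalEnduranceService` or `RemoteEnduranceService` through its constructor and cannot tell the difference. Moving the Endurance bounded context to the cloud then only changes the place where the application wires its dependencies together.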

In the same fashion, you can also let applications on other platforms connect to your Endurance service. Just implement the corresponding, potentially platform-dependent, “service mock” bounded context, and communicate with your new .NET 5 Endurance service.

Code Example

In the GitHub repository https://github.com/apirker/FitnessTracker, I provide an example of the fitness tracker domain which I presented here for you to explore the techniques and ideas of this article.

Summary

The techniques I presented in this article help you to slowly evolve your application into a multi-platform software product line framework. How do you achieve this? A short recap: You combine the ideas of the ports-and-adapters architectural style with domain-driven design to develop, or migrate to, pure business logic assemblies. Each of these assemblies supports one sub-domain, called a bounded context. Pushing all environment-specific implementations out of the bounded context gives you platform independence if your implementation targets .NET Standard. When you start on a green field, you can apply these techniques directly. However, when you must maintain a legacy code base, you migrate slowly to this architectural style by introducing anti-corruption layers where necessary. These layers keep the new domain models that emerge from the migration clean and isolated from the legacy code. The process ultimately leads you to the same goal: a multi-platform software product line framework.