My first experience with AWS was building a prototype for a website called Attachments.me. My friend Jesse Miller and I built the site over several weekends, and hosted it on a single EC2 instance. Two years, dozens of EC2 instances, and hundreds of thousands of users later, we’re still on AWS.

AWS by Example

AWS provides over thirty distinct services, spanning many of a web developer’s infrastructure needs. Rather than discuss each of these services, which would make for a long and boring article, I wanted to frame this piece around a toy project.

I set out to build a hosted asset pipeline. An asset pipeline performs pre-processing on your website’s resources. As an example, it might convert Sass (an abstraction on top of CSS) into compiled CSS assets.

I wanted the toy project to showcase several of the AWS offerings that I find the most useful:

- Route53 provides the domain name system

- ELB (Elastic Load Balancer) distributes traffic to the web servers

- EC2 (Elastic Compute Cloud) hosts the web servers, and runs the asset compilation processes

- S3 (Simple Storage Service) stores the compiled assets

- SQS (Simple Queue Service) distributes the asset compilation work

- SNS (Simple Notification Service) notifies servers when assets have been compiled

High-Level Functionality

Before diving into the implementation details, here’s a high-level overview of what the asset pipeline looks like.

The Anatomy of a Web Request

- An individual accesses a website with a CSS resource pointing to my asset pipeline’s servers.

- The servers check for the asset in S3. If the resource exists, it is immediately returned in the HTTP response. If it does not exist, the servers assume that asset compilation must take place: a message is dispatched on SQS and the HTTP connection is held open in an idle state.

- When compilation work is complete, a message is received on SNS, and the resource is returned on the stored HTTP connection.

Asset Compilation

- A compilation worker receives a request for work over SQS.

- An un-compiled Sass file is retrieved from the origin server (this origin server is specified by an individual hosting their assets on our servers).

- Sass compilation is performed and the compiled file is uploaded to S3.

- A message is dispatched on SNS to indicate to the web servers that the compilation step has completed.

Hello World!

Here’s a breakdown of how this highly-scalable architecture was built in a few manic weekend hacking sessions.

1. Setting up the DNS and Load Balancer

For my cloud-based asset compiler, I purchased the domain name smartassets.io. Domain name acquired, the AWS-specific work could begin.

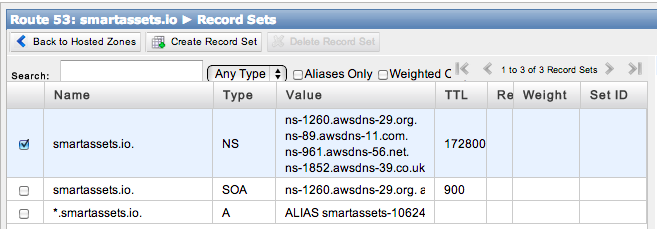

The first step was to get the domain registrar pointing to Amazon’s Route53 name servers. From the Route53 Management Console, I created a new Hosted Zone called smartassets.io. Clicking on this new entry displays a list of Amazon’s name servers. I replaced the default name servers configured at my registrar with this delegation set.

Before setting up the EC2 instance for serving web requests, I wanted to get an Elastic Load Balancer up and running. An ELB provides a mechanism for distributing traffic to a set of registered EC2 instances. It’s crucial to have this in place for when it comes time to scale your website. Adding it after the fact is more painful than setting it up out of the gate.

In the EC2 Management Console, I created a new ELB on port 80 called smartassets. Having done this, I went back to the Route53 Management Console, clicked the checkbox next to smartassets.io, and selected Go to Record Sets (Figure 1). From this listing, I created a new wildcard A record, selected the alias option, and pointed the record at the smartassets ELB.

2. Getting Our Web Server Up and Running

DNS and ELB configured, it was time to spin up an EC2 instance to host the website. You create an EC2 instance from an Amazon Machine Image (AMI). The AMI you choose is a matter of preference. Mine is the Ubuntu flavor of Linux. Canonical, the maintainer of Ubuntu, has official images to choose from at cloud-images.ubuntu.com. Handy!

Booting an AMI running the Oneiric Ocelot release of Ubuntu, I connected to it via SSH and installed the packages required for my web server. As with choosing an AMI, there’s a lot of flexibility in which web framework or platform you install: Sinatra, Flask, Express, Django, ASP.NET… the sky’s the limit. I opted to use Node.js for the smartassets.io server. Here’s what the start of my server looked like:

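    var http = require('http');

    http.createServer(function (req, res) {
      // Health check endpoint: a 200 here keeps the instance in the load balancer.
      if (req.url === '/health') {
        res.writeHead(200, {'Content-Type': 'text/plain'});
        res.end("Hello World!\n");
      } else {
        // Answer every other path so that connections are not left hanging.
        res.writeHead(404, {'Content-Type': 'text/plain'});
        res.end("Not Found\n");
      }
    }).listen(process.env.ASSET_PIPELINE_PORT, '0.0.0.0');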

I ran this script in the background using Node.js and Upstart.

The proverbial Hello World! up and running, I navigated to the Load Balancer option in the EC2 Console. Clicking on the Instances tab, I added the newly-created web server to the list.

As long as the server answers the load balancer’s health checks with an HTTP status of 200 OK, it will be kept in the load balancer’s rotation.

Implementing the Asset Pipeline

The Hello World! a resounding success, I started implementing some of the more advanced functionality.

1. Setting up SQS, SNS, and S3

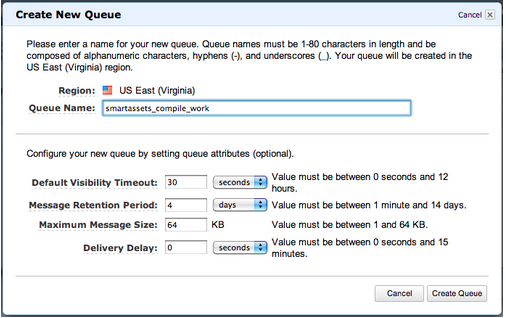

From the SQS Management Console, I created a new queue named smartasset_compile_work (Figure 2); the default settings were sufficient. When asset compilation is necessary, the web servers simply dispatch a JSON-serialized job on this queue. Stateless background workers consume from this queue using long polling and perform the heavy lifting.
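As a rough sketch of what dispatching such a job might look like: the job fields (`assetPath`, `originUrl`), the helper name `enqueueCompileJob`, and the queue URL below are assumptions made for illustration, not the article's actual code. The `sqs` argument is any object with a `sendMessage(params, callback)` method, which is the shape of the SQS client in the AWS SDK for JavaScript (v2). An in-memory fake stands in for it here so the sketch runs without credentials.

```javascript
// Hypothetical queue URL; the real one is shown in the SQS Management Console.
const QUEUE_URL = 'https://sqs.us-east-1.amazonaws.com/123456789012/smartasset_compile_work';

// Serialize a compilation job as JSON and hand it to an SQS-like client.
// `sqs` must expose sendMessage(params, callback).
function enqueueCompileJob(sqs, job, callback) {
  const params = {
    QueueUrl: QUEUE_URL,
    MessageBody: JSON.stringify(job)
  };
  sqs.sendMessage(params, callback);
}

// In-memory stand-in for the SQS client, used only to exercise the sketch.
function createFakeSqs() {
  const sent = [];
  return {
    sent: sent,
    sendMessage: function (params, callback) {
      sent.push(params);
      callback(null, { MessageId: String(sent.length) });
    }
  };
}

const fakeSqs = createFakeSqs();
enqueueCompileJob(
  fakeSqs,
  // Assumed job shape: where the result should live and where the source comes from.
  { assetPath: '/example.com/style.css', originUrl: 'http://example.com/style.scss' },
  function (err, data) {
    if (err) throw err;
    console.log('queued job', data.MessageId);
  }
);
```

Because the web server only ever sees `sendMessage`, swapping the fake for a real client should not require changes to the calling code.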

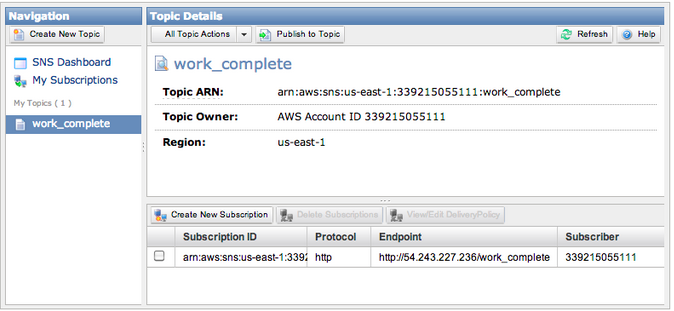

From the SNS Management Console, I created a new topic called work_complete. All servers subscribed to a topic receive notifications when a message is published to it. I added the /work_complete endpoint of our web server as a new HTTP subscriber (Figure 3). When background workers finish their compilation work, they will publish to the work_complete topic, notifying the web servers.
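An HTTP subscriber has to handle two kinds of POST bodies from SNS: a one-time subscription confirmation and the notifications themselves. The sketch below classifies the JSON envelope by its `Type` field. The function name `handleSnsBody`, the returned object shape, and the idea that workers publish a JSON string containing an `assetPath` are assumptions for illustration.

```javascript
// Classify the JSON envelope that SNS POSTs to an HTTP subscriber.
function handleSnsBody(rawBody) {
  const envelope = JSON.parse(rawBody);

  if (envelope.Type === 'SubscriptionConfirmation') {
    // The subscription only becomes active once this URL has been visited.
    return { action: 'confirm', url: envelope.SubscribeURL };
  }

  if (envelope.Type === 'Notification') {
    // Assumption: workers publish a JSON string as the message payload.
    return { action: 'notify', payload: JSON.parse(envelope.Message) };
  }

  return { action: 'ignore' };
}

// Sample envelopes, trimmed to the fields this sketch reads.
const confirmation = handleSnsBody(JSON.stringify({
  Type: 'SubscriptionConfirmation',
  SubscribeURL: 'https://sns.example.invalid/confirm?Token=abc'
}));

const notification = handleSnsBody(JSON.stringify({
  Type: 'Notification',
  Message: JSON.stringify({ assetPath: '/example.com/style.css' })
}));

console.log(confirmation.action, notification.payload.assetPath);
```

A production endpoint would likely also verify the signature that SNS attaches to each message before trusting it; that step is left out here.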

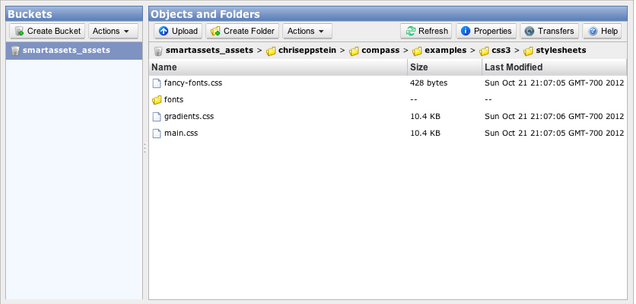

From the S3 Management Console, I created a bucket named smartassetsio_assets (Figure 4). Compiled assets will be placed in this bucket by the background workers.

2. The Asset Server

Next, having configured the AWS specifics, I could extend the original Hello World! server.

Using the path portion of the HTTP request, the server attempts to look up the resource in S3. If the resource does not exist in S3, it’s assumed that it needs to be compiled, and a request for this compilation work is dispatched on the smartasset_compile_work SQS queue. As compilation work is completed, the web server receives SNS notifications via the work_complete topic and completes the original HTTP request. See Listing 1.
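The park-and-resume flow described above can be sketched as follows. This is a simplified illustration rather than the article's listing: `createAssetServer`, the `store.get(path, callback)` contract (calling back with `null` on a miss), and the `enqueue(job)` function are hypothetical names, with in-memory fakes standing in for S3 and SQS.

```javascript
// Core request handling for the asset server, with storage and queueing injected.
// store.get(path, cb) calls back with (err, body); body is null when the asset is missing.
function createAssetServer(store, enqueue) {
  const waiting = new Map(); // asset path -> responses parked until compilation finishes

  function respond(res, status, body) {
    res.writeHead(status, { 'Content-Type': status === 200 ? 'text/css' : 'text/plain' });
    res.end(body);
  }

  return {
    handleRequest: function (path, res) {
      store.get(path, function (err, body) {
        if (err) return respond(res, 500, 'storage error\n');
        if (body !== null) return respond(res, 200, body);
        // Miss: queue one job per path, then park the connection.
        if (!waiting.has(path)) {
          waiting.set(path, []);
          enqueue({ assetPath: path });
        }
        waiting.get(path).push(res);
      });
    },

    // Called when a work_complete notification arrives for `path`.
    handleWorkComplete: function (path) {
      const parked = waiting.get(path) || [];
      waiting.delete(path);
      if (parked.length === 0) return;
      store.get(path, function (err, body) {
        parked.forEach(function (res) {
          if (err || body === null) respond(res, 502, 'compilation failed\n');
          else respond(res, 200, body);
        });
      });
    }
  };
}

// Fakes used to exercise the sketch without AWS.
const assets = new Map();
const fakeStore = {
  get: function (path, cb) { cb(null, assets.has(path) ? assets.get(path) : null); }
};
const jobs = [];
const server = createAssetServer(fakeStore, function (job) { jobs.push(job); });

const res = {
  status: null,
  body: null,
  writeHead: function (status) { this.status = status; },
  end: function (body) { this.body = body; }
};

server.handleRequest('/style.css', res);       // miss: job queued, response parked
assets.set('/style.css', 'a{color:red}');      // a worker uploads the compiled asset
server.handleWorkComplete('/style.css');       // notification arrives, response flushed
console.log(res.status, res.body);
```

A real server would probably also put a timeout on parked connections, so that a lost notification does not leave a browser waiting indefinitely.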

3. The Background Worker

The background worker runs independently of the web server and performs the actual compilation of assets (Listing 2):

- It reads messages from the smartasset_compile_work SQS queue.

- It downloads the Sass files that require compilation.

- It runs a Sass compiler on the files.

- It uploads the compiled files to the smartassetsio_assets S3 bucket.

- It indicates to the web server that work is complete by publishing a message to the work_complete SNS topic.
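The worker's steps can be sketched as a single function that processes one job. Every collaborator is injected, and all of the names here (`processOneJob`, `receive`, `fetchSource`, `compile`, `upload`, `publish`, `remove`) are hypothetical. The `compile` fake is only a stand-in and does not perform real Sass compilation. The message shape (`Body`, `ReceiptHandle`) follows what SQS returns for a received message.

```javascript
// Process at most one compilation job. Calls done(err, processed).
function processOneJob(deps, done) {
  deps.receive(function (err, message) {
    if (err || !message) return done(err, false);
    const job = JSON.parse(message.Body);

    deps.fetchSource(job.originUrl, function (err, sass) {
      if (err) return done(err, false);

      let css;
      try {
        css = deps.compile(sass);
      } catch (e) {
        return done(e, false);
      }

      deps.upload(job.assetPath, css, function (err) {
        if (err) return done(err, false);

        deps.publish(JSON.stringify({ assetPath: job.assetPath }), function (err) {
          if (err) return done(err, false);
          // Delete the message last, so that a job that failed midway
          // reappears on the queue and can be retried.
          deps.remove(message, function (err) { done(err, !err); });
        });
      });
    });
  });
}

// Fakes that record the order of operations.
const log = [];
const uploaded = {};
const fakeDeps = {
  receive: function (cb) {
    cb(null, {
      ReceiptHandle: 'rh-1',
      Body: JSON.stringify({
        assetPath: '/example.com/style.css',
        originUrl: 'http://example.com/style.scss'
      })
    });
  },
  fetchSource: function (url, cb) { log.push('fetch'); cb(null, '$c: red; a { color: $c; }'); },
  compile: function (sass) { log.push('compile'); return '/* compiled */ ' + sass; },
  upload: function (path, css, cb) { log.push('upload'); uploaded[path] = css; cb(null); },
  publish: function (message, cb) { log.push('publish'); cb(null); },
  remove: function (message, cb) { log.push('remove'); cb(null); }
};

let processed = false;
processOneJob(fakeDeps, function (err, ok) {
  if (err) throw err;
  processed = ok;
});
console.log(log.join(' > ')); // fetch > compile > upload > publish > remove
```

A long-running worker would call this in a loop, with `receive` backed by a long-polling read from the queue.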

In total it took about two weekends of hacking to cobble together this scalable architecture on AWS. In my opinion, not bad.

Planning for the Future

Let me review some of the valid arguments in favor of migrating away from cloud-based infrastructure as you scale.

Cost

Dollar for dollar, you get more computing power when you buy your own servers rather than relying on cloud-based infrastructure.

The benefits that come with cloud-based infrastructure can offset the cost savings associated with purchasing your own hardware: you can elastically provision hardware to match variable needs; you don’t need to deal with the hassle of maintaining physical hardware; metered APIs can abstract away what were once painful scaling problems.

Add to this that Moore’s Law marches forward; there have already been several price decreases during my time on AWS, and I expect this trend to continue.

Control

Cloud-based infrastructure takes a major part of your architecture out of your control. AWS has notoriously suffered from several outages on the east coast in the past year. Personally, I’ve spent a few overnighters dealing with these.

AWS’s Service Level Agreement guarantees 99.95 percent uptime. A big part of the alarmism surrounding AWS’s outages relates to the fact that such large swaths of the Internet are now hosted on it (reportedly, one-third of Internet users visit an AWS-hosted site daily). AWS houses data centers in multiple regions; none of the recent outages have spanned multiple regions. As with hosting your infrastructure in any traditional data center, redundancy is important.
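To put the 99.95 percent figure in perspective, a quick calculation shows how much downtime such a guarantee still permits. The function name and the choice of a 365-day year and a 30-day month are assumptions for illustration; the measurement period that actually applies is defined by the SLA itself.

```javascript
// Hours of downtime permitted by an uptime guarantee over a given period.
function allowedDowntimeHours(uptimePercent, periodHours) {
  return ((100 - uptimePercent) / 100) * periodHours;
}

const perYearHours = allowedDowntimeHours(99.95, 365 * 24);
const perMonthMinutes = allowedDowntimeHours(99.95, 30 * 24) * 60;

console.log(perYearHours.toFixed(2) + ' hours per year');       // 4.38 hours per year
console.log(perMonthMinutes.toFixed(1) + ' minutes per month'); // 21.6 minutes per month
```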

Vendor Lock-In

AWS’s higher-level APIs are proprietary; this can make migrations to other vendors difficult.

Building clean abstractions in front of AWS’s various proprietary services (SQS, SNS, etc.) can go a long way towards mitigating the dangers associated with vendor lock-in.
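One way such an abstraction might look is a small queue contract that application code depends on, with the SQS-specific details confined to a single adapter. The contract (`send(job, cb)` and `receive(cb)`) and the names `createInMemoryQueue`, `createSqsQueue`, and `requestCompilation` are hypothetical. The adapter assumes an SQS client shaped like the one in the AWS SDK for JavaScript (v2), with `sendMessage` and `receiveMessage` methods.

```javascript
// Vendor-neutral implementation, handy for tests or for a different backend.
function createInMemoryQueue() {
  const jobs = [];
  return {
    send: function (job, cb) { jobs.push(JSON.stringify(job)); cb(null); },
    receive: function (cb) { cb(null, jobs.length ? JSON.parse(jobs.shift()) : null); }
  };
}

// SQS-backed implementation of the same contract.
// Deleting the message after processing is omitted for brevity.
function createSqsQueue(sqs, queueUrl) {
  return {
    send: function (job, cb) {
      sqs.sendMessage({ QueueUrl: queueUrl, MessageBody: JSON.stringify(job) }, cb);
    },
    receive: function (cb) {
      // WaitTimeSeconds enables long polling instead of returning immediately.
      const params = { QueueUrl: queueUrl, MaxNumberOfMessages: 1, WaitTimeSeconds: 20 };
      sqs.receiveMessage(params, function (err, data) {
        if (err) return cb(err);
        const messages = data.Messages || [];
        cb(null, messages.length ? JSON.parse(messages[0].Body) : null);
      });
    }
  };
}

// Application code only knows about the contract, not about SQS.
function requestCompilation(queue, assetPath, cb) {
  queue.send({ assetPath: assetPath }, cb);
}

const queue = createInMemoryQueue();
requestCompilation(queue, '/example.com/style.css', function (err) {
  if (err) throw err;
});

let received = null;
queue.receive(function (err, job) {
  if (err) throw err;
  received = job;
});
console.log(received.assetPath); // /example.com/style.css
```

Moving to another vendor would then mean writing one more adapter rather than touching every call site.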

The Future of Smartassets.io

AWS’s hosted infrastructure made it easy to create a decoupled, scalable architecture. Let’s assume that smartassets.io suddenly had to scale to several million users.

The use of the ELB allows us to horizontally scale the web servers. By using SQS and SNS to decouple the two major parts of the system (asset serving and asset compilation), these components can be scaled independently. Using S3 for storage allows us to painlessly store billions of assets.

AWS allows you to scale from a rough prototype, like smartassets.io, to a website with millions of users. It allows you to do so with far fewer physical and conceptual hurdles than ever before, enabling smaller teams to tackle difficult problems, at much higher scales; success stories like Instagram do a wonderful job of demonstrating this. This belief makes me a rabid advocate of cloud-based infrastructure like AWS.