Resource management is one of the most important concepts when working on applications built using .NET Core or .NET Framework. In this article, I'll cover the core concepts of resource management in .NET Core, along with their features and benefits, and illustrate them with code examples.

To work with the code examples discussed in this article, you need the following installed on your system:

- Visual Studio 2022

- .NET 7.0

- .NET 7.0 Runtime

If you don't already have Visual Studio 2022 installed on your computer, you can download it from here: https://visualstudio.microsoft.com/downloads/.

In this article, you'll learn how resource management works in .NET Core and the best practices and guidelines for making optimal use of resources in a .NET Core application. I'll examine the following points:

- An overview of garbage collection and how it works

- Understanding Server GC and Workstation GC

- The components of .NET Core Framework

- Working with disposable objects

- The Dispose/Finalize pattern

- How to set up a console application to benchmark performance

- Understanding the large object heap (LOH)

- Best practices of resource management in .NET Core

From .NET Framework to .NET Core to .NET 7

.NET Framework has been around for over two decades. Originally built around a subset of the .NET Framework APIs, .NET Core is designed to be modular, lightweight, cross-platform, high-performance, and scalable. A .NET Core application can run on Windows, Linux, and macOS, whereas applications built using .NET Framework run only on Windows. You can take advantage of the .NET Upgrade Assistant to migrate your .NET Framework applications to .NET 7.

What's Garbage Collection?

.NET Framework and .NET Core include garbage collection as a built-in feature for cleaning up memory occupied by managed objects. You can create both managed and unmanaged objects when working in .NET Framework or .NET Core.

Managed objects are created in the managed heap and removed from it during a garbage collection cycle, but you need to write code to release unmanaged objects explicitly. Garbage collection is the strategy the CLR uses to clear the memory occupied by managed objects that have become “garbage.”

In most cases, you don't need to clean up managed objects in your code; the runtime does this for you. Setting a reference to null when you no longer need the object removes that reference, which can help the runtime reclaim the memory sooner. In practice, this matters mainly for fields of long-lived objects, because in optimized code the JIT already treats a local variable as dead after its last use.

An object becomes “garbage,” that is, eligible for collection, when one or more of the following is true:

- No references to the object exist.

- The instance is not reachable from any root.

- The object is no longer inside the scope in which it was initially created, and no other reference to it survives.

Becoming eligible doesn't mean an object is freed immediately: the garbage collector reclaims its memory during a subsequent garbage collection cycle. Note that the garbage collector reclaims memory; it doesn't call your Dispose method. A garbage collection cycle runs when any of the following occurs:

- The memory used by objects allocated on the managed heap exceeds an acceptable threshold, which the runtime adjusts continuously as the process runs.

- The system runs low on physical memory.

- The application explicitly calls the GC.Collect() method.

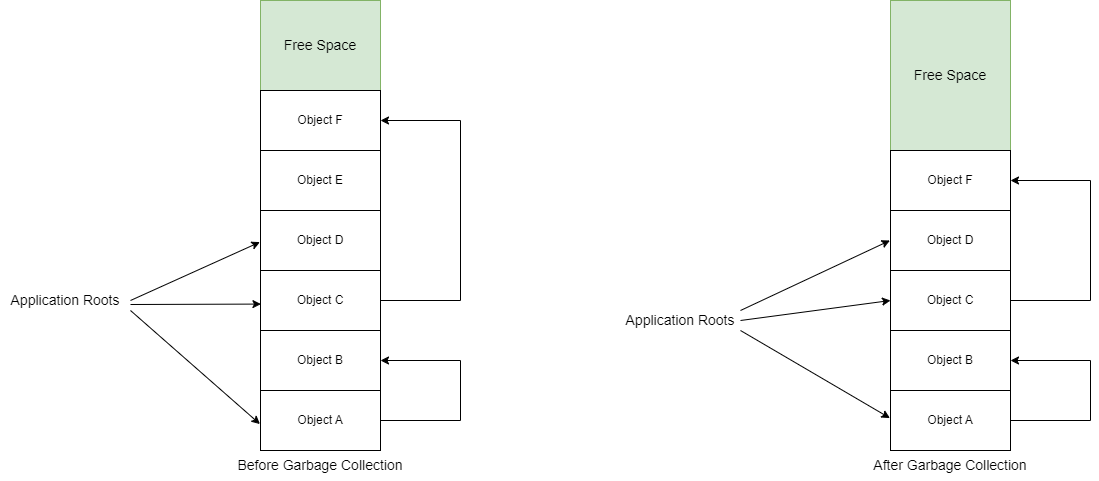

How Does the Garbage Collector Work?

The garbage collector works in these phases:

- Mark: In this phase, the garbage collector identifies the objects that are reachable and builds a list of all reachable or live objects.

- Relocate: At this point, the garbage collector updates the references to the instances that will be compacted.

- Compact: In this phase, the garbage collector recovers or reclaims the memory occupied by the unreachable objects and compacts the memory. This ensures that the available or free memory is contiguous.

Note that, by default, the LOH (large object heap) isn't compacted, because copying large objects would incur a performance cost. To determine reachable or live objects, the garbage collector uses the following information:

- Stack roots

- Garbage collection handles

- Static data

Objects that can't be reached from any of these roots are candidates for garbage collection, and the garbage collector reclaims the memory they occupy in a subsequent collection cycle.

What Are Server GC and Workstation GC?

.NET Core supports two garbage collection modes: workstation GC and server GC. Because the best garbage collection strategy depends on the application and its workload, the runtime offers different modes. Workstation GC, the default for most .NET Core applications, is tailored for client applications, including Windows Forms, WPF, and console applications.

Server GC is designed for performance-critical server applications running on multi-core machines: it provides a separate heap and a dedicated garbage collection thread for each logical CPU. Because Server GC isn't the default for most application types, you typically need to enable it explicitly.

You can set the GC mode in the project file or in the runtimeconfig.json file. The following code snippet shows how you can turn on Server GC in the project (.csproj) file of your ASP.NET Core application.

<PropertyGroup>
  <ServerGarbageCollection>true</ServerGarbageCollection>
</PropertyGroup>
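To confirm which mode your application actually runs with, you can query the GCSettings class in the System.Runtime namespace at run time. The following is a minimal sketch; GcModeReport and its Describe method are hypothetical names chosen for this illustration.

```csharp
using System;
using System.Runtime;

// Hypothetical helper that reports the GC configuration the runtime is using.
public static class GcModeReport
{
    public static string Describe()
    {
        // IsServerGC is true only when Server GC has been enabled, for example
        // through <ServerGarbageCollection> in the project file.
        string mode = GCSettings.IsServerGC ? "Server GC" : "Workstation GC";

        // LatencyMode indicates how intrusive collections are allowed to be.
        return $"{mode}, latency mode: {GCSettings.LatencyMode}";
    }

    public static void Main()
    {
        Console.WriteLine(GcModeReport.Describe());
        Console.WriteLine($"Logical processors: {Environment.ProcessorCount}");
    }
}
```

On a multi-core machine, toggling the ServerGarbageCollection property and rebuilding should change the reported mode.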

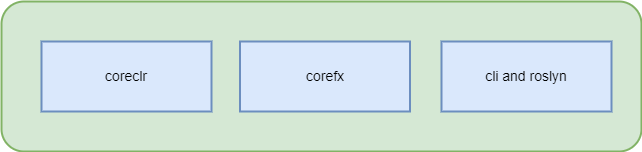

Components of .NET Core Framework

The key components of .NET Core include the following: CoreCLR, CoreFX, the CLI, and Roslyn.

CoreCLR

This is the .NET Core runtime environment, which provides services such as garbage collection and assembly loading. It also includes the Just-In-Time (JIT) compiler, which compiles intermediate language (IL) code into machine code at run time.

CoreFX

This is analogous to the Base Class Library (BCL) of .NET Framework and represents the foundational class libraries of .NET Core, which provide support for services such as serialization, networking, and I/O.

CLI and Roslyn

The Command Line Interface (CLI) in .NET Core is a cross-platform command-line tool for developing, building, executing, and publishing applications. Roslyn represents an open-source implementation of C# and Visual Basic compilers.

Figure 2 shows the key components of .NET Core Framework.

Managing Resources in .NET Core

In .NET Core, the heap is of two types: the managed heap and the unmanaged heap. The CLR releases the memory occupied by managed objects once they're no longer in use, but it can't free unmanaged resources on its own. Managed objects remain on the managed heap until your code no longer references them. Resource management in .NET Core entails creating resources and releasing those that are no longer needed by the application. Typical examples of resources are database connection objects, file handles, sockets, objects of framework classes, and objects of custom classes.

Resources in .NET Core can be of two types: managed and unmanaged. A managed resource, such as a string object or any instance of a class, is created in the managed heap; an unmanaged resource, such as a file handle or the native connection underlying a database connection object, is not. The runtime is adept at releasing the memory occupied by managed objects. However, because unmanaged resources are created outside the managed environment of the CLR, the runtime can't release them implicitly.

To be more precise, although the garbage collector is capable of tracking and monitoring the lifespan of an object that contains an unmanaged resource, it's unable to release the memory used by this unmanaged resource. You should release such objects explicitly in your code.

What Are Resources in .NET Core?

In the context of the CLR, the term “resource” refers to objects that encapsulate access to the file system, network connections, database connections, etc. Resources in the context of the CLR can be of the following types:

- Memory resources that include both managed memory and unmanaged memory

- External resources such as file handles, socket connections, database connections, etc.

Allocating Memory

When your application creates a new object, the .NET Core runtime allocates memory for it from a contiguous region of address space reserved for the managed heap. The runtime maintains a pointer to the location in the managed heap where the next object should be allocated. Initially, this pointer holds the base address of the managed heap, so the first reference type instance your application creates is placed at that address.

When the application creates another object, the runtime allocates it immediately after the previous one and advances the pointer. This process repeats for all subsequent objects as long as there's space in the managed heap. Because an allocation only requires incrementing a pointer, allocating memory in the managed heap is faster than allocating it in the unmanaged heap.

Newly allocated objects are therefore kept in a contiguous memory area inside the managed heap, which allows your application to access them more efficiently.

De-Allocating or Releasing Memory

The garbage collector's optimizing engine determines the best time to run a garbage collection cycle based on the allocations being made. During a garbage collection cycle, the memory occupied by objects that the application no longer needs is released. To determine which objects aren't needed any more, the garbage collector examines the application's roots, which include local variables, static fields, GC handles, CPU registers, and the finalization queue.

Each root either references an object or is set to null if no such reference exists. The garbage collector makes use of these objects to create a graph of instances that it can access from the roots. It should be noted that the instances that aren't present in this graph are the ones that cannot be accessed from the roots. These instances are therefore considered “garbage objects.” When the next garbage collection cycle is executed, these objects will be the ones to be collected.
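The reachability rule can be observed with a small experiment. The sketch below is illustrative rather than a recommended practice: it calls GC.Collect() only so that the effect becomes visible, and it uses WeakReference objects purely as probes, since a weak reference doesn't root its target. ReachabilityDemo and CreateUnrootedObject are hypothetical names.

```csharp
using System;
using System.Runtime.CompilerServices;

public static class ReachabilityDemo
{
    // NoInlining keeps the allocation in its own stack frame, so no local
    // variable in the caller roots the array after this method returns.
    [MethodImpl(MethodImplOptions.NoInlining)]
    private static WeakReference CreateUnrootedObject()
    {
        var payload = new byte[1024];
        return new WeakReference(payload); // a weak reference is not a root
    }

    public static (bool RootedAlive, bool UnrootedAlive) Run()
    {
        var rooted = new byte[1024];                // referenced by a local: reachable
        var rootedProbe = new WeakReference(rooted);
        var unrootedProbe = CreateUnrootedObject(); // nothing roots this array

        // Forced here only for demonstration purposes.
        GC.Collect();
        GC.WaitForPendingFinalizers();
        GC.Collect();

        bool rootedAlive = rootedProbe.IsAlive;
        GC.KeepAlive(rooted); // keeps 'rooted' reachable up to this point
        return (rootedAlive, unrootedProbe.IsAlive);
    }

    public static void Main()
    {
        var (rootedAlive, unrootedAlive) = Run();
        Console.WriteLine($"Rooted object alive: {rootedAlive}");
        Console.WriteLine($"Unrooted object alive: {unrootedAlive}");
    }
}
```

On a typical run, the rooted array is reported alive and the unrooted one is not, because only the former is reachable from a root when the collection happens.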

Strong and Weak References

Strong and weak references in .NET Core (and in .NET in general) are mechanisms for managing object lifetimes, and they play a key role in garbage collection and memory optimization. When you assign an instance of a class to a variable or field in .NET Core, you create a strong reference to that object. A strong reference keeps an object alive as long as the reference itself is reachable.

The garbage collector cannot free the memory occupied by an object that is still reachable through a strong reference. The following code snippet illustrates how you can create a strong reference to an object:

MyClass strongReference = new MyClass(); // Strong reference

A weak reference is one that refers to an object in the memory while at the same time allowing the object to be garbage collected during a garbage collection cycle. Typical use cases of weak references are caching scenarios and objects that are long-lived. The following code snippet shows how you can create a weak reference to an object:

MyClass strongReference = new MyClass(); // Create a strong reference
WeakReference weakReference = new WeakReference(strongReference); // Create a weak reference

Once the weak reference is created, you can use the IsAlive property of the weak reference instance to check whether the object is still alive. If it is, you can retrieve the object using the Target property of the weak reference instance. (IsAlive and Target belong to the non-generic WeakReference class; the generic WeakReference<T> class exposes a TryGetTarget method instead.)

if (weakReference.IsAlive) // Check if the object is still alive
{
    // Read Target once and test it, because the object can still be
    // collected between the IsAlive check and this line
    if (weakReference.Target is MyClass targetObject)
    {
        targetObject.CallSomeMethod();
    }
}

Note the following points about weak references:

- Weak references do not increase an object's lifetime.

- Weak references only enable the garbage collector to free the memory occupied by an object that doesn't have any strong references.

- When a weak reference is created, the runtime stores an IntPtr to a GCHandle.

- The runtime uses this GCHandle to manage a table that consists of a list of weak references.

- The value of the IntPtr will be zero if the object has already been garbage collected.

- When the object has been garbage collected, the corresponding entry of the weak reference to the same object in the weak reference table is deleted.

- If an object is alive, you can access the actual object pointed to by the weak reference using its Target property.

- Avoid creating weak references to small objects, because the weak reference itself can be as large as or larger than the object it points to.

Weak references can be either short or long. The Target property of a short weak reference becomes null when the object it points to is garbage collected. A long weak reference, on the other hand, is retained after the object's finalizer has run, so it can still track an object that is resurrected during finalization. To create a long weak reference, pass true as the trackResurrection argument of the WeakReference constructor.
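Because the generic WeakReference<T> class has no IsAlive or Target members, you read its target with TryGetTarget, which also closes the gap between checking IsAlive and reading Target. The following sketch applies this to a caching scenario. WeakCache is a hypothetical class, not a framework type, and it isn't thread-safe; the last lines of Main also show how a long weak reference is requested through the trackResurrection argument.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical cache that holds its values through weak references, so cached
// values can still be reclaimed once the application stops using them.
public sealed class WeakCache<TKey, TValue>
    where TKey : notnull
    where TValue : class
{
    private readonly Dictionary<TKey, WeakReference<TValue>> _entries = new();

    public TValue GetOrAdd(TKey key, Func<TKey, TValue> factory)
    {
        // TryGetTarget checks and reads the target in a single call.
        if (_entries.TryGetValue(key, out var weak) && weak.TryGetTarget(out var cached))
        {
            return cached;
        }

        TValue created = factory(key);
        _entries[key] = new WeakReference<TValue>(created); // short weak reference
        return created;
    }
}

public static class WeakCacheDemo
{
    public static void Main()
    {
        var cache = new WeakCache<int, string>();
        string first = cache.GetOrAdd(42, k => $"value-{k}");
        string second = cache.GetOrAdd(42, k => $"other-{k}");

        // While 'first' is strongly referenced, the cache returns the same instance.
        Console.WriteLine(ReferenceEquals(first, second));

        // Passing true for trackResurrection creates a long weak reference.
        var longWeak = new WeakReference(first, trackResurrection: true);
        Console.WriteLine(longWeak.TrackResurrection);
    }
}
```

A production-quality version would also need to remove entries whose targets have been collected, because the dictionary itself keeps the WeakReference<TValue> objects alive.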

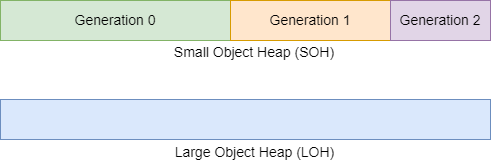

Generations

The garbage collector is the part of the CLR that acts as an automatic memory manager in .NET Core. The managed heap is logically divided into three generations: generation 0, generation 1, and generation 2. A generation represents the relative lifetime of objects in memory, and an object's generation number is the integer that identifies the generation it belongs to. Objects in generation 0 are short-lived, that is, they remain in memory for a short time, whereas generation 2 holds long-lived objects. Figure 3 shows how the generations are represented.

It's important to note that objects outliving a GC cycle in generation 0 are promoted to generation 1. Likewise, objects that survive a generation 1 collection, i.e., those not collected in a generation 1 collection, are promoted to the next higher generation, i.e., generation 2. Compared to the higher generations, the garbage collector works more often in lower generations. This explains why short-lived objects are cleaned much more frequently than long-lived ones.
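You can watch promotion happen with GC.GetGeneration, which returns the generation an object currently belongs to. The sketch below (GenerationDemo is a hypothetical name) forces collections purely for demonstration. The exact numbers can vary with the GC configuration, but the generation of a surviving object typically rises from 0 toward GC.MaxGeneration.

```csharp
using System;

public static class GenerationDemo
{
    public static int[] TrackPromotions()
    {
        var generations = new int[3]; // allocated first so it can't trigger a GC later
        var survivor = new object();

        generations[0] = GC.GetGeneration(survivor); // freshly allocated object
        GC.Collect();                                 // survivor outlives one collection
        generations[1] = GC.GetGeneration(survivor);
        GC.Collect();                                 // ...and a second one
        generations[2] = GC.GetGeneration(survivor);

        GC.KeepAlive(survivor); // keep the object reachable for the whole method
        return generations;
    }

    public static void Main()
    {
        Console.WriteLine($"Highest generation: {GC.MaxGeneration}");
        Console.WriteLine($"Generations observed: {string.Join(", ", TrackPromotions())}");

        // Number of collections per generation since the process started.
        for (int gen = 0; gen <= GC.MaxGeneration; gen++)
        {
            Console.WriteLine($"Gen {gen} collections: {GC.CollectionCount(gen)}");
        }
    }
}
```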

Working with Disposable Objects

In .NET Core, disposable objects are instances of classes that implement the IDisposable interface and therefore expose a Dispose method. There are several ways to work with disposable objects in .NET Core.

Disposing Using the IDisposable Interface

The System.IDisposable interface contains the Dispose method, as shown in the code snippet below:

public interface IDisposable
{
    void Dispose();
}

If your class contains unmanaged resources, implement the IDisposable interface and write your own implementation of the Dispose method to release the unmanaged resources used by your class. The following code snippet illustrates how you can implement the Dispose method:

public class DisposableExample : IDisposable
{
    public DisposableExample()
    {
        Console.WriteLine("An instance of {0} was created.", GetType().Name);
    }

    public void Dispose()
    {
        Console.WriteLine("The instance of {0} was disposed.", GetType().Name);
    }
}

You can now invoke the Dispose method, as shown below:

DisposableExample disposableExample = new DisposableExample();

disposableExample.Dispose();

Disposing Using the “using” Keyword

The using statement in C# provides a convenient way to release unmanaged resources: it calls the Dispose method on the object automatically when control leaves the using block, either because the statements in the block have completed or because an exception has been thrown.

Basically, the using keyword is used to define a scope beyond which an object is disposed. You can use the using keyword on any class that implements the IDisposable interface. The following code snippet shows how the using keyword is used:

using (DisposableExample disposableExample = new DisposableExample())
{
    // Write your code here to call your methods
    // on the disposableExample instance
}
// The disposableExample instance is automatically
// disposed of when the control reaches here
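The using statement is compiled into a try/finally block, which is why Dispose runs even when an exception escapes the block. The sketch below shows that expansion, the shorter using declaration available since C# 8, and the exception case. TrackedResource and UsingDemo are hypothetical types used only to observe when Dispose is called.

```csharp
using System;

// Hypothetical disposable type that records whether Dispose was called.
public sealed class TrackedResource : IDisposable
{
    public bool IsDisposed { get; private set; }

    public void Dispose() => IsDisposed = true;
}

public static class UsingDemo
{
    // Roughly what the compiler generates for a using statement.
    public static TrackedResource ExpandedUsing()
    {
        var resource = new TrackedResource();
        try
        {
            // Work with the resource here.
        }
        finally
        {
            if (resource != null) resource.Dispose(); // runs even if the try block throws
        }
        return resource;
    }

    // C# 8 using declaration: disposed when the enclosing scope (the method) ends.
    public static TrackedResource UsingDeclaration()
    {
        using var resource = new TrackedResource();
        return resource; // Dispose runs after the return value has been evaluated
    }

    public static bool DisposedAfterException()
    {
        var resource = new TrackedResource();
        try
        {
            using (resource)
            {
                throw new InvalidOperationException("Simulated failure");
            }
        }
        catch (InvalidOperationException)
        {
            // By now, the using block has already disposed the resource.
        }
        return resource.IsDisposed;
    }

    public static void Main()
    {
        Console.WriteLine(ExpandedUsing().IsDisposed);
        Console.WriteLine(UsingDeclaration().IsDisposed);
        Console.WriteLine(DisposedAfterException());
    }
}
```

Returning an already-disposed object is done here only to inspect its state; real code shouldn't use an object after disposing of it.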

Listing 1 illustrates a simple file manager that can be used to write a line of text in a file. It shows how the Dispose method can be used to release unmanaged resources in a class. You can now use the following piece of code to write a line of text asynchronously in a file using the CustomFileManager class. Note that because of the using statement, the Dispose() method is called implicitly when the control leaves the scope in which the using statement has been used.

Listing 1: The CustomFileManager Class

using System;
using System.IO;
using System.Threading.Tasks;

public class CustomFileManager : IDisposable
{
    private FileStream fileStream = new FileStream(@"D:\Test.txt",
        FileMode.Append, FileAccess.Write, FileShare.None, 4096, true);

    public async Task FileWriteAsync(string data)
    {
        // leaveOpen: true keeps fileStream open when the StreamWriter is disposed,
        // so later calls still work and the FileStream is released only by Dispose()
        using (StreamWriter streamWriter = new StreamWriter(fileStream, leaveOpen: true))
        {
            await streamWriter.WriteLineAsync(data);
        }
    }

    void IDisposable.Dispose()
    {
        Console.WriteLine("Inside IDisposable.Dispose()");
        fileStream?.Dispose();
    }
}

using (var customFileManager = new CustomFileManager())
{
    await customFileManager.FileWriteAsync("This is a text message");
}

When you use the using statement, the Dispose method is called automatically, even if an exception has occurred.
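When a type implements IAsyncDisposable, as FileStream and StreamWriter do in .NET Core 3.0 and later, you can use await using so that flushing and closing happen asynchronously. Here's a minimal sketch along the lines of Listing 1. AsyncDisposeDemo is a hypothetical name, and the sketch writes to a temporary file instead of D:\Test.txt so that it can run on any operating system.

```csharp
using System;
using System.IO;
using System.Threading.Tasks;

public static class AsyncDisposeDemo
{
    public static async Task<string> WriteAndReadAsync(string path, string line)
    {
        // StreamWriter implements IAsyncDisposable, so 'await using' flushes
        // and closes the file asynchronously when the block ends.
        await using (var writer = new StreamWriter(path, append: true))
        {
            await writer.WriteLineAsync(line);
        }

        // The file is closed at this point, so it can be read back safely.
        return await File.ReadAllTextAsync(path);
    }

    public static async Task Main()
    {
        string path = Path.Combine(Path.GetTempPath(), $"resource-demo-{Guid.NewGuid():N}.txt");
        try
        {
            Console.Write(await WriteAndReadAsync(path, "This is a text message"));
        }
        finally
        {
            File.Delete(path);
        }
    }
}
```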

Disposing at the End of the Request

When working in a web application built using ASP.NET Core, you might often want to dispose of resources at the end of a request. You can do this using the HttpContext.Response.RegisterForDispose method by passing the instance you'd like to be disposed to this method as a parameter, as shown in the code snippet given below:

public class HomeController : Controller
{
    private readonly DisposableExample _disposableExample;

    public HomeController()
    {
        _disposableExample = new DisposableExample();
    }

    public IActionResult Index()
    {
        // The instance is disposed when the response for this request completes
        HttpContext.Response.RegisterForDispose(_disposableExample);
        return View();
    }
}

Disposing Objects Using the Built-In DI Container

ASP.NET Core provides excellent support for dependency injection through its built-in DI container, where you register each service with one of three lifetimes: Singleton, Transient, or Scoped. If a service created by the container implements the IDisposable interface, the container disposes of it at the appropriate time. Transient and Scoped instances resolved within a request are disposed at the end of the request (that is, at the end of the scope), whereas Singleton instances are disposed when the application shuts down.

Consider the following classes that represent Transient, Scoped, and Singleton services.

public class TransientService: DisposableExample { }

public class ScopedService : DisposableExample { }

public class SingletonService: DisposableExample { }

You can register these services using the following code snippet:

builder.Services.AddTransient<TransientService>();

builder.Services.AddScoped<ScopedService>();

builder.Services.AddSingleton<SingletonService>();

All of these services are disposed appropriately by the DI container.
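To see when the container disposes each lifetime, you can build a ServiceProvider by hand in a console application. This sketch assumes the Microsoft.Extensions.DependencyInjection NuGet package is installed (ASP.NET Core projects already include it), and it uses a hypothetical LoggedService base class in place of DisposableExample so that the disposal order can be recorded.

```csharp
using System;
using System.Collections.Generic;
using Microsoft.Extensions.DependencyInjection;

// Hypothetical base class that records which services have been disposed.
public abstract class LoggedService : IDisposable
{
    public static List<string> DisposalLog { get; } = new();

    public void Dispose() => DisposalLog.Add(GetType().Name);
}

public sealed class TransientService : LoggedService { }
public sealed class ScopedService : LoggedService { }
public sealed class SingletonService : LoggedService { }

public static class DiDisposalDemo
{
    public static List<string> Run()
    {
        LoggedService.DisposalLog.Clear();

        var services = new ServiceCollection();
        services.AddTransient<TransientService>();
        services.AddScoped<ScopedService>();
        services.AddSingleton<SingletonService>();

        using (ServiceProvider provider = services.BuildServiceProvider())
        {
            using (IServiceScope scope = provider.CreateScope())
            {
                scope.ServiceProvider.GetRequiredService<TransientService>();
                scope.ServiceProvider.GetRequiredService<ScopedService>();
                scope.ServiceProvider.GetRequiredService<SingletonService>();
            } // the scope disposes the transient and scoped instances it created

            LoggedService.DisposalLog.Add("-- scope ended --");
        } // disposing the root provider disposes the singleton

        return LoggedService.DisposalLog;
    }

    public static void Main() => Console.WriteLine(string.Join(Environment.NewLine, Run()));
}
```

The transient and scoped instances appear in the log before the marker, and the singleton appears after it, because singletons belong to the root provider rather than to the scope.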

Disposing Objects When the Application Shuts Down

You can also dispose of objects when the application shuts down by hooking into application lifetime events. ASP.NET Core's IApplicationLifetime interface lets you run code when the application starts up or shuts down. Note that since ASP.NET Core 3.0, IApplicationLifetime is marked obsolete in favor of IHostApplicationLifetime (in the Microsoft.Extensions.Hosting namespace), which exposes the same members. The following code snippet illustrates the IApplicationLifetime interface:

public interface IApplicationLifetime
{
    CancellationToken ApplicationStarted { get; }
    CancellationToken ApplicationStopping { get; }
    CancellationToken ApplicationStopped { get; }

    void StopApplication();
}
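Because ApplicationStopping is a CancellationToken, disposing a resource at shutdown comes down to registering a callback on that token. The sketch below is a stand-alone approximation: a CancellationTokenSource plays the role of the host so that the code runs without ASP.NET Core, and SharedConnection is a hypothetical resource. In an ASP.NET Core 6 or later application, you'd obtain the real token from app.Lifetime.ApplicationStopping instead.

```csharp
using System;
using System.Threading;

// Hypothetical resource that should live until the application shuts down.
public sealed class SharedConnection : IDisposable
{
    public bool IsDisposed { get; private set; }

    public void Dispose() => IsDisposed = true;
}

public static class ShutdownDemo
{
    public static bool Run()
    {
        // Stand-in for the host: in a real app, the token comes from
        // IHostApplicationLifetime.ApplicationStopping.
        using var stoppingSource = new CancellationTokenSource();
        CancellationToken applicationStopping = stoppingSource.Token;

        var connection = new SharedConnection();
        using CancellationTokenRegistration registration =
            applicationStopping.Register(() => connection.Dispose());

        stoppingSource.Cancel(); // simulates the host signaling shutdown; runs the callback
        return connection.IsDisposed;
    }

    public static void Main() => Console.WriteLine($"Disposed on shutdown: {Run()}");
}
```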

The Dispose/Finalize Pattern

Inside the public Dispose() method, after the cleanup logic has run, call the GC.SuppressFinalize() method so that the runtime doesn't also call the object's finalizer (its Finalize method). Call GC.SuppressFinalize() only after the cleanup in Dispose() has executed successfully. Listing 2 demonstrates how the Dispose/Finalize pattern can be implemented.

Listing 2: Demonstrating the Dispose/Finalize Pattern

public class DisposableExample : IDisposable
{
    private bool isDisposed = false;

    public DisposableExample()
    {
        Console.WriteLine("An instance of {0} was created.", GetType().Name);
    }

    public void Dispose()
    {
        Dispose(true);
        GC.SuppressFinalize(this);
    }

    protected virtual void Dispose(bool disposing)
    {
        if (!isDisposed)
        {
            if (disposing)
            {
                // Write your code here to dispose managed resources
            }

            // Write your code here to dispose unmanaged resources

            isDisposed = true;
        }
    }

    ~DisposableExample()
    {
        Dispose(false);
    }
}
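Listing 2 leaves the cleanup code as placeholders. The following sketch fills them in for a hypothetical UnmanagedBuffer class that owns both a managed disposable (a MemoryStream) and raw unmanaged memory obtained from Marshal.AllocHGlobal. It also throws ObjectDisposedException when the object is used after disposal and tolerates repeated Dispose calls.

```csharp
using System;
using System.IO;
using System.Runtime.InteropServices;

// Hypothetical class that owns both a managed and an unmanaged resource.
public class UnmanagedBuffer : IDisposable
{
    private readonly MemoryStream _log = new(); // managed resource
    private IntPtr _memory;                      // unmanaged resource
    private bool _isDisposed;

    public UnmanagedBuffer(int size)
    {
        Size = size;
        _memory = Marshal.AllocHGlobal(size);
    }

    public int Size { get; }
    public bool IsDisposed => _isDisposed;

    public void WriteByte(int offset, byte value)
    {
        if (_isDisposed) throw new ObjectDisposedException(nameof(UnmanagedBuffer));
        if ((uint)offset >= (uint)Size) throw new ArgumentOutOfRangeException(nameof(offset));

        Marshal.WriteByte(_memory, offset, value);
        _log.WriteByte(value);
    }

    public void Dispose()
    {
        Dispose(true);
        GC.SuppressFinalize(this); // the finalizer has nothing left to do
    }

    protected virtual void Dispose(bool disposing)
    {
        if (_isDisposed) return; // safe to call more than once

        if (disposing)
        {
            _log.Dispose(); // touch managed objects only when called from Dispose()
        }

        if (_memory != IntPtr.Zero)
        {
            Marshal.FreeHGlobal(_memory); // always release the unmanaged memory
            _memory = IntPtr.Zero;
        }

        _isDisposed = true;
    }

    ~UnmanagedBuffer() => Dispose(false);
}

public static class DisposePatternDemo
{
    public static void Main()
    {
        var buffer = new UnmanagedBuffer(16);
        buffer.WriteByte(0, 42);
        buffer.Dispose();
        buffer.Dispose(); // the second call is a no-op
        Console.WriteLine($"Disposed: {buffer.IsDisposed}");
    }
}
```

For new code, consider wrapping unmanaged handles in a class derived from SafeHandle rather than writing a finalizer yourself.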

Consider a class that encapsulates an instance of a database connection. You can implement the IDisposable interface in this class and provide your own implementation of the Dispose method to release the connection held by an instance of the class. For classes that directly own unmanaged resources, a finalizer can act as a backup that prevents permanent resource leaks if a developer forgets to call Dispose.

Listing 3 demonstrates how you can create a custom database manager class that implements the IDisposable interface. You can use the DbManager class, as shown in the code snippet below:

Listing 3: The DbManager Class

using Microsoft.Data.SqlClient; // assumes the Microsoft.Data.SqlClient NuGet package

public class DbManager<T> : IDisposable
{
    private bool isDisposed = false;
    private readonly string? connectionString = null;
    private SqlConnection? sqlConnection = null;

    public DbManager()
    {
        connectionString = "Write your db connection string here.";
        sqlConnection = new SqlConnection(connectionString);
    }

    public void InsertData(T item)
    {
        // Write your own code here to insert data
    }

    public void Dispose()
    {
        Dispose(true);
        GC.SuppressFinalize(this);
    }

    protected virtual void Dispose(bool disposing)
    {
        if (!isDisposed)
        {
            if (disposing)
            {
                // SqlConnection is a managed object, so dispose it only when called from Dispose()
                sqlConnection?.Dispose();
                sqlConnection = null;
            }

            isDisposed = true;
        }
    }
}

using (DbManager<Customer> dbManager = new DbManager<Customer>())
{
    Customer obj = new Customer();
    obj.Id = 1;
    obj.FirstName = "Joydip";
    obj.LastName = "Kanjilal";
    obj.Address = "Hyderabad, India";
    dbManager.InsertData(obj);
}

Avoid finalizers unless your class directly owns an unmanaged resource. Finalizers are non-deterministic, meaning that you can't predict when, or even whether, they will run, and objects of types that have finalizers survive at least one extra garbage collection, so they live longer.

If you need to invoke the GC.Collect() method in your code, you should call the GC.WaitForPendingFinalizers() method after it. This makes the currently executing thread wait until all pending finalizers have run; a second call to GC.Collect() then reclaims the objects whose finalizers have just completed. The following code snippet shows how you can do this:

System.GC.Collect();

System.GC.WaitForPendingFinalizers();

System.GC.Collect();

Points to Remember

When implementing disposable types, keep these points in mind:

- Every type with a finalizer should implement IDisposable.

- After Dispose has been called, make the object unusable, for example, by throwing an ObjectDisposedException from its members.

- Avoid using an object on which the Dispose() method has already been called.

- When you're done with an IDisposable type, call its Dispose() method.

- Allow multiple calls to the Dispose() method without raising errors.

- Call the GC.SuppressFinalize() method within the Dispose() method to suppress subsequent finalizer calls.

- Do not create value types that are disposable.

- Avoid throwing exceptions from within Dispose methods.

Understanding the Large Object Heap (LOH)

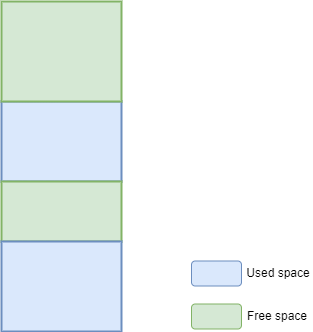

There are two types of managed heap in .NET Framework and .NET Core: the Small Object Heap (SOH) and the Large Object Heap (LOH). Although the garbage collector cleans up instances that are no longer needed in both, the SOH and LOH work differently. Objects smaller than 85,000 bytes are stored in the SOH, whereas large objects, that is, those of 85,000 bytes or more, are stored in the LOH.
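You can see where the 85,000-byte threshold takes effect by checking the generation of freshly allocated arrays. In this sketch (LohDemo is a hypothetical name), an 80,000-byte array lands on the SOH and starts in generation 0, whereas a 100,000-byte array goes to the LOH. Because the LOH is collected only together with generation 2, GC.GetGeneration should report a higher generation number for the large array.

```csharp
using System;

public static class LohDemo
{
    // Allocates one array below and one above the 85,000-byte threshold and
    // reports the generation the GC assigns to each.
    public static (int SmallGeneration, int LargeGeneration) Allocate()
    {
        var small = new byte[80_000];   // small object heap
        int smallGeneration = GC.GetGeneration(small);

        var large = new byte[100_000];  // large object heap
        int largeGeneration = GC.GetGeneration(large);

        GC.KeepAlive(small);
        return (smallGeneration, largeGeneration);
    }

    public static void Main()
    {
        var (smallGen, largeGen) = Allocate();
        Console.WriteLine($"80,000-byte array generation: {smallGen}");
        Console.WriteLine($"100,000-byte array generation: {largeGen}");
    }
}
```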

When a small object is created, the garbage collector allocates it in the SOH. It should be noted that the SOH is, in turn, organized into three generations, namely, generation 0, generation 1, and generation 2. When a garbage collection cycle runs on the SOH, the garbage collector cleans up the memory occupied by the unused objects and compacts the memory to eliminate fragmentation. Compaction of memory in the SOH incurs CPU cycles and usage of additional memory. However, the benefits of compaction outweigh the associated costs.

By default, the garbage collector doesn't compact the LOH, because moving large objects around is very costly. Instead, it frees the memory occupied by large objects that are no longer in use and leaves the surviving objects where they are. As a result, over time, holes appear in the LOH, that is, the memory becomes fragmented, which adversely affects the performance and scalability of your application. Figure 4 demonstrates how memory fragmentation occurs in the LOH.

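Although the garbage collector doesn't compact the LOH by default, you can still request a compaction explicitly. The following code compacts the LOH during the next blocking full (generation 2) collection, which the GC.Collect() call triggers: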

GCSettings.LargeObjectHeapCompactionMode = GCLargeObjectHeapCompactionMode.CompactOnce;

GC.Collect();

Getting Started: Benchmarking Performance

Let's examine the resource consumption of objects used in an application by benchmarking the performance of the application. First off, let's create a console application to write benchmark tests in Visual Studio 2022.

Create a New Console Application Project in Visual Studio 2022

Let's create a console application project that you'll use for benchmarking performance. You can create a project in Visual Studio 2022 in several ways. When you launch Visual Studio 2022, you'll see the Start window. You can choose Continue without code to launch the main screen of the Visual Studio 2022 IDE.

To create a new console application project in Visual Studio 2022:

- Start the Visual Studio 2022 IDE.

- In the Create a new project window, select Console App, and click Next to move on.

- Specify the project name as ResourceDemo and the path where it should be created in the Configure your new project window.

- If you want the solution file and project to be created in the same directory, you can optionally check the Place solution and project in the same directory checkbox. Click Next to move on.

- In the next screen, specify the target framework you’d like to use for your console application.

- Click Create to complete the process.

You'll use this application in the subsequent sections of this article.

Install NuGet Package(s)

So far so good. The next step is to install the necessary NuGet package(s). To install the required packages into your project, right-click on the solution and select Manage NuGet Packages for Solution. Then search for the package named BenchmarkDotNet and install it. Alternatively, you can type the following command at the NuGet Package Manager Console prompt:

PM> Install-Package BenchmarkDotNet

Create a Benchmarking Class

To create and execute benchmarks, here are the steps you should follow:

- Create a console application project in Visual Studio 2022.

- Add the BenchmarkDotNet NuGet package to the project.

- Create a class having one or more methods that you'd benchmark, and decorate them with the Benchmark attribute.

- Invoke the Run method of the BenchmarkRunner class in your code.

- Run your benchmark project in Release mode.

When benchmarking code using BenchmarkDotNet, run your application in Release mode.

A typical benchmark class contains one or more methods marked or decorated with the Benchmark attribute and, optionally, a method decorated with the GlobalSetup attribute, as shown in the code listing below:

using BenchmarkDotNet.Attributes;

public class BenchmarkExample
{
    [GlobalSetup]
    public void GlobalSetup()
    {
        // Write your initialization code here
    }

    [Benchmark]
    public void MyMethod()
    {
        // Write your code here
    }
}

You'll use this class in the sections that follow to benchmark performance.
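As a concrete example of such a class, the sketch below compares allocating a new buffer on every call with renting one from ArrayPool<byte>.Shared. The MemoryDiagnoser attribute adds allocated bytes and GC counts to the results, which is what you want when measuring resource consumption. BufferBenchmarks and its method names are hypothetical, and the 100,000-byte parameter was chosen so that the new allocation lands on the LOH.

```csharp
using System.Buffers;
using BenchmarkDotNet.Attributes;
using BenchmarkDotNet.Running;

// Compares a fresh allocation per operation with renting from ArrayPool<T>.
[MemoryDiagnoser] // reports allocated bytes and GC counts per operation
public class BufferBenchmarks
{
    [Params(1_024, 100_000)] // 100,000 bytes goes to the LOH when allocated with 'new'
    public int Size { get; set; }

    [Benchmark(Baseline = true)]
    public int AllocateNew()
    {
        byte[] buffer = new byte[Size];
        buffer[0] = 1;
        return buffer.Length;
    }

    [Benchmark]
    public int RentFromPool()
    {
        byte[] buffer = ArrayPool<byte>.Shared.Rent(Size);
        try
        {
            buffer[0] = 1;
            return buffer.Length;
        }
        finally
        {
            ArrayPool<byte>.Shared.Return(buffer);
        }
    }
}

public static class BenchmarkEntryPoint
{
    // Run in Release mode, for example: dotnet run -c Release
    public static void Main() => BenchmarkRunner.Run<BufferBenchmarks>();
}
```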

Resource Management Best Practices

This section examines some of the best practices in resource management in .NET Core applications.

Access Contiguous Memory

Take advantage of Span<T> and Memory<T> to access contiguous regions of memory. The Span<T>, Memory<T>, ReadOnlySpan<T>, and ReadOnlyMemory<T> types were introduced in .NET Core 2.1, with the language support they rely on arriving in C# 7.2. You can use these types to work with memory directly in a safe and highly performant manner.

Span<T> is a readonly ref struct defined in the System namespace. The following simplified declaration shows its two key fields; the exact internal fields differ between .NET versions:

public readonly ref struct Span<T>
{
    internal readonly ByReference<T> _pointer;
    private readonly int _length;
    // Other members
}
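To illustrate the kind of code Span<T> enables, this sketch parses a date string by slicing a ReadOnlySpan<char> and passing the slices to the span-based int.Parse overload, so no substrings are allocated. It also uses stackalloc to create a small Span<int> on the stack. SpanDemo and ParseDate are hypothetical names, and the parser assumes the input is always in yyyy-MM-dd format.

```csharp
using System;

public static class SpanDemo
{
    // Slices the input instead of calling Substring, so no intermediate strings are created.
    public static (int Year, int Month, int Day) ParseDate(ReadOnlySpan<char> text)
    {
        int year = int.Parse(text.Slice(0, 4));
        int month = int.Parse(text.Slice(5, 2));
        int day = int.Parse(text.Slice(8, 2));
        return (year, month, day);
    }

    public static void Main()
    {
        // A string converts implicitly to ReadOnlySpan<char>.
        var (year, month, day) = ParseDate("2023-07-15");
        Console.WriteLine($"{year}/{month}/{day}");

        // stackalloc memory lives on the stack, not on the managed heap.
        Span<int> squares = stackalloc int[5];
        for (int i = 0; i < squares.Length; i++)
        {
            squares[i] = i * i;
        }
        Console.WriteLine(squares[4]);
    }
}
```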

Avoid Calling the GC.Collect() Method

Don't call the GC.Collect() method in your application's source code. When you invoke GC.Collect() without arguments, the runtime performs a stack walk to identify reachable and unreachable objects and triggers a blocking garbage collection of all generations, i.e., generation 0, generation 1, and generation 2. Worse, the call blocks your application's thread until the garbage collector completes the collection across all three generations.

Cache Resources

To avoid the cost of re-creating objects and disposing of them, you should cache certain resources whenever possible in your application. By caching objects that are frequently used by your application, you may increase its performance as well as its responsiveness and its capacity to scale. ASP.NET Core supports three forms of caching: response caching, in-memory caching, and distributed caching. Listing 4 shows how you can use memory cache in your ASP.NET Core applications.

Listing 4: The MyCacheController class that leverages the built-in IMemoryCache interface

using Microsoft.AspNetCore.Mvc;
using Microsoft.Extensions.Caching.Memory;

[Route("api/[controller]")]
public class MyCacheController : Controller
{
    private readonly IMemoryCache _memoryCache;

    public MyCacheController(IMemoryCache memoryCache)
    {
        _memoryCache = memoryCache;
    }

    [HttpGet]
    public string Get()
    {
        return _memoryCache.GetOrCreate<string>("MyKey", cacheEntry =>
        {
            return "This text is cached...";
        });
    }
}
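Listing 4 caches its value indefinitely. To keep cached data from holding on to memory forever, you can set expiration on each entry inside the GetOrCreate factory. The sketch below runs outside ASP.NET Core and assumes the Microsoft.Extensions.Caching.Memory NuGet package; CacheDemo is a hypothetical class that counts how often the factory runs.

```csharp
using System;
using Microsoft.Extensions.Caching.Memory;

public static class CacheDemo
{
    public static int FactoryCalls { get; private set; }

    public static string GetGreeting(IMemoryCache cache)
    {
        // The factory runs only when the key is missing or has expired.
        return cache.GetOrCreate("MyKey", entry =>
        {
            FactoryCalls++;
            // Expiration keeps cached data from occupying memory indefinitely.
            entry.AbsoluteExpirationRelativeToNow = TimeSpan.FromMinutes(5);
            entry.SlidingExpiration = TimeSpan.FromMinutes(1);
            return "This text is cached...";
        })!;
    }

    public static void Main()
    {
        // MemoryCache is IDisposable; outside a DI container, you own its lifetime.
        using var cache = new MemoryCache(new MemoryCacheOptions());
        Console.WriteLine(GetGreeting(cache));
        Console.WriteLine(GetGreeting(cache));
        Console.WriteLine($"Factory calls: {FactoryCalls}");
    }
}
```

Calling GetGreeting twice runs the factory only once, because the second call finds the entry in the cache.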

Avoid Allocations in LOH

In .NET Core, your application creates objects in the managed heap. When those objects are no longer needed, the garbage collector reclaims the memory they occupy, which leaves holes in the managed heap. This phenomenon is also known as memory fragmentation. The memory block where the allocations and de-allocations occurred should be compacted to eliminate fragmentation. Compaction is a process that moves the live objects together so that the available or free space in the memory is contiguous.

You can use the following code snippet to compact the LOH programmatically in C#:

GCSettings.LargeObjectHeapCompactionMode = GCLargeObjectHeapCompactionMode.CompactOnce;

GC.Collect();

The SOH is compacted at the time when the garbage collection process is in execution. Contrary to SOH, the LOH is not compacted during a garbage collection cycle. This is because the cost of moving the memory blocks in the LOH is considerably higher than in the SOH. As a result, memory holes are formed in the LOH, resulting in memory fragmentation. Although you can compact the LOH programmatically, it's a recommended practice to avoid using LOH as much as possible.

Minimize Allocations

The ArrayPool<T> class in the System.Buffers namespace provides a high-performance pool of reusable managed arrays. If your application frequently needs temporary arrays, renting them from the pool instead of allocating new ones minimizes allocations and reduces pressure on the garbage collector. The following code snippet shows how this class is defined as an abstract class in the System.Buffers namespace.

namespace System.Buffers
{
    public abstract class ArrayPool<T>
    {
        private static readonly TlsOverPerCoreLockedStacksArrayPool<T>
            s_shared = new TlsOverPerCoreLockedStacksArrayPool<T>();

        public static ArrayPool<T> Shared => s_shared;

        public static ArrayPool<T> Create() => new ConfigurableArrayPool<T>();

        public static ArrayPool<T> Create(int maxArrayLength, int maxArraysPerBucket)
            => new ConfigurableArrayPool<T>(maxArrayLength, maxArraysPerBucket);

        public abstract T[] Rent(int minimumLength);

        public abstract void Return(T[] array, bool clearArray = false);
    }
}

The following code snippet illustrates how you can use an ArrayPool in C#:

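var array = ArrayPool<int>.Shared.Rent(1000); // Length is at least 1000, possibly more
try
{
    //Write your usual code here
}
finally
{
    ArrayPool<int>.Shared.Return(array); // Runs even if an exception is thrown
}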
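A common use of ArrayPool<T> is as a scratch buffer for reading a stream. The following sketch assumes a hypothetical PooledStreamReader.CountOccurrences helper that counts how often a byte value, such as a newline, appears in a stream. It rents one buffer, reuses it for every read, and returns it in a finally block.

```csharp
using System;
using System.Buffers;
using System.IO;

public static class PooledStreamReader
{
    // Hypothetical helper: counts occurrences of a byte value in a stream using one pooled buffer.
    public static long CountOccurrences(Stream stream, byte value, int bufferSize = 4096)
    {
        byte[] buffer = ArrayPool<byte>.Shared.Rent(bufferSize);
        try
        {
            long count = 0;
            int bytesRead;
            // Rent may return a larger array than requested; reading into its full length is still safe.
            while ((bytesRead = stream.Read(buffer, 0, buffer.Length)) > 0)
            {
                // Only the first bytesRead bytes hold data from this read.
                foreach (byte b in buffer.AsSpan(0, bytesRead))
                {
                    if (b == value) count++;
                }
            }
            return count;
        }
        finally
        {
            // Return the buffer even if reading throws, so the pool can reuse it.
            ArrayPool<byte>.Shared.Return(buffer);
        }
    }
}
```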

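You can also create a custom array pool by deriving from ArrayPool<T> and overriding its Rent and Return methods, as shown in the skeleton below:

using System;
using System.Buffers;

public class ArrayPoolExample<T> : ArrayPool<T>
{
    // Return an array whose length is at least minimumLength
    public override T[] Rent(int minimumLength)
    {
        throw new NotImplementedException();
    }

    // Take back an array rented from this pool so that it can be reused
    public override void Return(T[] array, bool clearArray = false)
    {
        throw new NotImplementedException();
    }
}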
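For comparison, here's one way such a skeleton could be filled in. FixedSizeArrayPool<T> is a hypothetical, deliberately simple pool that caches arrays of one fixed length in a ConcurrentBag<T[]>. It doesn't bucket arrays by size the way ArrayPool<T>.Shared does, and it retains every returned array without a limit.

```csharp
using System;
using System.Buffers;
using System.Collections.Concurrent;

// Hypothetical pool that hands out arrays of a single fixed length.
public sealed class FixedSizeArrayPool<T> : ArrayPool<T>
{
    private readonly int _arrayLength;
    private readonly ConcurrentBag<T[]> _arrays = new();

    public FixedSizeArrayPool(int arrayLength)
    {
        if (arrayLength <= 0) throw new ArgumentOutOfRangeException(nameof(arrayLength));
        _arrayLength = arrayLength;
    }

    // Reuses a cached array if one is available; otherwise allocates a new one.
    public override T[] Rent(int minimumLength)
    {
        if (minimumLength > _arrayLength)
            throw new ArgumentOutOfRangeException(nameof(minimumLength));
        return _arrays.TryTake(out var array) ? array : new T[_arrayLength];
    }

    // Accepts only arrays of this pool's length; optionally clears them before caching.
    public override void Return(T[] array, bool clearArray = false)
    {
        if (array is null) throw new ArgumentNullException(nameof(array));
        if (array.Length != _arrayLength)
            throw new ArgumentException("The array does not belong to this pool.", nameof(array));
        if (clearArray) Array.Clear(array, 0, array.Length);
        _arrays.Add(array);
    }
}
```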

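You can also use Span<T> to minimize allocations. Span<T> is a stack-only type that provides a view over a contiguous region of memory, so you can work with slices of arrays and strings without copying them. It's defined as a readonly ref struct in the System namespace: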

public readonly ref struct Span<T>
{
    // Simplified; the internal field names differ between .NET versions
    internal readonly ByReference<T> _pointer;
    private readonly int _length;
    //Other members
}

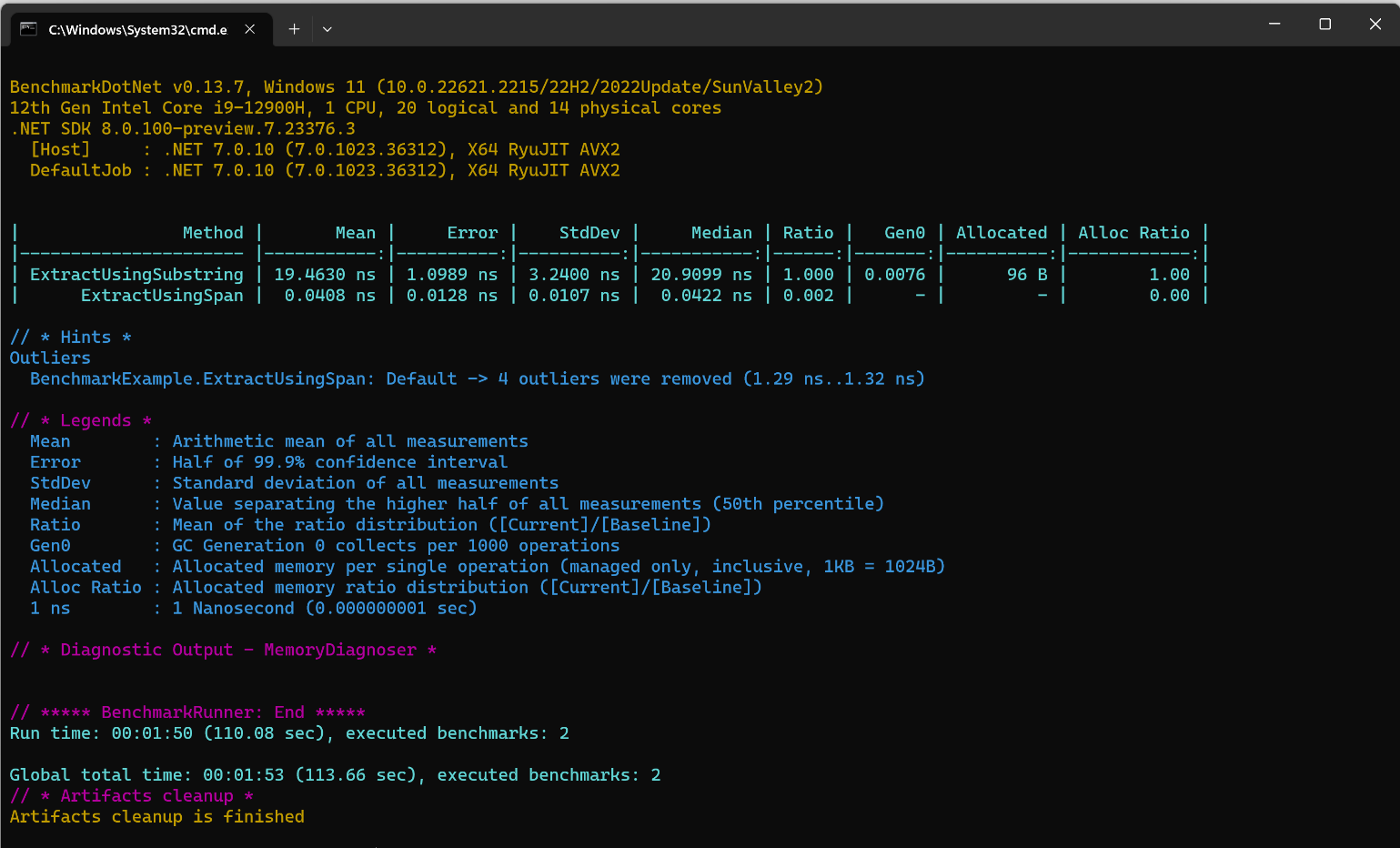

You can learn more about Span<T> from my article in the July/August 2022 issue of CODE Magazine, “Writing High-Performance Code Using Span<T> and Memory<T> in C#.” Listing 5 benchmarks two ways of extracting the day, month, and year from a date string. One uses the Substring method of the String class, which allocates a new string for each part. The other uses Span<T> slicing, which doesn't allocate.

Listing 5: The BenchmarkExample class

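using System;
using System.Globalization;
using BenchmarkDotNet.Attributes;

[MemoryDiagnoser]
public class BenchmarkExample
{
    static string currentDateAsString = null;

    [GlobalSetup]
    public void GlobalSetup()
    {
        // Use a fixed, culture-invariant format so the indexes below are always valid;
        // ToShortDateString() varies by culture (for example, "1/5/2023" in en-US)
        currentDateAsString = DateTime.Now.ToString("dd/MM/yyyy", CultureInfo.InvariantCulture);
    }

    [Benchmark(Baseline = true)]
    public void ExtractUsingSubstring()
    {
        // Each Substring call allocates a new string
        var day = currentDateAsString.Substring(0, 2);
        var month = currentDateAsString.Substring(3, 2);
        var year = currentDateAsString.Substring(6, 4);
    }

    [Benchmark]
    public void ExtractUsingSpan()
    {
        // Slices are views over the original string; nothing is allocated
        ReadOnlySpan<char> currentDateSpan = currentDateAsString;
        var day = currentDateSpan.Slice(0, 2);
        var month = currentDateSpan.Slice(3, 2);
        var year = currentDateSpan.Slice(6, 4);
    }
}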

Figure 5 illustrates the performance benchmarking results of the two methods.
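For a quick check outside BenchmarkDotNet, GC.GetAllocatedBytesForCurrentThread reports how many managed bytes the current thread has allocated. The sketch below parses a "dd/MM/yyyy" string both ways and compares the allocations. The DateParsingDemo class and its methods are hypothetical. This is a rough measurement, not a substitute for a proper benchmark.

```csharp
using System;
using System.Globalization;

// Hypothetical demo class; it assumes dates in the fixed "dd/MM/yyyy" format.
public static class DateParsingDemo
{
    // Slicing a ReadOnlySpan<char> creates views over the original string, so no substrings are allocated.
    public static (int Day, int Month, int Year) ParseWithSpan(string date)
    {
        ReadOnlySpan<char> span = date;
        int day = int.Parse(span.Slice(0, 2), NumberStyles.None, CultureInfo.InvariantCulture);
        int month = int.Parse(span.Slice(3, 2), NumberStyles.None, CultureInfo.InvariantCulture);
        int year = int.Parse(span.Slice(6, 4), NumberStyles.None, CultureInfo.InvariantCulture);
        return (day, month, year);
    }

    // Same result, but each Substring call allocates a new string on the heap.
    public static (int Day, int Month, int Year) ParseWithSubstring(string date)
    {
        int day = int.Parse(date.Substring(0, 2), NumberStyles.None, CultureInfo.InvariantCulture);
        int month = int.Parse(date.Substring(3, 2), NumberStyles.None, CultureInfo.InvariantCulture);
        int year = int.Parse(date.Substring(6, 4), NumberStyles.None, CultureInfo.InvariantCulture);
        return (day, month, year);
    }

    // Rough measurement: managed bytes allocated on the current thread while running the action repeatedly.
    public static long MeasureAllocations(Action action, int iterations)
    {
        action(); // Warm up so one-time costs aren't included
        long before = GC.GetAllocatedBytesForCurrentThread();
        for (int i = 0; i < iterations; i++)
        {
            action();
        }
        return GC.GetAllocatedBytesForCurrentThread() - before;
    }
}
```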

Replace Classes with structs Wherever Possible

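A class instance is allocated on the managed heap and carries an object header and a method table pointer in addition to its fields. A struct has neither. Its fields are stored inline, whether on the stack, inside another object, or inside an array. Consider using a struct instead of a class for small types that hold only a few data members. An array of structs is a single allocation, whereas an array of class instances needs a separate heap allocation for every element, so structs can be significantly faster when you're working with large amounts of data. Keep in mind that structs are copied by value, so large structs can become expensive to pass around.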

Consider the following class and struct:

class MySalaryClass
{
    public double Basic { get; set; }
    public double DA { get; set; }
    public double HRA { get; set; }
}

struct MySalaryStruct
{
    public double Basic { get; set; }
    public double DA { get; set; }
    public double HRA { get; set; }
}

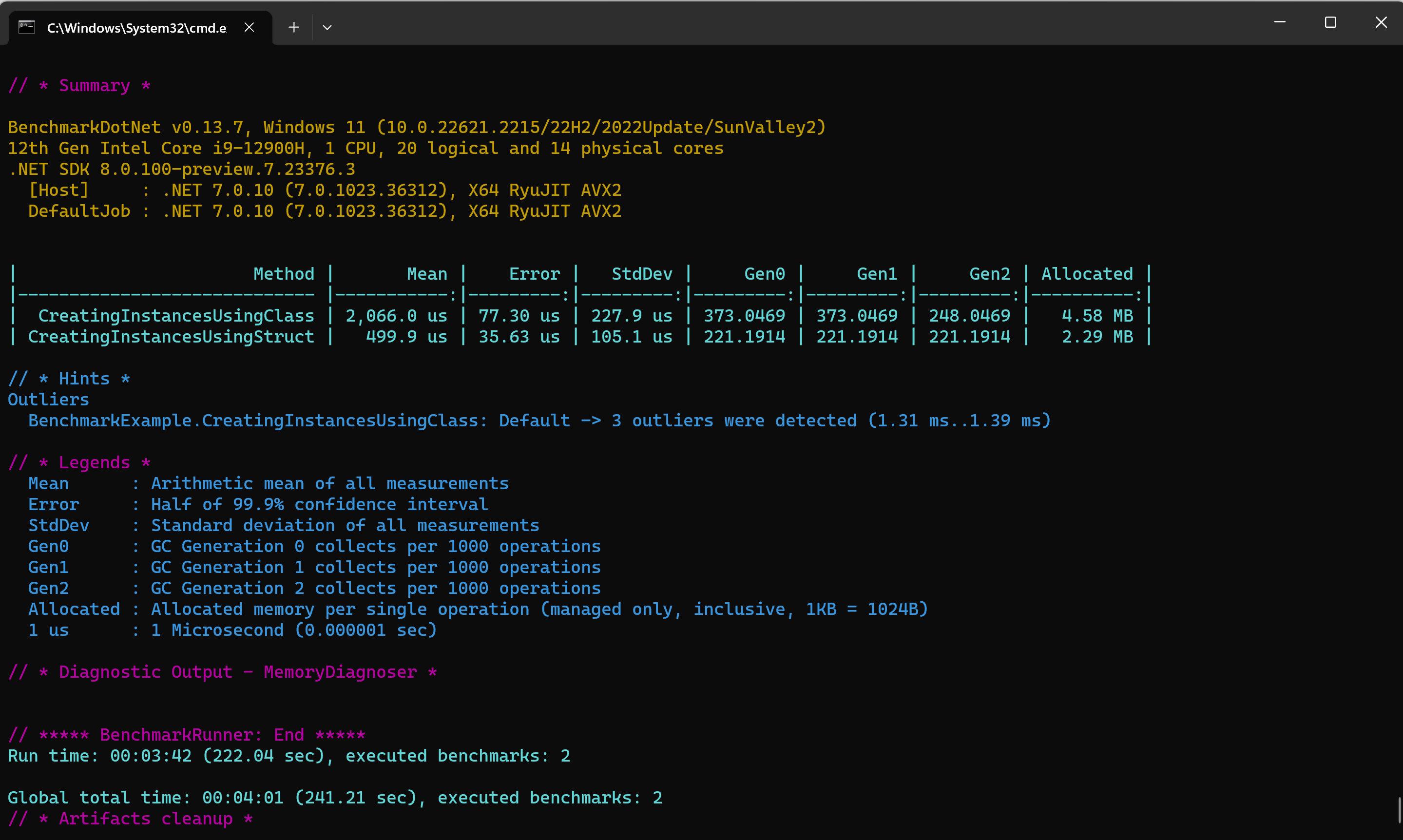

You can write the code given in Listing 6 to benchmark performance of instances of structs and classes. Figure 6 shows the benchmark results of the CreatingInstancesUsingClass and CreatingInstancesUsingStruct methods.

Listing 6: The [Benchmark] methods

const int NumberOfItems = 100000;

[Benchmark]
public void CreatingInstancesUsingClass()
{
    // One allocation for the array of references, plus one heap allocation per element
    MySalaryClass[] mySalaryClassArray = new MySalaryClass[NumberOfItems];
    for (int i = 0; i < NumberOfItems; i++)
    {
        mySalaryClassArray[i] = new MySalaryClass();
    }
}

[Benchmark]
public void CreatingInstancesUsingStruct()
{
    // A single allocation: the struct values are stored inline in the array
    MySalaryStruct[] mySalaryStructArray = new MySalaryStruct[NumberOfItems];
    for (int i = 0; i < NumberOfItems; i++)
    {
        mySalaryStructArray[i] = new MySalaryStruct();
    }
}

You can reduce allocations even further by renting the array of structs from an ArrayPool instead of allocating a new array each time, because the pooled array is reused across calls.
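The following sketch combines the two ideas by renting an array of structs from ArrayPool<T>.Shared. SalaryRecord and PooledPayroll.TotalPay are hypothetical names. SalaryRecord is modeled on the fields of MySalaryClass and is declared here so that the sketch compiles on its own.

```csharp
using System;
using System.Buffers;

// Hypothetical salary struct for this sketch, modeled on MySalaryClass.
public struct SalaryRecord
{
    public double Basic { get; set; }
    public double DA { get; set; }
    public double HRA { get; set; }
}

public static class PooledPayroll
{
    // Hypothetical helper: stores `count` salary records in a pooled array and returns the total pay.
    public static double TotalPay(int count, Func<int, SalaryRecord> createSalary)
    {
        SalaryRecord[] salaries = ArrayPool<SalaryRecord>.Shared.Rent(count);
        try
        {
            // Rent may return a longer array than requested, so only the first `count` slots are used.
            for (int i = 0; i < count; i++)
            {
                salaries[i] = createSalary(i);
            }

            double total = 0;
            for (int i = 0; i < count; i++)
            {
                total += salaries[i].Basic + salaries[i].DA + salaries[i].HRA;
            }
            return total;
        }
        finally
        {
            // No object references to release; pass clearArray: true if the data is sensitive.
            ArrayPool<SalaryRecord>.Shared.Return(salaries);
        }
    }
}
```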

Use the “using” Keyword with IDisposable Objects

When you use the using keyword with IDisposable objects, that is, instances of classes that implement the IDisposable interface, the object's Dispose method is called as soon as control leaves the using block, even if an exception is thrown. The following code snippet shows how you can use the using keyword:

// SqlConnection is in the Microsoft.Data.SqlClient package
using Microsoft.Data.SqlClient;

using (var sqlConnection = new SqlConnection(connectionString))
{
    // Write your usual code here to perform CRUD operations
}

// As soon as control leaves the using block, Dispose() is called automatically

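A using block guarantees that the connection is disposed even when an exception occurs, but it doesn't handle the exception. When you work with database connections and other objects that wrap unmanaged resources and can fail at runtime, wrap the using block inside a try block so that you can handle errors, as shown below: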

try
{
    using (var sqlConnection = new SqlConnection(connectionString))
    {
        // Write code here to connect to a database and execute commands
    }
}
catch (Exception)
{
    // You can write your code here to handle the exception (for example, log it),
    // then rethrow it so that callers know the operation failed
    throw;
}
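The following sketch shows that guarantee at work. It uses a hypothetical TrackedResource class that only records whether Dispose has been called, instead of a real database connection. It also uses the C# 8 using declaration (using var), which disposes the object when control leaves the enclosing block. As a result, the resource has already been disposed by the time the catch block runs.

```csharp
using System;

// Hypothetical disposable type that only records whether Dispose has been called.
public sealed class TrackedResource : IDisposable
{
    public bool IsDisposed { get; private set; }

    public void Dispose()
    {
        // A real type would release connections, file handles, or other unmanaged resources here.
        IsDisposed = true;
    }
}

public static class UsingDemo
{
    public static TrackedResource DisposeDespiteException()
    {
        TrackedResource? captured = null;
        try
        {
            // C# 8 using declaration: disposed when control leaves the enclosing try block.
            using var resource = new TrackedResource();
            captured = resource;
            throw new InvalidOperationException("Simulated database failure");
        }
        catch (InvalidOperationException)
        {
            // resource.Dispose() has already run by the time this handler executes.
        }
        return captured!;
    }
}
```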

Use Object Pooling

An object pool is a collection of pre-initialized, reusable objects that your application can use to reduce object creation costs. Object pooling lets you recycle objects that are expensive to create rather than creating a new one every time your application needs one. This reduces processing and allocation overhead, especially in applications that are resource-intensive and performance-critical.

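You can use the CustomObjectPool class in Listing 7 to work with object pooling. It stores up to ten items in a Stack<T>. The Get method reuses a pooled object when one is available and creates a new one otherwise, and the Insert method returns an object to the pool. Note that this class isn't thread-safe.

Listing 7: The CustomObjectPool class for storing frequently used objects

using System.Collections.Generic;

public class CustomObjectPool<T> where T : new()
{
    // Objects waiting to be reused
    private readonly Stack<T> items = new Stack<T>();

    private const int MAX_ELEMENTS = 10;

    // Returns an object to the pool; if the pool is already full, the object is discarded
    public void Insert(T item)
    {
        if (items.Count < MAX_ELEMENTS)
        {
            items.Push(item);
        }
    }

    // Takes an object from the pool, or creates a new one if the pool is empty
    public T Get()
    {
        return items.Count > 0 ? items.Pop() : new T();
    }
}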

You can also use the classes in the Microsoft.Extensions.ObjectPool package, such as ObjectPool<T> and DefaultObjectPoolProvider, to create and manage your object pools.
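If you need a pool that several threads can share and you'd rather not take a package dependency, a ConcurrentBag<T> is one simple way to build it. ThreadSafeObjectPool<T> below is a hypothetical sketch, not the API of Microsoft.Extensions.ObjectPool. It assumes that you supply a factory for new instances and a reset action that clears an instance's state before it's reused.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical thread-safe object pool; a simplified stand-in, not Microsoft.Extensions.ObjectPool.
public sealed class ThreadSafeObjectPool<T> where T : class
{
    private readonly ConcurrentBag<T> _items = new();
    private readonly Func<T> _factory;
    private readonly Action<T> _reset;
    private readonly int _maxRetained;

    public ThreadSafeObjectPool(Func<T> factory, Action<T> reset, int maxRetained = 10)
    {
        _factory = factory;
        _reset = reset;
        _maxRetained = maxRetained;
    }

    // Reuses a pooled instance if one is available; otherwise creates a new one.
    public T Get() => _items.TryTake(out var item) ? item : _factory();

    // Resets the instance and keeps it for reuse unless the pool is full.
    // The Count check isn't atomic with Add, so the cap is approximate under contention.
    public void Return(T item)
    {
        _reset(item);
        if (_items.Count < _maxRetained)
        {
            _items.Add(item);
        }
    }
}
```

For example, pooling StringBuilder instances with a reset action of sb => sb.Clear() lets hot code paths reuse builders instead of allocating a new one on every call.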

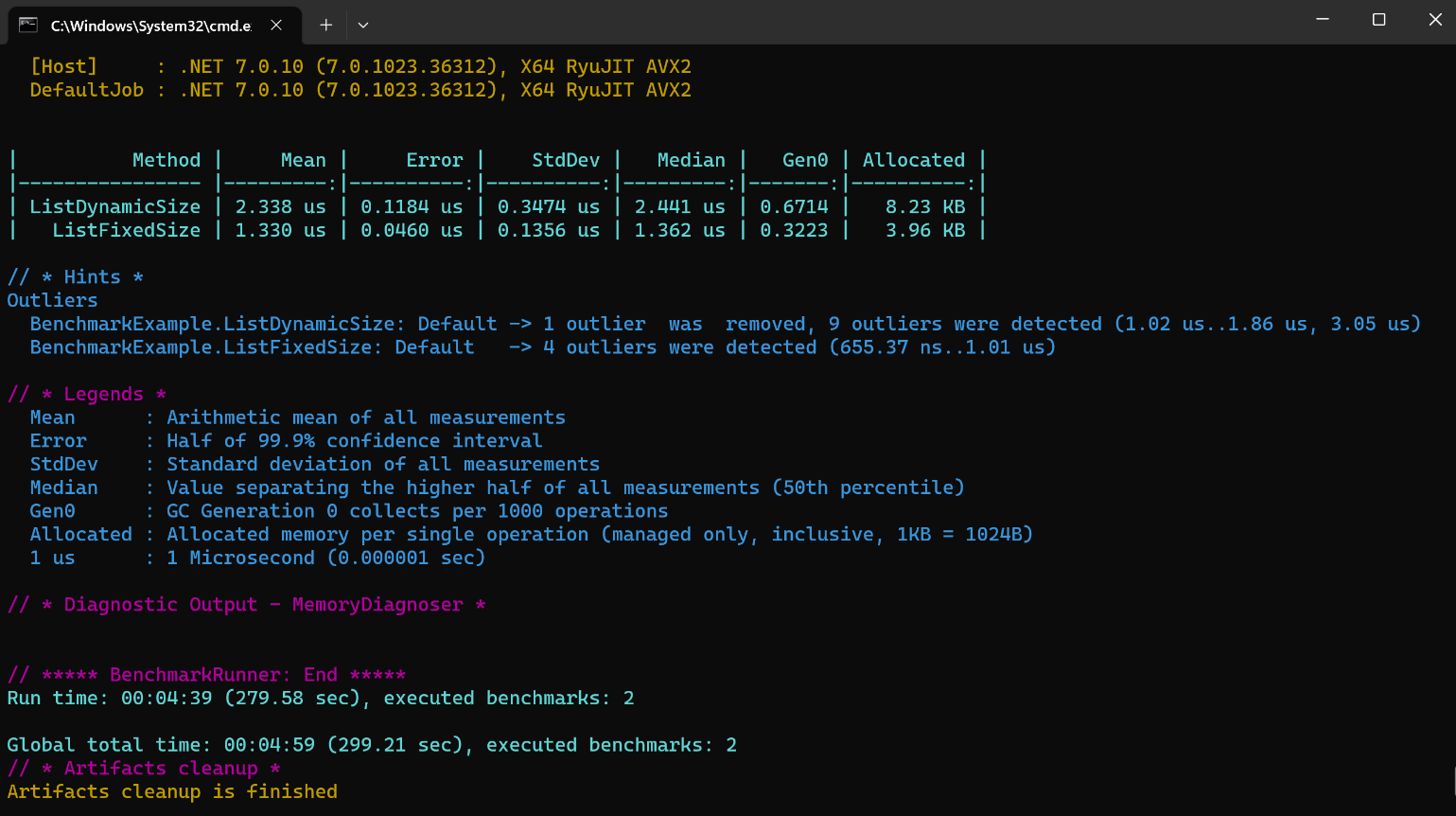

Pre-Size Data Structures

You can pre-size the data structures you use in your application for better performance. When you add items to a growable collection such as List<T> without specifying a capacity, the collection starts with a small internal array and replaces it with a larger one whenever it runs out of room. Each resize allocates a new array and copies the existing elements into it. You can avoid this overhead by passing the expected number of items as the capacity parameter when you create the collection. Listing 8 benchmarks two methods: One creates a list with a predefined capacity and the other lets the list grow dynamically.

Listing 8: The BenchmarkExample class containing the methods to be benchmarked

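using System.Collections.Generic;
using BenchmarkDotNet.Attributes;

[MemoryDiagnoser]
public class BenchmarkExample
{
    const int SIZE = 1000;

    [Benchmark]
    public void ListDynamicSize()
    {
        // No capacity specified: the list reallocates its internal array as it grows
        List<int> list = new List<int>();
        for (int i = 0; i < SIZE; i++)
        {
            list.Add(i);
        }
    }

    [Benchmark]
    public void ListFixedSize()
    {
        // Capacity specified up front: the internal array is allocated once
        List<int> list = new List<int>(SIZE);
        for (int i = 0; i < SIZE; i++)
        {
            list.Add(i);
        }
    }
}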

Figure 7 shows how the performance benchmarks look when the preceding program is executed.
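To see where the difference comes from, you can watch List<T>.Capacity while items are added. In the sketch below, the hypothetical CapacityDemo.CountResizes method counts how often the capacity changes, where each change means a new internal array was allocated and the old elements were copied. The exact growth pattern is an implementation detail. On current .NET versions the list typically starts at 4 and doubles, so 1,000 items without a preset capacity cause about nine resizes, while a preset capacity of 1,000 causes none.

```csharp
using System.Collections.Generic;

public static class CapacityDemo
{
    // Counts how many times a List<int>'s capacity changes while adding itemCount items.
    // Each change means the list allocated a new internal array and copied the old elements into it.
    public static int CountResizes(int itemCount, int initialCapacity)
    {
        var list = new List<int>(initialCapacity);
        int resizes = 0;
        int lastCapacity = list.Capacity;
        for (int i = 0; i < itemCount; i++)
        {
            list.Add(i);
            if (list.Capacity != lastCapacity)
            {
                resizes++;
                lastCapacity = list.Capacity;
            }
        }
        return resizes;
    }
}
```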

Conclusion

By adhering to the best practices discussed in this article, you can build applications that are high-performing and scalable. After you've optimized your code based on these practices, benchmark it and analyze the results to confirm that the changes actually improve performance.