When Microsoft first conceived of SharePoint, the product team decided that the content database was the best place to store file uploads. Before you pull out daggers, consider that this choice had many advantages. A virus in an upload that goes into the database can never corrupt the server. There are no filename issues. You get transaction support, easy backups, and so on. Also, believe it or not, below a certain file size (the smaller the better), databases can actually offer better performance than traditional file systems for storage. In addition, the product team decided to rely heavily on GUIDs and clustered indexes inside the content database - again, a choice with positives and negatives.

Fast forward ten years, and technology has changed. Databases are now much more capable of handling binaries, but on the other hand, one picture from an average smartphone is now about 8 megabytes, so documents are also much larger.

It is thus no surprise that there is increasing pressure on SharePoint to handle large files. In this article, I will attempt to separate marketing from reality, and offer you, the IT professional, a true picture of what you need to do to plan for large files in SharePoint.

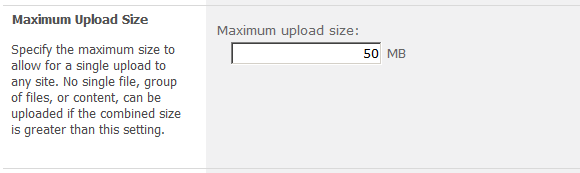

Out of the box, in a plain vanilla SharePoint installation, the maximum upload size is set per web application and defaults to 50MB. You can verify this in Central Administration: go to Manage Web Applications, select the web application, choose General Settings\General Settings, and check the value as shown in Figure 1.

You can change this limit to a maximum of 2GB; however, I wish it were as simple as putting a new value into this textbox. The reality is that large file uploads require you to consider numerous items at every link in the chain.

Web Server Considerations

The ability to upload large files doesn’t come without penalties. By default, there are two limits to consider: a limit on the maximum request length and a limit on the execution timeout.

- The default maximum request length in ASP.NET is 4MB because every single upload runs as an HTTP POST request and occupies server memory and a server thread, thereby heavily reducing the server’s capacity to support multiple users concurrently.

- There is also a limit on the maximum execution timeout, to avoid slow denial of service attacks where a hacker starts a spurious upload and then uploads really, really slowly. To avoid that issue, most web applications have a default execution timeout. In order to support larger uploads, you need to change this to a higher value as well. Unfortunately, this change makes your server more vulnerable to such attacks.
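To get a feel for how file size and the execution timeout interact, here is a rough back-of-the-envelope sketch in Python. The function name, the link speeds, and the safety factor are my own illustrative assumptions, not values defined by ASP.NET, IIS, or SharePoint; the sketch only estimates how long a single upload would hold a request open at a given sustained bandwidth.

```python
import math


def min_execution_timeout_seconds(file_size_bytes, bandwidth_bits_per_sec, safety_factor=1.5):
    """Estimate the execution timeout (in seconds) one upload would need.

    safety_factor is a hypothetical padding for protocol overhead and slow
    spells; it is not a setting that exists in ASP.NET or IIS.
    """
    if file_size_bytes < 0:
        raise ValueError("file_size_bytes must not be negative")
    if bandwidth_bits_per_sec <= 0:
        raise ValueError("bandwidth_bits_per_sec must be positive")
    if safety_factor < 1:
        raise ValueError("safety_factor must be at least 1")
    transfer_seconds = file_size_bytes * 8 / bandwidth_bits_per_sec
    return math.ceil(transfer_seconds * safety_factor)


MB = 1024 * 1024

# Assumed scenario: a 500MB upload over a slow and a fast link.
for label, bits_per_sec in [("1 Mbps", 1_000_000), ("100 Mbps", 100_000_000)]:
    seconds = min_execution_timeout_seconds(500 * MB, bits_per_sec)
    print(label, "->", seconds, "seconds")
```

The longer the timeout this suggests for your slowest legitimate user, the longer a deliberately slow attacker can also hold a server thread, which is exactly the trade-off described above.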

You can fix both of these by tweaking the web.config of your web application. The request length is controlled by the system.web\httpRuntime\@maxRequestLength attribute, and the execution timeout by the @executionTimeout attribute on the same element. Note that SharePoint already sets the corresponding IIS limit to a pretty high value by default, at the system.webServer\security\requestFiltering\requestLimits\@maxAllowedContentLength attribute. If you are using SharePoint for an Internet-facing site, you may want to tweak this lower. One additional thing you will probably have to set at the IIS level, however, is the connection timeout on the website. You can access it by going to the advanced settings of the website and changing the connection timeout.
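As a sketch of where these attributes live, a web.config fragment might look like the following. The values are illustrative assumptions, not recommendations, and a real SharePoint web.config already contains these elements, so you would edit the existing ones rather than paste in duplicates. Note the different units: maxRequestLength is in kilobytes, executionTimeout is in seconds, and maxAllowedContentLength is in bytes.

```xml
<configuration>
  <system.web>
    <!-- maxRequestLength is in kilobytes; executionTimeout is in seconds.
         Both values here are illustrative assumptions. -->
    <httpRuntime maxRequestLength="2097151" executionTimeout="3600" />
  </system.web>
  <system.webServer>
    <security>
      <requestFiltering>
        <!-- maxAllowedContentLength is in bytes; lower it for an
             Internet-facing site if large uploads are not needed. -->
        <requestLimits maxAllowedContentLength="2147483647" />
      </requestFiltering>
    </security>
  </system.webServer>
</configuration>
```

Keep in mind that ASP.NET only enforces executionTimeout when the compilation element has debug set to false.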

Database Considerations

Now let’s consider what larger files do to the database. Every time a user hits CTRL-S to save a large file into SharePoint, the poor SQL Server 2008 behind a SharePoint 2010 installation has to save an entirely new version of the document. The space occupied by the previous version is lost, and is never reclaimed unless you consciously defragment and compact the database. A database administrator generally performs this operation during off hours, and it may even require the database to be taken offline. The problem, of course, is that larger documents make fragmentation worse, and performance poorer as a result.

The other issue with large files is that SharePoint limits the amount of data it reads at one time. Larger documents need more such reads, and therefore more roundtrips to the database. Luckily, you can set a larger file chunk size using the following command:

    stsadm -o setproperty -pn large-file-chunk-size -pv 1073741824

The above command sets the chunk size to 1GB (the value is in bytes), which suits larger files but has a negative impact on databases serving mostly smaller files.
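The effect of the chunk size on roundtrips is simple arithmetic, sketched below in Python. This is a simplified model that assumes each read pulls at most one chunk; the function name and the 5MB comparison value are my own assumptions for illustration, while 1073741824 is the value from the command above.

```python
def chunk_reads(file_size_bytes, chunk_size_bytes):
    """Number of chunk-sized reads needed to fetch a file of the given size."""
    if file_size_bytes < 0:
        raise ValueError("file_size_bytes must not be negative")
    if chunk_size_bytes <= 0:
        raise ValueError("chunk_size_bytes must be positive")
    # Integer ceiling division avoids floating-point rounding on large sizes.
    return (file_size_bytes + chunk_size_bytes - 1) // chunk_size_bytes


MB = 1024 ** 2
GB = 1024 ** 3

file_size = 2 * GB
small_chunk = 5 * MB       # assumed smaller chunk size, for comparison only
large_chunk = 1073741824   # the 1GB value set by the stsadm command

print("reads with small chunk:", chunk_reads(file_size, small_chunk))
print("reads with large chunk:", chunk_reads(file_size, large_chunk))
```

Run the same numbers against your typical small document and you can see why a huge chunk size buys nothing there: a file smaller than either chunk size needs a single read in both cases.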

Sometimes organizations also wish to store large media files in SharePoint. Unless you need SharePoint-specific features such as check-in, check-out, versions, etc., I strongly encourage you to consider a file store or Azure blob storage as an alternate storage mechanism. You could use SharePoint as well, but it will probably cost you more or not perform as well. What’s most interesting is that with claims-based authentication you can build a front end that feels quite well integrated with SharePoint, so for simple streaming purposes the end users will not even notice the difference. Put simply, Windows Media Services is not something SharePoint intends to replace - if media is what you are dealing with, you should try to leverage Windows Media Services.

Programming Considerations

You need to know about one more limitation: the client object model has a built-in limit that prevents you from uploading large files. When faced with a file larger than 3MB, the client object model will simply return an HTTP 400 bad request error message.

You can get around this limitation in two ways. First, you can set a higher limit on the upload size, which you can see in this code snippet.

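    // Server-side code: requires a reference to Microsoft.SharePoint.dll.
    using Microsoft.SharePoint.Administration;

    // Raise the message size limit that the client object model enforces.
    SPWebService ws = SPWebService.ContentService;
    SPClientRequestServiceSettings clientSettings = ws.ClientRequestServiceSettings;
    clientSettings.MaxReceivedMessageSize = 10485760; // 10MB, in bytes
    ws.Update();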

The second way is to use HTTP DAV and send the raw binary across the wire, as shown below.

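    // Client-side code: requires Microsoft.SharePoint.Client.dll and
    // Microsoft.SharePoint.Client.Runtime.dll, plus System.IO for FileStream.
    ClientContext context = new ClientContext("http://spdevinwin");
    using (FileStream fs = new FileStream(filePath, FileMode.Open, FileAccess.Read))
    {
        // File is fully qualified to avoid a clash with System.IO.File.
        // sharePointFilePath is the server-relative URL; true overwrites an existing file.
        Microsoft.SharePoint.Client.File.SaveBinaryDirect(context, sharePointFilePath, fs, true);
    }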

RBS - Remote Blob Storage

How could I talk about large files and not talk about RBS? RBS stands for remote blob storage, which lets you specify, on a per-content-database basis, that the binaries of files larger than a specified size end up in a remote location managed by the remote blob storage provider of your choice. The problem is that RBS is like the cow who gives one can of milk and tips over two. While it does solve the issue of storing files in the database, it introduces a host of other considerations, including:

- Backups and disaster recovery become more complex.

- External RBS stores rarely enlist in database transactions, which can lead to inconsistencies between the database and the blob store.

- Just because you are using RBS doesn’t mean you will get better performance; you are still limited to some extent by file system structure and database queries. Also, for smaller files, you may see worse performance with RBS.

- There is a limitation of RBS storage on NAS. From the time SharePoint Server 2010 requests a blob until it receives the first byte from the NAS, no more than 20 milliseconds can pass. You can guess that this limitation means you need to invest in a very expensive NAS, which defeats the original purpose of reducing costs of storing large files by redirecting them to an external blob store. Note that DAS (direct attached storage), a.k.a. local disk, is not a supported option with SharePoint 2010 and the Filestream RBS provider.

- If you are using Remote BLOB Storage (RBS), the total volume of remote BLOB storage and metadata in the content database must not exceed 200GB.

- RBS and encryption do not mix. If your content databases are using transparent encryption, RBS is not something you can use.

- Enabling RBS on an existing database will require additional work around migrating content from SQL Server to the RBS store.

- You can set the minimum size a blob must reach before it is redirected to the external blob store by using the SPRemoteBlobStorageSettings.MinimumBlobStorageSize property.
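Before enabling RBS, it helps to estimate how much of your content a given minimum blob size would actually move out of the database. The Python sketch below does that for a list of file sizes. The function name and sample sizes are hypothetical, and I am assuming that a blob exactly at the threshold is externalized; check your provider for the exact boundary rule. The 200GB comparison is only a rough stand-in for the limit mentioned above, because it ignores metadata overhead.

```python
GB = 1024 ** 3
MB = 1024 ** 2
KB = 1024


def split_by_blob_threshold(file_sizes, minimum_blob_size):
    """Return (in_database_bytes, external_bytes) for a list of file sizes.

    Assumption: a blob whose size equals the threshold is externalized.
    """
    in_database = sum(size for size in file_sizes if size < minimum_blob_size)
    external = sum(size for size in file_sizes if size >= minimum_blob_size)
    return in_database, external


# Hypothetical sample: two small documents, one mid-size, two large media files.
sample_sizes = [200 * KB, 800 * KB, 3 * MB, 700 * MB, 2 * GB]

in_db, external = split_by_blob_threshold(sample_sizes, minimum_blob_size=1 * MB)
print("stays in the database:", in_db, "bytes")
print("goes to the blob store:", external, "bytes")
print("roughly within 200GB:", in_db + external <= 200 * GB)
```

If most of your bytes stay in the database at any sensible threshold, RBS is unlikely to be worth the extra operational complexity listed above.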

Summary

Suffice it to say, you should not take a large-files-in-SharePoint project lightly; it is not a matter of editing a text box. Usually when faced with such a project, I ask for three things:

- Average file size.

- Maximum file size and percentage of files in top 5% size.

- Total data store size.
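If the content currently lives on a file share, you can gather these numbers with a short script. The Python sketch below is one way to do it; the function names are my own, and I am interpreting the top 5% figure as the share of total storage consumed by the largest 5% of files, which is an assumption about what is most useful for planning.

```python
import os


def size_profile(sizes):
    """Summarise a list of file sizes (in bytes) for capacity planning."""
    if not sizes:
        raise ValueError("no files to profile")
    ordered = sorted(sizes, reverse=True)
    total = sum(ordered)
    # Ceiling of 5% of the file count, so at least one file is always included.
    top_count = (len(ordered) * 5 + 99) // 100
    top_total = sum(ordered[:top_count])
    return {
        "file_count": len(ordered),
        "average_bytes": total / len(ordered),
        "max_bytes": ordered[0],
        "top_5_percent_count": top_count,
        "top_5_percent_share": top_total / total if total else 0.0,
        "total_bytes": total,
    }


def sizes_under(root):
    """Collect file sizes under a directory tree, skipping unreadable files."""
    collected = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            try:
                collected.append(os.path.getsize(os.path.join(dirpath, name)))
            except OSError:
                continue
    return collected


# Hypothetical sample: nineteen tiny files and one large one.
profile = size_profile([1] * 19 + [81])
for key, value in profile.items():
    print(key, "=", value)
```

To profile a real share, pass the result of sizes_under with your own root path to size_profile. A high top 5% share tells you that a handful of large files dominate storage, which is the case where the considerations in this article matter most.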

Also important are the usage scenarios, along with the security and performance requirements. Such a project usually requires careful planning and experience. I have tried to shed some light on important considerations in this article, but this is by no means the full story. I hope you found this article useful. I’ll have more juicy stuff in the next article. Until then, happy SharePointing.