Unlocking the Promise of Data-Driven Decisions: Why Microsoft Fabric Can Succeed Where Legacy Systems Failed

by Ginger Grant | First published: May 30, 2025 - Last Updated: February 12, 2026

The Data Paradox: More Data, Less Clarity

Organizations collect more data than ever before, yet many struggle to connect and transform their data into actionable business insights. In this post, I'll discuss why companies need to do more with their data and how businesses can improve if they transform that data into something AI can use. I'll also explain why this failed in the past and why companies are more likely to succeed now.

Big software companies push AI as the solution to business problems. AI can answer many questions, find new insights, and improve productivity, but like many tools, it works better in some situations than in others. AI's capacity to make effective suggestions ultimately depends on having clean, organized, and accessible data.

Consider the contrasting outcomes of recent AI implementations. Unity Technologies suffered a huge financial loss when the data used by its AI targeting tool for marketing mobile games was inaccurate, which caused a serious reduction in revenue. Its stock took a hit, and Unity is suing the company that provided the bad data. Companies can have problems with their own data as well. GM's Cruise self-driving cars had no good response to an unexpected traffic cone, and people started disabling them by placing cones on their hoods. Cruise later lost its license to operate in San Francisco, and GM canceled its self-driving car project. Meanwhile, I see Waymo vehicles driving around nearly every day with no one in the driver's seat, so Waymo was able to make AI work. One thing is obvious: not all AI projects succeed, and there is no guarantee that AI will help your business.

One older method that has improved performance is data-driven decision-making. Data was driving company decisions long before 2003, when the book Moneyball described how the Oakland Athletics used data to select baseball players. Moneyball became a best seller by holding out the bright, shining goal that you, too, can become a winner just by analyzing data.

The key to good data-driven decision-making is connecting, cleaning, and organizing the data an organization already has into information that can support those decisions. Good data is required for nearly everything, including developing AI, and it has been shown to improve company performance. The question is, how do you make better data analysis happen? After all, organizations tend to have many different applications that generate and track data, from Salesforce to accounting software to Excel. Often, they need to join data from several of these sources to get a holistic view.
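
To make that joining step a bit more concrete, here is a minimal sketch in Python. It assumes two hypothetical exports, CRM accounts and accounting invoices, whose customer IDs are formatted differently. The field names and the `build_customer_view` helper are made up for illustration and are not part of Fabric. The sketch cleans the IDs, totals revenue per customer, joins the two sources, and reports invoices that match no CRM account instead of silently dropping them.

```python
from collections import defaultdict

# Hypothetical records exported from two systems. The two systems format
# the same customer ID differently, a common cleanup task.
crm_accounts = [
    {"customer_id": "C001", "name": "Contoso", "region": "West"},
    {"customer_id": "C002", "name": "Fabrikam", "region": "East"},
]
invoices = [
    {"customer": "c001 ", "amount": 1200.0},
    {"customer": "C001", "amount": 300.0},
    {"customer": "C002", "amount": 950.0},
    {"customer": "C003", "amount": 75.0},  # no matching CRM account
]


def normalize_id(raw: str) -> str:
    """Clean up an ID so both systems use the same key format."""
    return raw.strip().upper()


def build_customer_view(accounts, invoice_rows):
    """Join CRM accounts to invoice totals on a cleaned customer ID (hypothetical helper)."""
    totals = defaultdict(float)
    for row in invoice_rows:
        totals[normalize_id(row["customer"])] += row["amount"]

    view = []
    for account in accounts:
        key = normalize_id(account["customer_id"])
        # .get() does not add missing keys to the defaultdict
        view.append({**account, "customer_id": key, "revenue": totals.get(key, 0.0)})

    # Invoices with no matching CRM account are reported, not dropped.
    matched = {row["customer_id"] for row in view}
    unmatched = sorted(set(totals) - matched)
    return view, unmatched


view, unmatched = build_customer_view(crm_accounts, invoices)
for row in view:
    print(row["name"], row["region"], row["revenue"])
print("Unmatched invoice customers:", unmatched)
```

In Fabric you would more likely express the same join in SQL or in a Spark notebook over lakehouse tables, but the idea carries over: agree on a clean key, then flag the rows that don't match so the numbers can be reconciled.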

Past Approaches to Collecting Data and Why They Failed

For years, organizations have looked for data systems that join all their data together to provide one version of the truth. Executives have been presented with numbers from various spreadsheets, each claiming to present an accurate picture of the organization's performance, and many times the numbers did not match. Which one was accurate, and which was generated by someone trying to argue for a larger bonus?

When companies needed to purchase and maintain their own hardware, storage was expensive, and data warehousing projects often failed. Tools that were popular years ago used proprietary methods to transform data and, more often than not, were used to orchestrate complex SQL processes. Little thought was given to methodology. The processes used to move data were sometimes brittle, and when they broke, they were difficult to troubleshoot or restart. These failures soured organizations on trying new processes for a while. After all, they had tried that before. What's different now?

Microsoft Fabric Does a Better Job at Connecting Data

Microsoft set out to solve the problems that caused past data centralization efforts to fail. Part of the issue was tooling, but not all of it. Centralized data systems of the past also failed because little thought or research went into developing methodologies designed to succeed.

Microsoft's research into process and its development of new tools paid off. Companies have now, in part, settled on a common process, along with SQL and Spark (an open-source engine that processes data iteratively in memory), to transform their data so they can find one version of the truth. The race is on to finally deliver what companies have been chasing for years: making intelligent decisions using information from well-organized internal data. Visualization tools have also improved a lot in the last 10 years, making data analysis faster and more accessible because people can literally see what is going on. Microsoft went one step further and addressed storage as well, so no matter where data starts, it can end up in one place.

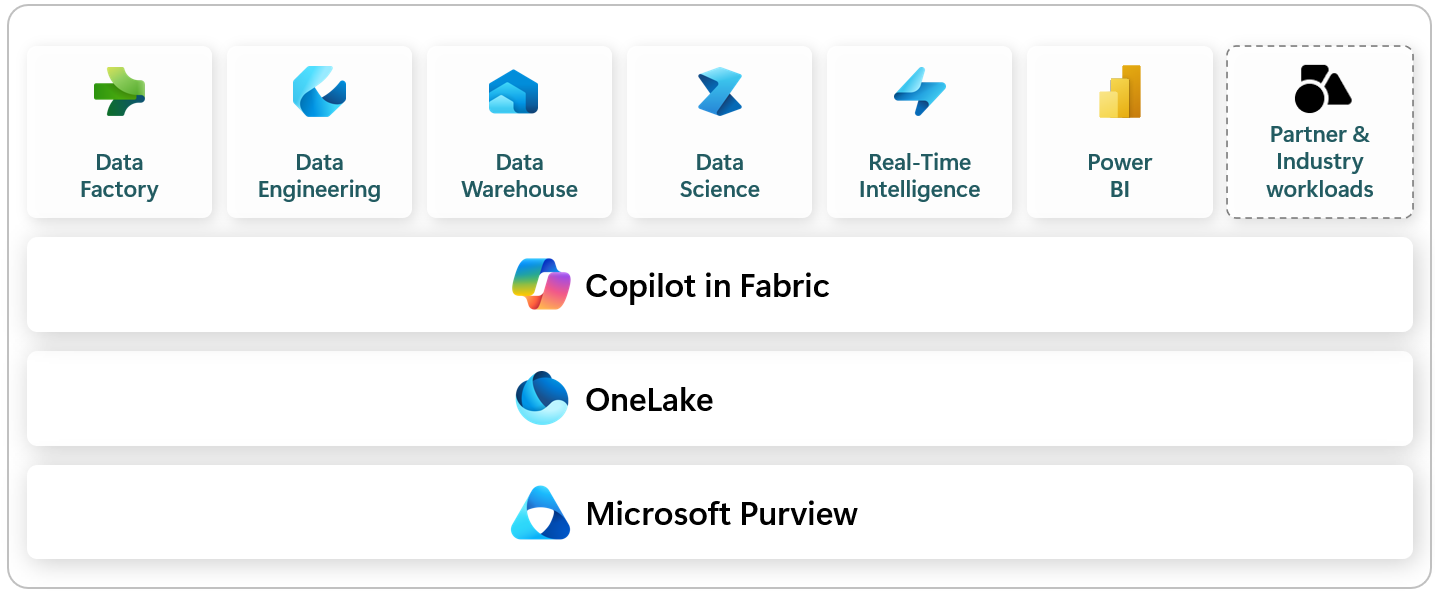

Microsoft combined products, data storage, and process research with the power of its Azure cloud and made Microsoft Fabric generally available in November 2023.

Fabric is not a single product; it has many components, including operational pipelines, lakehouses, warehouses, and notebooks, to name a few. Fabric brings these together to finally answer that elusive question of what your data can tell you about your business.

Microsoft knows that many organizations have staff who understand the data used in the business well but may not have strong technical skills. Fabric includes many low-code tools so these users can work in Fabric without being very technical. If your IT staff want to code the data transformation and analysis using the fastest tools available, they can do that, too. At the end of the day, Microsoft Fabric lets managers see their data in pretty Power BI reports that update dynamically as new data becomes available. And, of course, people can still access the data in Microsoft Excel, only now that data is secured and refreshed automatically.

Microsoft Fabric Embraces Open Standards and Interoperability

Microsoft has made a big turnaround from its past. Not only has Microsoft embraced open-source languages and libraries like Python and PySpark, but it is also actively working with other providers so that data stored in their products can be used within Fabric. Does your company have data stored in AWS or Google Cloud? No problem: you can access it in Fabric with a link, which Microsoft calls a shortcut. Do you already have a data warehouse in Snowflake? No problem: you can access it in Fabric too, and even write to it so the data is immediately available within Snowflake, using the Apache Iceberg table format. Microsoft has even announced plans to include data from its database rival, Oracle, via a shortcut, so companies can keep using Oracle and still access their data in Fabric.

Describing everything you can do with Fabric will take a while. I'll write future articles that detail what you can do and, more importantly, make the case for why your organization should use Fabric as its go-to source for information, not just data.