When Database Alternatives Make Business Sense: A Microsoft Fabric Perspective

by Ginger Grant | First published: July 31, 2025 - Last Updated: February 12, 2026

You've likely built a career around database-centric architecture. The database landscape is shifting dramatically. Where databases once handled gigs of transactional data, your teams might now be wrestling with terabytes of mixed structured and unstructured data. Past solutions may be showing strain and might require more maintenance as they handle diverse data sources. You might wonder whether databases are still the right foundation for your organization's changing data needs.

Microsoft Fabric

Microsoft Fabric offers a shift from database-centric to analytics-centric data architecture that might be better for your organization's data. Within Microsoft Fabric, you have the option to take advantage of data structures designed for analytics, lakehouses, and warehouses with a combination of advanced data compression and open-source data storage formats by using a data lakehouse or a warehouse. Both lakehouses and warehouses can store petabytes of data with minimal maintenance required. As the data is stored in the cloud, you don't need to worry about backup, and the data is logged, so that you automatically have backups. With advances in storage applications that Microsoft Fabric has implemented, you may find that you don't need to store data using a database.

What is replacing the database as the place to store data? An open-source file system called Parquet was designed to store and process data which was created for Hadoop and enhanced by Databricks. The files are compressed by columns, in a file structure called parquet. Databricks improves upon this file structure and adds the ability to log data changes and add a single index on a file. Databricks' improved version is called delta, which adds logging and commands to Parquet files. The improvements made queries work even better when using Delta Parquet. This is the file storage that Microsoft is using in Fabric. All the data is stored as Delta parquet files. This file structure was designed to use different languages to update the data and query the data. Within Fabric, you can use T-SQL—the language Microsoft uses for SQL Server—to query Delta parquet files. In Fabric, storage has been separated from compute, and you have the ability to purchase as much storage as you need to give you petabyte-scale data lakehouses or warehouses if you want them. The storage solution technology is optimized for analysis.

Access and Analysis

One of Fabric's most innovative features is its ability to create AI-powered chatbots that can answer questions about your data in natural language. Rather than writing complex SQL queries, business users can simply ask questions like ‘What were our top-selling products last quarter?’ and receive immediate answers from their data. Microsoft wanted to support the new Fabric data structures of lakehouses and warehouses as SQL Server, meaning that the data can be accessed to be optimally used in Power BI reports, Excel, or using T-SQL queries or stored procedures. Microsoft even made sure the database application used for SQL Server, SQL Server Management Studio, can connect to Fabric warehouses and lakehouses so that people can use the database tools they have used for years in SQL Server. This means your teams can store petabytes of data in open formats (Delta Parquet) while maintaining familiar interfaces (T-SQL, SSMS) that don't require a lot of retraining. For developers who want to organize the database, Spark is also supported so that data can be manipulated by Python, R, or Spark SQL. As the data is stored in Delta Parquet files, it cannot be tuned with many indexes like databases. Queries perform well because the data is compressed and the compute is scaled up or down to meet the needs of the users.

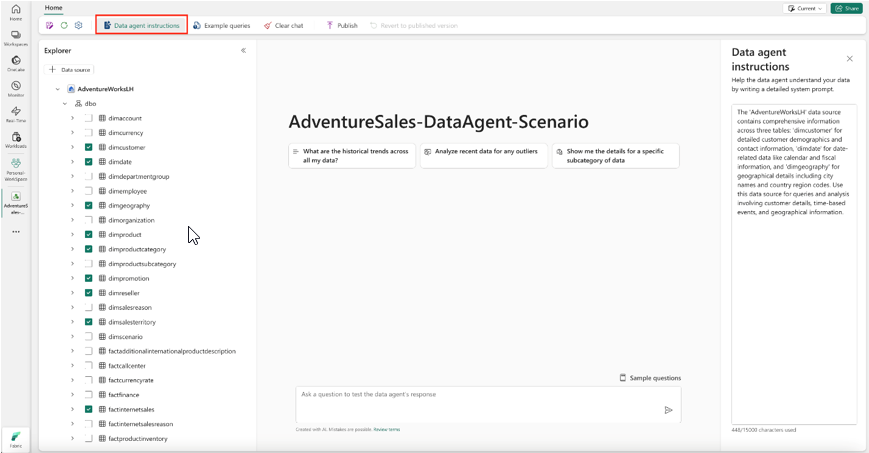

You could also create a large language model so that you can ask questions of your data with minimal effort. Clicking on the items you want will include them in the agent. After that, you can start using the chat interface to ask questions about your data. If you find hallucinations or errors in the questions that AI is returning, you can fix them by providing answers to common questions.

The ability to create your own chatbot from your data is a feature that provides access to data that is stored inside of Fabric in a lakehouse or a warehouse.

If you are doing analytics, storing data outside of a database makes economic sense too, as you can create dev, test, and production versions inside of Fabric as part of the existing license structure, whereas if you create a database, you have to pay for each instance.

The Case for a Database

There are reasons other than analysis where you may want to store your data. If it is important to read and write data as fast as possible, then data storage optimized for analysis is not the right choice. Applications are the most common scenario where the speed of reads and writes matters. Data storage designed for analysis does not find the ability to write fast to be a criteria, but application developers do not want to create a situation where people have to wait for a screen because the data has to write. If you are not doing analysis but instead are trying to insert data from an application, the tuning for analytics logic used in the lakehouse and warehouse may not be the optimal solution for entering data and supporting the application. A database may be a better choice, as you may need to tune the data for entry.

Microsoft has included a SQL Server database that can be added to Fabric for applications requiring faster reads and writes. To reduce maintenance overhead, they've incorporated AI-powered query tuning that automatically optimizes performance in real-time. This database is designed to be easy to monitor, and users don't have to navigate complex additional pricing models. The integrated SQL Server provides a framework for applications that need a database to operate.

Sorting and analyzing data is an important part of understanding everything in an organization. While you can continue to use a database, storing the data in files may prove to be a cheaper solution, which is easier to manage as the storage is optimized for analysis.