With ever-larger Web applications being built to service very large numbers of simultaneous users pounding away at Web sites, the issue of scaling applications beyond a single machine is important for Web application developers and network administrators.

While hardware capabilities seem to be increasing to the point that high-powered single machines can handle tremendous loads, there will always be those apps that push beyond a single machine. In addition, for many administrators and IT planners, it's often not good enough to say that a server can handle x number of users. They want redundancy, backup and overflow support, so a Web server or hardware failure or an unexpected surge of visitors doesn't cripple the corporate Web site. In this article, Rick discusses the issues of scalability and how load balancing services can help provide redundancy and extra horsepower to large Web sites.

When building Web applications that have the potential to ‘go big’, scalability should be at the top of the list of features. There are many things that can be done to scale a Web application, starting with a smart application design that maximizes the hardware it runs on, proper tuning, and smart site layouts that minimize traffic. With today's multi-processor hardware and Windows 2000's ability to use up to 16 processors (in the not-yet-released DataCenter Server; 8 in Advanced Server), a single machine can serve a tremendous number of backend hits. However, some applications eventually reach a point where a single machine is just not enough, regardless of how much hardware you throw at it. Applications of this scope also need backup and redundancy, which require multiple machines for peace of mind. In this article, I'll discuss one solution to scalability, using the Microsoft Network Load Balancing Service that comes with Windows 2000 Advanced Server and above. This built-in tool provides a simple mechanism for spreading TCP/IP traffic over multiple machines.

Windows 2000 includes a number of very different features for load balancing:

- Cluster Service - The Cluster Service is used to cluster application servers, primarily to provide redundant backups (failover) rather than to spread load. You see Cluster Services implemented for SQL Server to provide multi-machine failover support.

- COM+ Load Balancing - COM+ Load Balancing allows COM components deployed as MTS components to be installed on multiple machines, balanced through a central server that decides which machine will invoke each component. This feature has been discussed a bit in the technical computer press, but was pulled at the last minute before Windows 2000 shipped. It's now scheduled to be released with Data Center Server later this year.

- Network Load Balancing - The focus of this article is Network Load Balancing (NLB), which provides IP-based load balancing for services such as HTTP/HTTPS, FTP, SMTP, and so on. In this scenario, a single ‘virtual’ IP address handles incoming network traffic and balances it across a cluster of machines.

In the past, there have been a number of products that have provided IP-based load balancing, such as Resonate Central Dispatch, F5's Big IP, and Cisco's Local Redirector. In addition, there are pure hardware solutions, such as routers that provide round robin DNS services. Router solutions tend to be ‘dumb’ in that they simply change IP addresses for any hit that comes in. Software tools tend to be smart, using a machine polling mechanism to see which servers are available and how loaded they are. Some newer routers provide both the routing hardware as well as load balancing features in their firmware. All of these solutions work well and have proven themselves in production environments. Unfortunately, many of them are very expensive and hard to install and administer.

Network Load Balancing in Windows 2000 Advanced Server (and higher) is the new kid on the block, and promises to bring down the cost of load balancing into the affordable range for companies in the non-Fortune 500 set. Keep in mind, though, that the Windows Network Load Balancing service doesn't provide all of the bells and whistles of the other tools. For example, Resonate provides dynamic rebalancing of hits based on server load, live graphical status reports and administration, and routing of URLs to specific machines. Like many Windows services, the Network Load Balancing Service is bare-bones, but it has the key features needed to take advantage of load balancing quickly and inexpensively. Many of the older tools were very expensive because they fall squarely into the Enterprise domain, where big dollars are usually paid for system management software. Many of these run into tens of thousands of dollars for only a few load balanced machines. On the other hand, the Network Load Balancing Service ships with Windows 2000 Advanced Server and above, which makes it affordable for smaller organizations.

Farming the Web

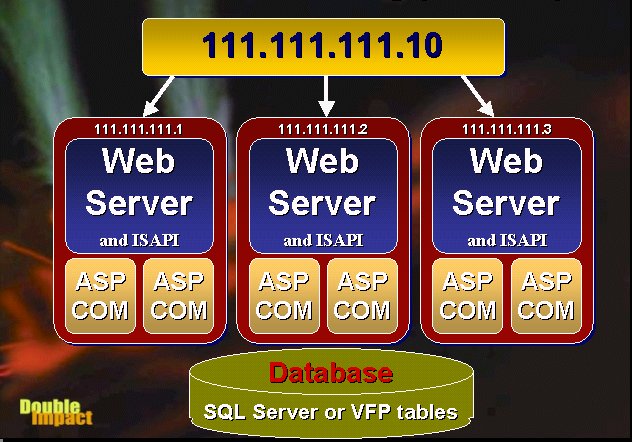

The concept behind Network Load Balancing is simple: You have a ‘virtual’ IP address that is configured on all the servers that are participating in the load balancing ‘cluster’ (a loose term that's unrelated to the Cluster Service mentioned above). When a request is made on this virtual IP, the NLB network driver on every cluster machine sees it, and exactly one of the machines accepts and handles it, based on rules that you can configure for each machine; the others discard it. Load balancing is provided at the protocol level, which allows any TCP/IP-based service to be included. Network Load Balancing is Microsoft's term for this technology; it is also known as a Web Server Farm, or IP Dispatching.

Network Load Balancing (NLB) facilitates the creation of a Web Server Farm. A Web Server Farm is a redundant cluster of several Web servers serving a single IP address. The most common scenario is that each of the servers is identically configured, running the Web server and local Web applications. With IIS, this might be custom ISAPI extensions or applications built with Active Server Pages. The key is redundancy in addition to load balancing. If any machine in the cluster goes down, the cluster re-balances the incoming requests on the virtual IP across the remaining servers. The servers in the cluster need to be able to communicate with each other to exchange information about their loads, and to allow basic checks to see if a server is down.
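To make the redundancy idea concrete, here is a toy model of a farm behind one virtual IP. It is a sketch under simplifying assumptions: the class and method names are hypothetical, and requests are handed out round-robin among live hosts, which is not the filtering algorithm NLB actually uses. It only shows the behavior described above: when a node goes down, the remaining nodes absorb its share.

```python
from itertools import count


class ServerFarm:
    """Toy model of a Web farm behind one virtual IP address.

    Hypothetical illustration only: requests are handed out round-robin to
    the hosts that are currently up. NLB itself uses its own filtering
    algorithm, not round-robin.
    """

    def __init__(self, hosts):
        self.hosts = list(hosts)
        self.down = set()
        self._counter = count()

    def mark_down(self, host):
        """Simulate a node failing or being taken offline."""
        self.down.add(host)

    def mark_up(self, host):
        """Simulate a node rejoining the cluster."""
        self.down.discard(host)

    def dispatch(self):
        """Return the host that should serve the next request."""
        alive = [h for h in self.hosts if h not in self.down]
        if not alive:
            raise RuntimeError("no servers available in the cluster")
        return alive[next(self._counter) % len(alive)]


farm = ServerFarm(["111.111.111.1", "111.111.111.2"])
print([farm.dispatch() for _ in range(4)])  # alternates between the two hosts
farm.mark_down("111.111.111.2")
print([farm.dispatch() for _ in range(3)])  # every request now goes to .1
```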

Each server in the cluster is self-contained, which means it should be able to function without any other server in the cluster, with the exception of the database (which is not part of the NLB cluster). This means that each server must be configured separately to run the Web server as well as any needed Web server applications. If you're running a static site, all HTML files and images must be replicated across servers. If you are using ASP, those ASP pages must also be replicated. Source control programs like Visual SourceSafe can make this process relatively painless by allowing you to deploy updated files of a project (in Visual InterDev or FrontPage, for example) to multiple locations simultaneously.

If you have COM components as part of your Web application, things get more complicated, since the COM objects must be installed and configured on each of the servers. Updating them isn't as simple as copying files: it also requires re-registering the servers, plus potentially moving additional support files (DLLs, configuration files, and non-SQL data files). If you're accessing databases, you also need to configure the appropriate DSNs to allow each server to access the data source. In addition, if you're using in-process components, you'll have to shut down the Web server to unload them. You'll likely want to set up some scripts or batch files to perform these tasks automatically, pulling updated files from a central deployment server. You can use the Windows Scripting Host (.vbs or .js files) along with the IIS Admin objects to automate much of this. This is often tricky and can be a major job, especially if you have a large number of cluster nodes and updates are frequent. Strict operational rules are often required to make this process reliable. In general, updates are applied one machine at a time so that the Web site can continue to run while the changes are made: one machine is taken down, updated with the latest version of the application, and put back online, and then the next machine in the cluster receives the same treatment.
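The one-machine-at-a-time update process can be sketched as a simple loop. This is a hypothetical outline, not a deployment tool: `rolling_update` and the caller-supplied `update_host` function are illustrative names, and the actual work of copying files, re-registering COM servers and restarting IIS would live inside `update_host`. The point it shows is that at every step all other nodes are still serving traffic.

```python
def rolling_update(hosts, update_host):
    """Update a Web farm one node at a time so the site stays online.

    `update_host` is a hypothetical, caller-supplied function that does the
    real work for one machine (copy files, re-register COM servers, restart
    IIS, and so on). Returns a log of (updated_host, hosts_still_serving).
    """
    online = set(hosts)
    log = []
    for host in hosts:
        online.discard(host)                # take this node out of the cluster
        log.append((host, sorted(online)))  # the rest keep serving requests
        update_host(host)                   # deploy the new version
        online.add(host)                    # put the updated node back online
    return log


for host, serving in rolling_update(["web1", "web2", "web3"], lambda h: None):
    print(f"updating {host}; still serving: {', '.join(serving)}")
```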

Since multiple redundant machines are involved in a cluster, you'll want to have your data in a central location that can be accessed from all the cluster machines. It's likely that you will use a full client/server database like SQL Server in a Web farm environment, but you can also use file-based data access like Visual FoxPro tables if those tables are kept in a central location accessed over a LAN connection.

Note that in heavy load balancing scenarios running a SQL backend, the database can become the performance bottleneck! You need to think about what happens when you overload the database, which is running on a single box. Max out that box, and you have problems that are much harder to address than Web load balancing. At that point, you need to think about splitting your databases so that some data can be written to other machines. For redundancy, you can use the Microsoft Cluster Service to fail over to a backup server if the primary server fails.

Network Load Balancing is very efficient: each machine added to the cluster contributes close to its full standalone capacity, so two machines deliver nearly twice the throughput of one. There is some overhead involved, but I didn't notice it; my performance tests with Microsoft's Web Application Stress tool showed that each machine added close to 100% of its standalone performance to the cluster.

You may notice that with this level of redundancy, increasing your load balancing capability becomes simply a matter of adding additional machines to the cluster, giving you practically unlimited application scalability (as long as the database allows it).
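The near-linear scaling and the database ceiling can be combined into a back-of-the-envelope estimate. This is an illustrative model with assumed numbers, not a measurement: the function name, the `efficiency` factor (how much of its standalone capacity each added node contributes) and the `db_limit` cap are all hypothetical parameters you would fill in from your own stress tests.

```python
def estimated_capacity(nodes, users_per_node, efficiency=0.95, db_limit=None):
    """Rough estimate of how many users an NLB farm can serve.

    Illustrative model with assumed numbers: the first node contributes its
    full standalone capacity, each additional node contributes `efficiency`
    of it, and the shared database caps the total (db_limit, if known).
    """
    web_capacity = users_per_node * (1 + (nodes - 1) * efficiency)
    if db_limit is None:
        return web_capacity
    return min(web_capacity, db_limit)


print(estimated_capacity(2, 200, efficiency=1.0))                # 400.0
print(estimated_capacity(6, 200, efficiency=1.0, db_limit=500))  # 500
```

The second call shows the caveat from the text: once the database limit is reached, adding Web nodes no longer raises the total.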

Getting started with Network Load Balancing

Network load balancing in Windows 2000 is fairly easy to set up and run, assuming that you can manage to decipher the horrible documentation in the online help. In this section, I'll take you through a configuration scenario that hopefully will make your installation and configuration much easier, by highlighting important aspects of installation and startup.

Let's start by discussing what you need in order to use NLB. You'll need at least two machines running Windows 2000 Advanced Server or better, with at least one network card in each machine. You can also use multiple network cards: one for the cluster communication and one for the dedicated IP address for all directly accessed resources. For testing, it's a good idea to have another machine that can run a Web stress testing tool to let you see how the cluster works under load.

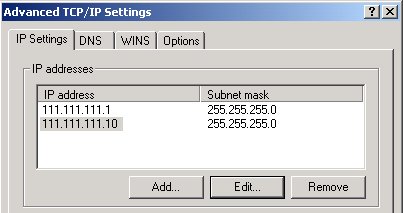

I'm going to use two machines here to demonstrate how to set up and run NLB. Assume the IP addresses for these machines are 111.111.111**.1** and 111.111.111**.2**. I'm going to set up NLB on a ‘virtual IP’ (NLB calls this the ‘primary IP address’) at 111.111.111**.10**. In order to set up NLB, every machine in the cluster must configure this IP address in addition to its dedicated machine IP address(es). To do so, right-click My Network Places on the desktop and click Properties, then open the properties of your Local Area Connection and select Internet Protocol (TCP/IP). The machine must have at least one fixed IP address in order for NLB to work: DHCP clients with automatically assigned addresses will not work, so if you're using DHCP, make sure to add at least one static IP address to your machine configuration. Once you have a primary IP address for your machine, click the Advanced button and add 111.111.111.10 as a new IP address. Make sure that the subnet masks are the same on all of these IP addresses (255.255.255.0). Figure 2 shows what you should see in the IP display dialog.
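Before touching the dialogs, it can help to sanity-check the planned addresses. The sketch below uses Python's standard `ipaddress` module; the function name is hypothetical and the checks are only the rules stated above (same subnet mask everywhere, a distinct virtual IP), not a complete NLB validation.

```python
import ipaddress


def check_cluster_addresses(dedicated_ips, virtual_ip, mask="255.255.255.0"):
    """Sanity-check a planned NLB address layout before configuring it.

    Verifies that the virtual IP and every dedicated IP sit in the same
    subnet (same mask on every address) and that no address is reused.
    Returns the shared subnet, or raises ValueError describing the problem.
    """
    subnet = ipaddress.ip_interface(f"{virtual_ip}/{mask}").network
    if len(set(dedicated_ips)) != len(dedicated_ips):
        raise ValueError("dedicated IP addresses must be unique")
    if virtual_ip in dedicated_ips:
        raise ValueError("the virtual IP must differ from every dedicated IP")
    for ip in dedicated_ips:
        if ipaddress.ip_address(ip) not in subnet:
            raise ValueError(f"{ip} is not in the cluster subnet {subnet}")
    return subnet


print(check_cluster_addresses(["111.111.111.1", "111.111.111.2"], "111.111.111.10"))
# prints 111.111.111.0/24
```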

Note that even though 111.111.111.10 is a virtual IP address, you can tie domain names to it with DNS. So, your master domain name such as www.yoursite.com would point to this virtual IP address in the DNS record.

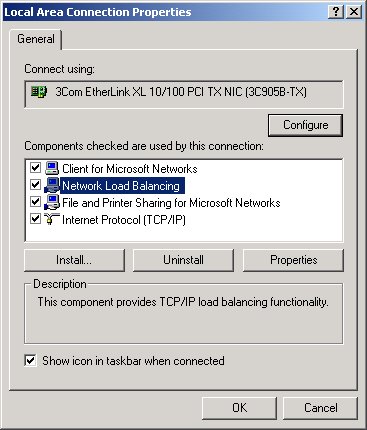

You now have an IP address that the virtual IP can bind to. Go back out to the Local Area Connection Properties and notice the Network Load Balancing option in the list of network services. Check the checkbox and click the entry to bring up the service properties.

Cluster parameters

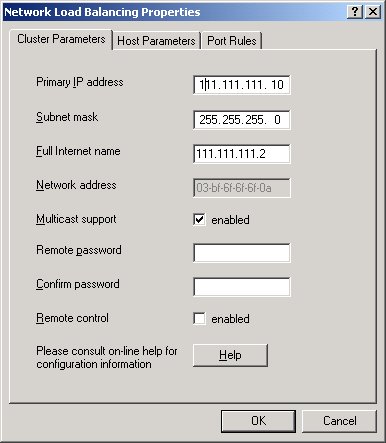

The first page of this multi-tab dialog (see Figure 4) shows you the cluster parameters. Here you enter information about the cluster, such as the virtual IP address, subnet mask, and whether to use multicast messages. There are also additional options that allow you to control the cluster remotely via the command line tools that the service provides. Note that this applet has a number of user interface bugs and quirks that make using it a little less than optimal, so be sure you check the values before moving on to the next page or clicking OK. In addition, the documentation and help file are very scattered, making it hard to find helpful information. The best help is the ‘?’ help from the dialog's control box. Drop the question mark onto individual fields for the best documentation on individual items in each of the dialogs.

NLB calls the virtual IP the Primary IP address, which is very confusing to say the least, since primary IP can mean primary IP of your system or of the cluster. Virtual IP is the term most commonly used in other load balancing packages, and I think it describes the concept much better. This Primary IP is the cluster's IP address, which will be used to access all the sites in the cluster. Public DNS entries such as www.yoursite.com will be bound to this ‘virtual’ IP address. Set this IP address to the new IP we added in Figure 2 for all of our cluster machines (in this case 111.111.111.10) and adjust the subnet mask to 255.255.255.0.

The full Internet name is used only for remote administration and is used as an identifier for the machine. If you're using a single network adapter you'll want to enable multicast support to allow the network card to handle traffic both for the cluster and dedicated IP address.

Host Parameters

The Host parameters (see Figure 5) configure the cluster machine's native IP settings and affect how the cluster loads. The Dedicated IP Address is the main physical IP address of the machine, used to access the machine without going through the cluster. This IP address can be accessed directly, or NLB can use it for routing virtual IP traffic. In other words, you can access the machine via 111.111.111.1 or 111.111.111.10. Only the latter runs through the Load Balancing Cluster.

Another important parameter is the Priority setting, which doubles as a unique ID for each server in the cluster. Each server must have a unique ID, and the server with the lowest priority value (which is really the highest priority!) handles all of the default network traffic that is not covered by the port rules, such as files passed between servers and standard network messages that don't fall within the port ranges of the rules. The priority doesn't affect traffic on the configured ports, which is distributed among the cluster machines according to the port rules.

If the server with the lowest ID fails, the one with the next-lowest ID (the next-highest priority) takes over the default traffic. Note that actual load balancing percentages are configured separately in the Port Rules tab and are not affected by the priority setting. The important thing is that each server gets a unique ID, since the cluster manager reports on the servers using the priority ID (for example, when the cluster converges on startup).
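The priority rule reduces to "lowest unique ID among live hosts wins". Here is a minimal sketch of that rule as the text describes it; the function and host names are hypothetical, and this models the behavior rather than reproducing NLB's internals.

```python
def default_host(priorities, alive):
    """Pick the host that handles traffic not covered by any port rule.

    `priorities` maps host name -> priority ID (1 is the highest priority);
    `alive` is the set of hosts currently in the cluster. Models the rule
    described above: the live host with the lowest ID wins, and if it fails
    the next-lowest ID takes over.
    """
    ids = list(priorities.values())
    if len(ids) != len(set(ids)):
        raise ValueError("every host needs a unique priority ID")
    live = {host: pid for host, pid in priorities.items() if host in alive}
    if not live:
        return None
    return min(live, key=live.get)


priorities = {"web1": 1, "web2": 2, "web3": 3}
print(default_host(priorities, {"web1", "web2", "web3"}))  # web1
print(default_host(priorities, {"web2", "web3"}))          # web2 (web1 failed)
```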

You can dynamically add and delete servers from the cluster with the Active flag, keeping the other parameters of the server intact.

Port Rules

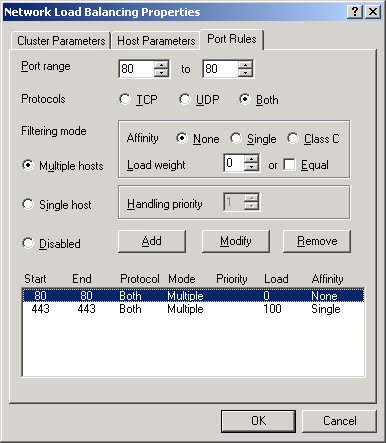

Port rules, configured on each server, determine how the cluster balances the load among machines, with rules for percentage-based balancing as well as for sending specific ports to specific machines.

Each machine can be configured to service a specific range of TCP/UDP ports. By default, NLB serves all ports, and this default setting is probably sufficient if you're servicing specific traffic like Web access. Web traffic typically comes in on port 80 for standard HTTP, with secure HTTPS coming in on port 443. The port range is handy, because it lets you indirectly control which protocols are load balanced. It can also route all secure traffic to a specific server, so that you have to install a secure certificate on only one of the machines.

In addition to limiting ports, you can also configure how the cluster affinity works. The affinity setting controls whether incoming requests from a client are always bound to the same cluster node. There are three types of affinity settings:

- None - Setting the affinity to None means the next available node in the cluster will service the hit. Requests are never routed to a specific machine in the cluster.

- Single - Every request from a particular client IP address is routed to the same server, providing a ‘sticky’ server.

- Class C - Like Single, but all clients within the same Class C address range (the same first three octets of the IP address) are routed to the same server on every hit.
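The three affinity modes can be illustrated with a small host-picking function. This is a sketch, not NLB's implementation: NLB uses its own internal hashing, the SHA-256 hash here is an arbitrary stand-in, and "none" is modeled as a random choice for simplicity. What it does show correctly is which part of the client address decides the server in each mode.

```python
import hashlib
import ipaddress
import random


def pick_host(client_ip, hosts, affinity="none"):
    """Choose a cluster host for a request under a given affinity mode.

    Illustrative only; NLB uses its own internal hashing, not this code.
    - "none":   any host may serve any request (modeled as random here)
    - "single": hash of the full client IP -> same host every time
    - "classc": hash of the client's /24 network -> the whole Class C
                range sticks to one host
    """
    if affinity == "none":
        return random.choice(hosts)
    if affinity == "single":
        key = client_ip
    elif affinity == "classc":
        key = str(ipaddress.ip_network(f"{client_ip}/24", strict=False))
    else:
        raise ValueError(f"unknown affinity mode: {affinity}")
    digest = hashlib.sha256(key.encode()).digest()
    return hosts[int.from_bytes(digest[:4], "big") % len(hosts)]


hosts = ["web1", "web2", "web3"]
# Clients 24.5.6.7 and 24.5.6.200 share a Class C range, so they stick together.
print(pick_host("24.5.6.7", hosts, "classc") == pick_host("24.5.6.200", hosts, "classc"))  # True
```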

Affinity may be required for specific state-keeping requirements, in order to track users through the site. For example, Active Server Page Sessions are machine specific, and to use ASP Sessions, users must be hitting the same server each time. Affinity can also help performance in some scenarios where users are tracked through a site, because of the inherent caching that occurs in the Web applications. For example, SSL/HTTPS connections are much slower if they are always rebuilt from scratch rather than caching some of the certificate information provided to the clients.

On the other hand, the use of affinity can cause a bit of overhead in the routing of requests, because the IP routing manager may have to wait for the machine in question to become available if busy. Affinity is useful when you have stateful operations that benefit from caching. In very high volume environments, scalability will be better without affinity.

Each server has to be assigned a handling priority (load weight), which is given as a percentage. The values typically add up to 100% for the entire cluster, but this is not a requirement, since each server's share of the traffic is its weight relative to the total of all weights. Optionally you can specify Equal, which splits the load evenly between all of the servers in the cluster.
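The arithmetic behind the percentages is straightforward. The sketch below assumes, as described above, that each host's share is its weight divided by the sum of all weights; the function names are hypothetical, and the host names echo the test machines used later in this article.

```python
def traffic_shares(load_weights):
    """Fraction of load-balanced traffic each host receives.

    `load_weights` maps host -> weight (0-100). Assumes each host's share is
    its weight relative to the total, so weights need not sum to 100.
    """
    total = sum(load_weights.values())
    if total <= 0:
        raise ValueError("at least one host needs a positive load weight")
    return {host: weight / total for host, weight in load_weights.items()}


def equal_weights(hosts):
    """Model the 'Equal' option: every host gets the same weight."""
    return {host: 1 for host in hosts}


print(traffic_shares({"PII400": 70, "P266": 30}))  # {'PII400': 0.7, 'P266': 0.3}
print(traffic_shares({"PII400": 35, "P266": 15}))  # {'PII400': 0.7, 'P266': 0.3}
```

The second call shows why the weights don't have to add up to 100: only their ratio matters.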

The typical scenario for load balancing is that you configure each server in the cluster with a single rule, which will be identical, except for the port rule percentages. But, you can create multiple rules and have each handled by any combination of machines in the cluster. By default, rules are set up for multiple hosts, which means that the rule is handled by multiple machines in the cluster. You can also configure a rule to be served by a single host or group of single hosts. For example, you could set up a server to be part of the general cluster, but also configure it to be the single host in the cluster to serve SSL requests on port 443.

It's very important, however, that you set up every server with exactly the same port rules - only varying the load factor - or your cluster will not work! For example, Figure 6 shows port rules for port 80 and 443, since I want to have this server service all of the SSL requests. The other machine in the cluster should not service port 443, but you still need to configure the port rule for port 443, with a load factor of 0%. This was a bit confusing at first and is not described correctly in the documentation, so make sure you remember this when setting up your own servers.

This can cause serious headaches when you're testing - I suggest that you start out by setting up your servers with the exact same port rules, to make sure that everything works before experimenting with custom port rules.
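Because the cluster fails silently when port rules differ, a quick consistency check over your planned rules can save some trial and error. The sketch below is hypothetical: the `PortRule` fields are a simplified model of the dialog settings, not NLB's registry format. It flags any host whose rules differ from the first host's rules in anything other than the load weight, using the 0% port 443 example from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PortRule:
    """One port rule as entered on a host (field names are hypothetical)."""
    start_port: int
    end_port: int
    protocol: str     # "TCP", "UDP" or "Both"
    mode: str         # "multiple", "single" or "disabled"
    affinity: str     # "none", "single" or "classc"
    load_weight: int  # the only value that may differ from host to host


def rule_signature(rule):
    """Everything about a rule except the per-host load weight."""
    return (rule.start_port, rule.end_port, rule.protocol, rule.mode, rule.affinity)


def mismatched_hosts(rules_by_host):
    """Return hosts whose port rules differ from the first host's rules.

    Load weights are ignored; every other field must match exactly.
    """
    hosts = list(rules_by_host)
    reference = sorted(rule_signature(r) for r in rules_by_host[hosts[0]])
    return [host for host in hosts[1:]
            if sorted(rule_signature(r) for r in rules_by_host[host]) != reference]


# Port 443 is served only by web1, but web2 still needs the rule with weight 0.
rules = {
    "web1": [PortRule(80, 80, "TCP", "multiple", "none", 50),
             PortRule(443, 443, "TCP", "multiple", "single", 100)],
    "web2": [PortRule(80, 80, "TCP", "multiple", "none", 50),
             PortRule(443, 443, "TCP", "multiple", "single", 0)],
    "web3": [PortRule(80, 80, "TCP", "multiple", "none", 50)],  # missing the 443 rule
}
print(mismatched_hosts(rules))  # ['web3']
```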

Administering the Service

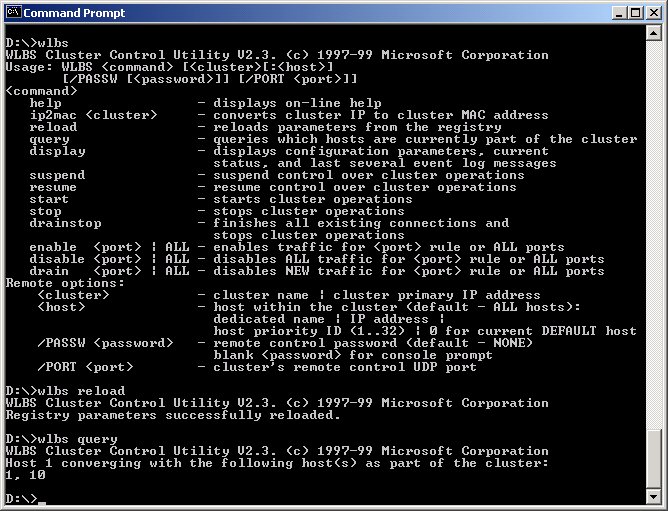

The Network Load Balancing service also comes with a command line utility: WLBS.exe. This utility allows you to view and refresh settings made in the dialogs above in the live cluster. The WLBS program lets you refresh the settings of the cluster and individual machines, view current settings, get machines to converge, and administer settings on machines remotely. To get an idea of the commands available in this archaic interface, simply run WLBS from the System Command Prompt to see all of the options.

The two most important commands to remember are Query and Reload. Reload takes the settings made in the dialogs and immediately reloads them from the registry into the currently running cluster server. The documentation states that each cluster machine will occasionally refresh the new settings automatically, but in my tests this was not happening, so I used a manual Reload.

Once you've reloaded settings, you have to re-converge the cluster, which is fancy talk for making the cluster see your changes. After reloading, I noticed that the cluster often stopped dead. Issuing a WLBS Query re-converged the cluster and fired it back up. Query also shows you all the Priority IDs of the servers currently in the pool. If you disconnect one of the servers, you'll see its ID removed within 10 seconds or so. Put it back in, and it will show up and again become part of the pool. This is useful for troubleshooting and for seeing which servers are available. You can run this on any of the cluster server machines. In fact, if you have problems, you should do this to make sure that all of the machines can ‘see’ each other over the network (although this won't guarantee that the cluster node is operational).

Note that even if the cluster is not operational (for example if your port rules don't match), you will see the cluster with the query command. There apparently is no way to see exactly whether the cluster is functional, and no error information is provided, so getting the service up and running can take some trial and error.

Putting it all together

Once you've configured your servers in the cluster, it's time to see how the service works.

For my first setup, I configured two servers with no affinity and equal load weight. One of the machines is a PII 400, while the other is an old notebook P266. With equal balancing, both machines should get the same amount of traffic, even though the older box is much less capable.

I also set up a separate machine running Microsoft's Web Application Stress Tool (WAST - for more info see “Load Testing Web Applications using Microsoft's Web Application Stress Tool”) to simulate a large number of users hitting the Web store application on my development servers. The app is running a Visual FoxPro backend (using several pooled COM server instances on each machine) against a SQL Server database. A separate machine makes this process more realistic, but you could also run this tool on one of the cluster nodes, if necessary. I configured WAST for a continuous load of 300 simultaneous users without any delays between requests. Since there are no delays between requests, this is a load much closer to 1500-2000 actual users, since users typically take some time between requests before moving on to the next link. In my previous tests, the breaking point of the application was right around the 200-user mark on the single PII 400. I figure that with the load balancing, I should be able to get a 50% gain, or around 300 users total.
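The estimate that 300 zero-delay virtual users correspond to many more real users follows from matching request rates. Here is a sketch of that arithmetic; the function name and the example timings (1.5 s per response, 6 s of think time) are assumptions for illustration, not measured values. Under this model, the 1500 to 2000 user range corresponds to think times of roughly 4 to 5.7 times the response time.

```python
def equivalent_real_users(virtual_users, response_time_s, think_time_s):
    """Approximate real-user load represented by zero-delay virtual users.

    A zero-delay virtual user sends one request every response_time_s; a
    real user also pauses think_time_s between clicks. Equating the two
    request rates gives: real = virtual * (response + think) / response.
    """
    return virtual_users * (response_time_s + think_time_s) / response_time_s


# Assumed timings: 1.5 s per response, 6 s of think time between clicks.
print(equivalent_real_users(300, 1.5, 6.0))  # 1500.0
```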

I ran the test for an hour to get an idea about load and stability of the service. The results were as expected, and I was able to service the 300 users easily. The old P266 machine was loaded very heavily and running very close to 100%, while the PII400 was running at just below 50% load. I then rebalanced the handling priorities to 70% on the PII400 and 30% on the P266, and both machines then ran at close to 80% load. I was able to actually bump the user count to 350 and still get good performance, with dynamic results returning in under 1.5 seconds.

When I started adding additional users, I noticed that the CPU load on the Web servers wasn't getting any worse, but performance was slowing down to 2.5 - 4 seconds per request. After some checking, I found that the SQL Server backend was starting to lag under the load: the SQL box was running very close to 90% load continuously, mostly in the SQL Server process. So, while the Web servers were pounding away on the SQL Server, they sat idle (not taking up any CPU time) while waiting for the queries to return. Hence the slower responses, but still lighter CPU usage on the Web servers.

My test bed obviously doesn't consist of high-end server machines. You can expect much better performance on faster hardware, both for the actual Web application and VFP backend, as well as the SQL Server. But, this kind of testing with a stress testing tool is crucial for finding bottlenecks in a Web application. It's not always easy to pinpoint the bottleneck, as your weakest link (in this case the SQL Server on a relatively low-power machine) can drag down the entire application.

Finally, I decided to test a failure scenario by pulling the plug on one of my servers. With both cluster machines running, one of them went dead and, after 10 seconds, all requests ended up going to the remaining machine, providing the anticipated redundancy. No requests were lost, although the remaining machine was getting very overloaded and response times increased considerably.

Load Balancing your Web applications

Running an application on more than one machine introduces some challenges into the design and layout of the application. If your Web app is not 100% stateless, you will run into potential problems with resources required on specific machines. You'll want to think about this as you design your Web applications, rather than retrofitting at the last minute.

For example, if you're using a Visual FoxPro backend and you're accessing local FoxPro data in any way, that data must be shared on a central network drive in order for all of the cluster servers to access those files. This includes application ‘system’ files. In Web Connection, this would mean the log file and the session management table, which would have to be shared on a network drive somewhere. It can also involve things like registry and INI file settings that may be used for configuration of applications. When you build these types of configurations, try to do it in such a way that the configuration information can be loaded and maintained from a central store or can be replicated easily on all machines.

If you're using Active Server Pages, you'll have to remember that ASP's useful Session and Application objects will not work across multiple machines. This means that you either have to run the cluster with Single Affinity to keep clients coming back to the same machine, or you have to come up with a different session management scheme that stores session data in a more central data store, such as a database. I personally prefer the latter, because most eCommerce applications already require databases to track users for traffic and site statistics. Adding Session state to these databases tends to be trivial and doesn't add significant overhead.
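A database-backed session store is what lets any node in the farm pick up any user's request. The sketch below is a minimal illustration, not a production design: Python's built-in `sqlite3` stands in for the central SQL Server, the `SessionStore` class and `web_sessions` table are hypothetical, and the 20-minute timeout mirrors ASP's default Session.Timeout. Two store objects sharing one connection play the role of two Web servers.

```python
import json
import sqlite3
import time
import uuid


class SessionStore:
    """Session state kept in a shared database instead of one server's memory.

    sqlite3 stands in for the central SQL Server here; the table and column
    names are hypothetical. Any node in the farm can read a session written
    by any other node, so no affinity is needed.
    """

    def __init__(self, conn, timeout_s=20 * 60):
        self.conn = conn
        self.timeout_s = timeout_s
        conn.execute("""CREATE TABLE IF NOT EXISTS web_sessions (
                            session_id TEXT PRIMARY KEY,
                            data       TEXT NOT NULL,
                            last_seen  REAL NOT NULL)""")

    def create(self):
        session_id = uuid.uuid4().hex
        self.save(session_id, {})
        return session_id

    def save(self, session_id, data):
        self.conn.execute(
            "INSERT OR REPLACE INTO web_sessions VALUES (?, ?, ?)",
            (session_id, json.dumps(data), time.time()))
        self.conn.commit()

    def load(self, session_id):
        row = self.conn.execute(
            "SELECT data, last_seen FROM web_sessions WHERE session_id = ?",
            (session_id,)).fetchone()
        if row is None or time.time() - row[1] > self.timeout_s:
            return None  # unknown or expired session
        return json.loads(row[0])


conn = sqlite3.connect(":memory:")                   # stand-in for the shared SQL Server
web1, web2 = SessionStore(conn), SessionStore(conn)  # two farm nodes
sid = web1.create()
web1.save(sid, {"cart": ["item-42"]})
print(web2.load(sid))  # {'cart': ['item-42']}
```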

Finally, load balancing can allow you to scale applications with multiple machines relatively easily. To add more load handling capabilities, just add more machines. However, remember that when you build applications this way, your weakest link can bring down the entire load balancing scheme. If your SQL backend is maxed out, no amount of additional machines in the load balancing cluster will improve performance. The SQL backend is your weakest link, and the only way to wring better performance out of it is to upgrade hardware or split databases onto separate servers. Other Load Balancing software like Resonate and Local Redirector can also experience bottlenecks in the IP manager machine that routes IP requests. NLB is much better in this respect than many other Load Balancing solutions that require a central manager node, since NLB uses every machine in the cluster as a manager.

Cocked and loaded? Not yet…

Windows 2000's Network Load Balancing Service is a welcome addition to the scalability services provided by the operating system. It provides basic load balancing features that are easy to set up and run, once you fight through the bad documentation. I hope this article helps to make this process easier. Once configured, the service works transparently behind the scenes without any administrative fuss, and performance and stability are excellent. I did not have any problems in several WAST tests that each ran over a 24-hour period.

But several minor bugs in the administrative interface, the antiquated command line administration interface, the fact that changes don't take effect immediately and can cause the cluster to stop responding, and the bad documentation make this product feel like a 1.0 release in need of an update. Ironically, I couldn't even find much additional information on the Microsoft Web site or in the Knowledge Base. It seems that Microsoft is not really advertising or pushing this technology at this time. But, aside from the quirky interface, the service was reliable, and none of the issues described are showstoppers that should keep you from using this powerful tool.