SRT Solutions worked with a health care provider in designing and developing software to determine the efficacy of computerized interventions for underage drinkers. Teenage patients use the software deployed on a touch-screen tablet PC. They participate in surveys and navigate a faux social media site with pages designed to lead them toward healthy decisions. Developers at SRT Solutions produced the software using several modern technologies and tools.

We broke the project into two parts: a generic, extensible survey engine and the deliverable application that used it. We built the survey engine around YAML question definitions. YAML, a superset of JSON, is a human-readable data serialization language. We chose YAML because we knew our customer would be writing the surveys, and the customer found YAML easier to read and edit than alternatives such as XML or JSON. We used IronRuby to implement customizable skip and branch rules in the survey specification. We built the UI layer with WPF, Prism, and the dynamic features of C# 4.0, following the Model-View-ViewModel (MVVM) pattern. The back end used a SQL Server database, with a local cache database for offline use.

We learned a lot both from a technical perspective and a customer relations perspective throughout the project. Although not all lessons learned were positive, we expect to grow from our mistakes. What follows is a synopsis of what went well and what did not.

Things That Went Well

Tight Integration with the Customer

The customer provided us with a general idea of their needs and wishes, but it was our job to translate that into a metaphor in the application. We did not start the project with a set of fixed requirements. Instead, we approached the customer up front with prototypes of our ideas, based on some loosely defined goals. We insisted on speaking with the customer daily to reduce the risk of developing the wrong solution.

The customer and our team participated in a telephone-based “stand-up meeting.” The meetings used the standard “stand-up” agenda: What did we do yesterday? What will we do today? What is blocking us? The meetings rarely lasted more than five minutes, but they kept us connected with our progress and their needs.

In addition to the stand-up meetings, we often met in person to show new ideas, ask questions, or brainstorm content. Two members of the customer’s team often worked in our office, even if there was nothing specific on which we needed to collaborate.

We also held “end of iteration” meetings every two weeks, sometimes called “show and tell.” These meetings included nearly everyone from both teams, who used them to communicate progress and resolve misunderstandings.

Our working relationship was collaborative: both the customer and the development team felt comfortable challenging each other’s positions. As a result, we caught and fixed errors quickly, which reduced the time spent developing misguided features.

The customer created and changed requirements through the project’s last week. Because we stayed in constant communication, we learned of every decision to add, remove, or modify parts of the system in near-real time, and we adapted as the customer’s needs changed.

Positive communication did not erase all problems. For example, the customer had difficulty imagining how mock-ups and prototypes would behave in the final product. They appreciated working models, and our tight feedback loop allowed us to build many partially completed subsystems without wasting time programming off-target features. When we were off target, the customer let us know and we adapted quickly.

Our tight integration with the customer was critical to the project’s success. The customer originally approached us with a loosely defined vision, yet the final product solidly interpreted the customer’s needs. Constant communication with the customer created a successful product; less frequent contact would certainly have produced an inferior one.

Extensible Engine

The application included a set of surveys and a set of interactive activity screens. The surveys asked questions and stored the results. The activity screens mimicked concepts familiar from popular social networking websites. The customer produced all survey and activity content, while we built the activity interactions. We realized early in the project that the activity screens could easily be modeled as survey questions: the activities had customized views and behaviors, but internally they were nothing more than multiple choice questions.

We initially searched for an existing survey engine that met our criteria but could not find anything usable, so we built a new survey engine designed for extensibility. We designed its view engine using concepts from Prism, which let us easily swap in different view components based on question name or question type. The engine also used Unity, an Inversion of Control (IoC) container, to swap in customized ViewModels that changed a question’s behavior.
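The name-then-type lookup described above can be sketched in Ruby, the language the engine embedded for its rules. This is not the team’s Prism/Unity code, which was C#; `ComponentRegistry`, `register`, `resolve`, and the `FridgePoem` question name are hypothetical, and a plain hash of factories stands in for what an IoC container registration by key would provide.

```ruby
# Hypothetical sketch: resolve a view/ViewModel for a question, preferring a
# registration keyed by question name, then by question type, then a default.
class ComponentRegistry
  def initialize(default)
    @default = default
    @by_name = {}
    @by_type = {}
  end

  # Register a factory (any object responding to #call) for a name or a type.
  def register(factory, name: nil, type: nil)
    raise ArgumentError, "need name: or type:" if name.nil? && type.nil?
    @by_name[name] = factory if name
    @by_type[type] = factory if type
    self
  end

  # Build a component for the given question hash.
  def resolve(question)
    factory = @by_name[question["Name"]] || @by_type[question["Type"]] || @default
    factory.call(question)
  end
end

Component = Struct.new(:kind, :question_name)

registry = ComponentRegistry.new(->(q) { Component.new(:plain_view, q["Name"]) })
registry.register(->(q) { Component.new(:checkbox_view, q["Name"]) }, type: "Checkbox")
registry.register(->(q) { Component.new(:magnet_poetry_view, q["Name"]) }, name: "FridgePoem")

registry.resolve({ "Name" => "WhatLanguage", "Type" => "Checkbox" }).kind # => :checkbox_view
registry.resolve({ "Name" => "FridgePoem", "Type" => "Checkbox" }).kind   # => :magnet_poetry_view
registry.resolve({ "Name" => "Age", "Type" => "Number" }).kind            # => :plain_view
```

Checking the question name before the type lets one specific question (such as an activity) override the view used by every other question of its type.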

The application developer provided styles and behaviors matching the survey script. For example, a survey question asking which programming languages you use might be defined with the following YAML specification:

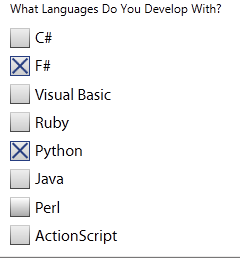

    Type: Checkbox
    Name: WhatLanguage
    Title: What Languages Do You Develop With?
    Options:
      - { Value: 1, Title: C# }
      - { Value: 2, Title: F# }
      - { Value: 3, Title: Visual Basic }
      - { Value: 4, Title: Ruby }
      - { Value: 5, Title: Python }
      - { Value: 6, Title: Java }
      - { Value: 7, Title: Perl }
      - { Value: 8, Title: ActionScript }
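To show how such a specification maps onto plain data, the sketch below loads the question with Ruby’s standard YAML library (Psych). The `load_question` helper and the set of required keys it checks are assumptions for illustration, not the engine’s actual loader. Note that `C#` and `F#` parse as plain strings because `#` starts a YAML comment only when it is preceded by whitespace.

```ruby
require "yaml"

# The question specification from above, as a YAML document
# (single-quoted heredoc, so Ruby performs no interpolation).
SPEC = <<~'YAML'
  Type: Checkbox
  Name: WhatLanguage
  Title: What Languages Do You Develop With?
  Options:
    - { Value: 1, Title: C# }
    - { Value: 2, Title: F# }
    - { Value: 3, Title: Visual Basic }
    - { Value: 4, Title: Ruby }
    - { Value: 5, Title: Python }
    - { Value: 6, Title: Java }
    - { Value: 7, Title: Perl }
    - { Value: 8, Title: ActionScript }
YAML

# Hypothetical helper: parse a question and check the keys a renderer needs.
def load_question(text)
  question = YAML.safe_load(text)
  missing = %w[Type Name Title Options] - question.keys
  raise ArgumentError, "missing keys: #{missing.join(', ')}" unless missing.empty?
  question
end

question = load_question(SPEC)
question["Options"].map { |o| o["Title"] }.first(2) # => ["C#", "F#"]
```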

The survey engine rendered the question with a very simple style. See Figure 1 for details.

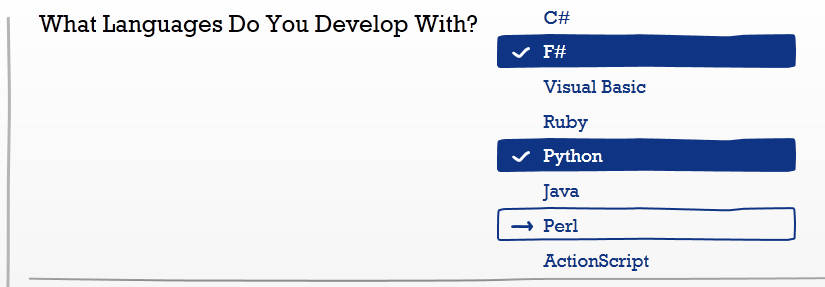

The default styling was extremely boring, so the designer defined replacement views that the engine swapped in at runtime. Figure 2 shows the same question rendered with a more attractive style.

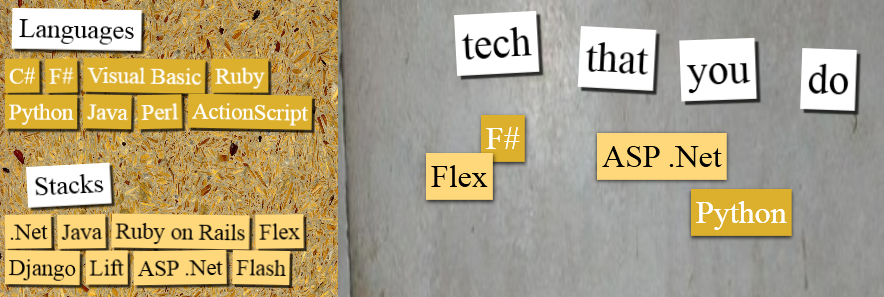

We used the same engine for the interactive activities. To build an activity, the developer registered a custom behavior and a custom view, which transformed the question into something that no longer resembled a multiple choice question. For example, we created an activity mimicking refrigerator magnet poetry by swapping out the default behavior (ViewModel) and the default view. On the multi-touch tablet, users could use gestures to stick answers to the refrigerator, move them around, rotate them, and resize them. See Figure 3 for details.
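The idea of keeping the answer model intact while replacing the behavior can be illustrated with a small Ruby sketch. `ChoiceViewModel` and `MagnetPoetryViewModel` are hypothetical stand-ins for the engine’s default and custom ViewModels (which were C# classes in WPF). The custom class adds placement state (position, rotation, scale) for the gestures, but it still reports an ordinary multiple choice answer, so persistence in the base class keeps working unchanged.

```ruby
# Hypothetical default behavior: a multiple-choice ViewModel that tracks
# which option values are selected and produces the answer to persist.
class ChoiceViewModel
  attr_reader :question

  def initialize(question)
    @question = question
    @selected = []
  end

  def select(value)
    @selected << value unless @selected.include?(value)
  end

  def deselect(value)
    @selected.delete(value)
  end

  # The engine persists this regardless of how the answer was chosen.
  def answer
    { question["Name"] => @selected.sort }
  end
end

# Hypothetical custom behavior: sticking a word magnet on the fridge
# selects it; the placement is extra view state the engine never persists.
class MagnetPoetryViewModel < ChoiceViewModel
  Placement = Struct.new(:x, :y, :angle, :scale)

  def initialize(question)
    super
    @placements = {}
  end

  def stick(value, x:, y:, angle: 0.0, scale: 1.0)
    @placements[value] = Placement.new(x, y, angle, scale)
    select(value)
  end

  def remove(value)
    @placements.delete(value)
    deselect(value)
  end

  def placement(value)
    @placements[value]
  end
end

vm = MagnetPoetryViewModel.new({ "Name" => "FridgePoem" })
vm.stick(3, x: 120, y: 40, angle: 15.0)
vm.stick(1, x: 10, y: 200)
vm.answer # => { "FridgePoem" => [1, 3] }
```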

The survey engine was extremely successful in letting us design the application in separate parts. The content was separated from the presentation, which was separated from the behavior. The customer was responsible for the content, which they modified through a script file, while the developer and designer were responsible for making the interaction with that content compelling. The engine handled persistence and base behavior, so the developer was free to focus on the overrides each interaction needed. The extensibility of the survey engine reduced the amount of code we wrote, provided flexibility, and allowed everyone to focus on specific tasks to produce an engaging product.

IronRuby for Branch Logic

The customer required the survey to include branch logic and skip logic. Branch logic conditionally branches the user to a new question or page based on the results of existing answers and logical rules. Skip logic is similar, but allows individual questions to be skipped or excluded from the set of questions based on the results of previously answered questions. The branch and skip rules were specified in the YAML with the questions.

To achieve this, we embedded IronRuby in our survey engine. We avoided costly, bug-prone parsing/evaluation logic in favor of translating rules into Ruby code. For example, a skip rule might look like this:

    Skip: unless ((PreviousAnswer)) >= 2

The value of the “Skip” parameter was passed through a variable substitution routine and the code was then translated to a Ruby function and executed (assuming that the value of PreviousAnswer is 7):

    return true unless 7 >= 2
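The substitute-then-evaluate approach can be sketched in plain Ruby. The `((Name))` token syntax comes from the rules above; the `substitute` and `skip?` helpers, and the convention that the generated code returns `true` when the question should be skipped, are assumptions for illustration. The team ran the generated code through IronRuby from C#; here `eval` runs it directly, which is reasonable only because the rules are written by a trusted survey author, never by end users.

```ruby
# Replace each ((Name)) token with the stored answer's text
# (fetch raises KeyError if the rule names an unanswered question).
def substitute(rule, answers)
  rule.gsub(/\(\((\w+)\)\)/) { answers.fetch(Regexp.last_match(1)).to_s }
end

# Hypothetical translation: prefix the rule with "return true" inside a
# lambda, so the lambda returns true when the question should be skipped.
def skip?(rule, answers)
  source = "lambda { return true #{substitute(rule, answers)}; false }"
  eval(source).call # acceptable only for trusted, author-written rules
end

answers = { "PreviousAnswer" => 7 }
substitute("unless ((PreviousAnswer)) >= 2", answers) # => "unless 7 >= 2"
skip?("unless ((PreviousAnswer)) >= 2", answers)      # => false
skip?("unless ((PreviousAnswer)) >= 2", { "PreviousAnswer" => 1 }) # => true
```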

Branch logic worked in a similar way. The rule was added in the YAML:

    Branch: DESIRED_PAGE if ((PreviousAnswer)) >= 3 && ((OtherAnswer)) == true

The value of the “Branch” parameter was passed through a variable substitution routine and into a Ruby function to read:

    return :DESIRED_PAGE if 7 >= 3 && true == true
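Branch rules can reuse the same substitution step. In the sketch below, each evaluated rule returns a page symbol or `nil`, and the caller falls back to the next page in order when no rule fires. The `next_page` helper, the page names other than `DESIRED_PAGE`, and the fallback behavior are hypothetical, not the engine’s documented semantics.

```ruby
# Replace each ((Name)) token with the stored answer's text.
def substitute(rule, answers)
  rule.gsub(/\(\((\w+)\)\)/) { answers.fetch(Regexp.last_match(1)).to_s }
end

# Hypothetical: evaluate branch rules in order. Each rule becomes
# "return :<rule>", so it yields a page symbol when its condition holds and
# nil otherwise. If no rule fires, continue to the next page in sequence.
def next_page(current, pages, branch_rules, answers)
  branch_rules.each do |rule|
    target = eval("lambda { return :#{substitute(rule, answers)}; nil }").call
    return target if target
  end
  pages[pages.index(current) + 1]
end

pages = %i[INTRO HABITS DESIRED_PAGE WRAP_UP]
rule = "DESIRED_PAGE if ((PreviousAnswer)) >= 3 && ((OtherAnswer)) == true"
answers = { "PreviousAnswer" => 7, "OtherAnswer" => true }

substitute(rule, answers) # => "DESIRED_PAGE if 7 >= 3 && true == true"
next_page(:INTRO, pages, [rule], answers) # => :DESIRED_PAGE
next_page(:INTRO, pages, [rule], { "PreviousAnswer" => 2, "OtherAnswer" => true }) # => :HABITS
```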

This approach let us insert quite complex skip and branch logic into the survey. We did not need to parse the logic or figure out how to execute it because the Ruby engine did all the heavy lifting. We were also able to use built-in capabilities of Ruby, such as methods on the String class, as in this example, where a question is skipped if the previous answer does not include a keyword:

    Skip: unless '((PreviousAnswer))'.include? 'Some Text'
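Because the substituted answer lands inside a single-quoted Ruby literal, free-text answers need escaping: an answer such as `it's Some Text` would otherwise end the literal early and make the generated code fail to parse. The original text does not say how the engine handled this, so the sketch below shows one possible approach, escaping backslashes and single quotes before substitution. `escape_single_quoted`, `substitute_text`, and `skip?` are hypothetical helpers.

```ruby
# Escape the two characters that are special inside a single-quoted
# Ruby literal: backslash and the single quote itself.
def escape_single_quoted(text)
  text.each_char.map { |c| c == "\\" || c == "'" ? "\\#{c}" : c }.join
end

# Replace each ((Name)) token with the escaped answer text.
def substitute_text(rule, answers)
  rule.gsub(/\(\((\w+)\)\)/) do
    escape_single_quoted(answers.fetch(Regexp.last_match(1)).to_s)
  end
end

# Hypothetical: returns true when the question should be skipped.
def skip?(rule, answers)
  eval("lambda { return true #{substitute_text(rule, answers)}; false }").call
end

rule = "unless '((PreviousAnswer))'.include? 'Some Text'"
skip?(rule, { "PreviousAnswer" => "We saw Some Text here" }) # => false
skip?(rule, { "PreviousAnswer" => "nothing relevant" })      # => true
skip?(rule, { "PreviousAnswer" => "it's Some Text" })        # => false
```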

The branching and skipping feature would have been significantly more costly to write if we had not embedded Ruby into our engine. Ruby also added richness to the customizability of our product that would have been very difficult to achieve otherwise.

Developer/Designer Workflow

The visual design of the application was stunning. We worked with a talented designer who added a creative dimension to our application. He was familiar with the Adobe tool suite (which was used for initial mockups) as well as the Microsoft Expression suite, but he was not a developer.

We had two options for the designer-developer workflow. In the first, the designer would mold the application’s look using his authoring tools and pass assets “over the wall” to a developer, who would integrate the design. In the second, the designer would modify the project directly, working from the mock-ups he made in other tools.

We chose the second, more integrated approach. The designer worked in the same room as the rest of the team. He was able to ask questions, discuss ideas and collaborate with all of his stakeholders.

The designer primarily modified our solution using Expression Blend. We maintained a strict MVVM pattern in all our views, so when the designer modified the XAML, he rarely worried about breaking the behavior: all behavior lived in ViewModels and was data-bound to the View. Expression Blend enabled this productive workflow because Blend can open existing Visual Studio (developer) projects.

Our general workflow pattern:

This workflow was smooth, and productivity was high for both the developers and the designer. Because we chose the integrated developer/designer workflow, the developers avoided costly design integration time. Our chosen workflow allowed us to do what we do best: program behavior. It also allowed the designer to do what he does best: create beautiful user interfaces.

Pair Programming

During this project we used pair programming extensively. As we practiced it, one programmer types and communicates ideas while the other reviews the code and offers advice; the two share one workstation and frequently switch roles.

This was our first extensive use of pair programming, and members of the team found it very useful during the project’s initial stages, especially when establishing the survey engine’s infrastructure and rules. We would spend a few minutes collaborating on design ideas, decide how to test the feature, and then write the code. We were very productive, despite the common misconception that pairs work only half as fast. When a task was simple or did not require creative thought, we split up and developed separately. Ultimately, we all shared a strong knowledge of the code and could equally address questions or challenges.

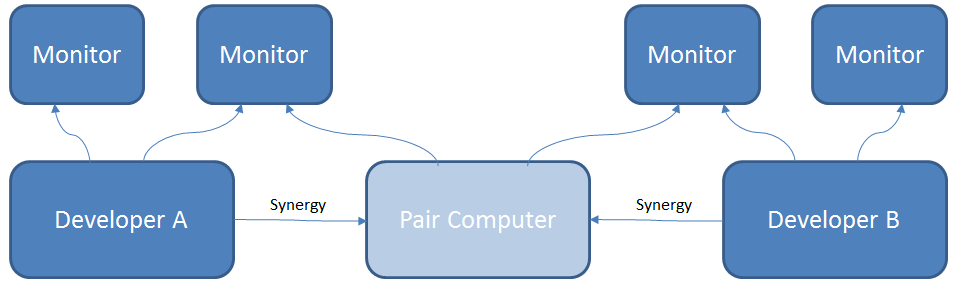

Our specific setup worked well when both developers were in the same room. Each developer had his own computer with two monitors. A third computer was set up as the “pairing computer,” and the monitors were cabled in a configuration that allowed easy switching between “pair mode” and “single mode.” To control the pairing computer, we used Synergy, a free tool that let us share our keyboards and mice with it. See Figure 4 for details.

Things That Did Not Go Well

Customer Lack of Conceptualization

The project used a faux social media site because we and the client recognized that teenagers are comfortable with the social media concept. Determining specific activities that mapped to existing social media ideas, however, was much more difficult. The customer requested engaging activities that could temper the subject matter of making difficult but healthy decisions. The greatest challenge came when some of our client’s representatives were unfamiliar with the relevant social media idioms and concepts. We facilitated brainstorming sessions, but without working examples, our client could seldom accurately conceptualize the proposed ideas. Working prototypes generated productive discussions, but budget and schedule limits kept us from delivering enough prototypes to represent all the proposed ideas.

Maintaining “Ready to Ship”

We tried to keep the application “ready to ship” after each two-week iteration. This protects the customer: they can stop whenever they want and still have something to show for their money. Conversely, if the money or time runs out and the solution is not workable, something gets sacrificed (profit, stability, or the deadline).

We managed the project with a Scrum-based process and two-week iterations, while aiming to keep the application “ready to ship” at all times. Our end of iteration meetings demonstrated our progress, and during these meetings we asked the customer what they wanted to see in the next iteration. The customer almost always chose front-end stories from the many “user stories” on the table. The front-end stories often contained a large amount of creative content, and the customer wanted to understand how it was going to play out.

Unfortunately, as each iteration completed, more and more infrastructure stories were pushed to later iterations. We produced many beautiful user interactions, but we weren’t storing any data on the server! We could not maintain a “ready to ship” solution without implementing the infrastructure stories. The infrastructure, although invisible from the customer’s perspective, turned out to be rather complex. We churned on the back-end tasks more than we had expected, and we were not “ready to ship” by the end of the project as funded. Reaching a workable solution required several extra iterations, and this extra effort exhausted our budget.

The project would have been more profitable if we had insisted on developing the infrastructure early to maintain a “ready to ship” state. We needed to balance the customer’s need to visualize the product early with everyone’s need for a product that worked from front to back. We could have broken down the front-end stories: smaller pieces would have let us give the customer a glimpse of each front-end component while also implementing infrastructure pieces. From there, time and money would ideally have permitted finer-grained stories to hone the front end and infrastructure in later iterations.

QA Testing with the Customer

The contract stated that the client would participate in the project’s quality assurance (QA) testing. While this arrangement saved the client money, it also caused them unnecessary concern. QA involved stressing the branching and skip logic in the scripts, and the customer effectively identified and executed the necessary test cases. This aspect of client participation in QA was very positive. Unfortunately, this customer, like most clients, was unfamiliar with the problems typically found in a product’s early versions.

The client did not forget the crashes, bugs, and slow performance that programmers consider routine in early builds, despite the quality of the final product. For example, an early version of the data collection feature had numerous bugs caused by inconsistencies between LINQ to SQL and LINQ to Objects. We quickly fixed these bugs, but because they had struggled with the early version, the client never completely trusted this feature; they remembered its instability and avoided it. We fixed the problems before shipping a quality product, but we finished knowing the customer would have been happier had they not been involved in early testing. Internal QA testing is preferable: if significant problems are fixed before the customer sees the product, clients will have more confidence in both the developers and the final product.

Difficulties with the Microsoft Sync Framework

We had several data synchronization requirements to implement in this application. We could not rely on a consistent Internet connection, so the tablets had to work in an offline mode. The tablets also needed to synchronize data for a particular participant rather than the entire database. At first glance, Microsoft’s Sync Framework looked like the perfect technology fit. However, we ran into several issues that caused us to abandon the Sync Framework and write our own custom solution.

The first problem we encountered was that the Sync Framework wanted to synchronize entire database tables. There was no easy way to set a filter on the synchronization so that it only pushed and pulled data for one participant. The customer also had security concerns about storing every participant’s data locally on each tablet, and even though our data was not particularly large, synchronizing an entire table took too long. We found a couple of solutions for filtering with the Sync Framework. One option was to abandon the generated code and create our own SyncAgent with the necessary filtering parameters, but we found this to be too much work. Another was to modify the generated SQL to add the filtering. This worked for pulling data down, but we never figured out how to push the data back up to the server.

The second problem was that the Sync Framework was very difficult to understand when something went wrong. We encountered random failures with obscure exceptions that gave us no insight into the actual problem. We ultimately lost confidence in the reliability of the solution, which forced us to create our own custom solution.

The Sync Framework consumed enough of our time that we had difficulty knowing when to cut our losses. Had we started with a custom solution from the beginning, we would have finished it before the point at which we actually abandoned the Sync Framework. It is difficult to know when to change direction, but we will certainly consider cutting our losses earlier in future projects if we find ourselves churning in the same way.
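The per-participant filtering that the Sync Framework made difficult can be sketched independently of any framework. This is not the custom solution the team built: the row shape (`id`, `participant_id`, `value`, `updated_at`), the integer timestamps, the last-writer-wins conflict rule, and the helper names are all assumptions, with in-memory arrays standing in for the server and tablet databases.

```ruby
Row = Struct.new(:id, :participant_id, :value, :updated_at)

# Hypothetical one-way merge restricted to a single participant: copy rows
# the target lacks, and overwrite a target row only if the source copy is
# newer (last writer wins).
def merge_participant!(from, to, participant_id)
  from.select { |r| r.participant_id == participant_id }.each do |row|
    existing = to.find { |r| r.id == row.id }
    if existing.nil?
      to << row.dup
    elsif row.updated_at > existing.updated_at
      existing.value = row.value
      existing.updated_at = row.updated_at
    end
  end
  to
end

# Pull the participant's rows to the tablet, then push its changes back.
def sync_participant!(server, tablet, participant_id)
  merge_participant!(server, tablet, participant_id)
  merge_participant!(tablet, server, participant_id)
end

server = [Row.new(1, "p1", "a", 1), Row.new(2, "p2", "b", 1)]
tablet = [Row.new(3, "p1", "offline answer", 5)]
sync_participant!(server, tablet, "p1")
tablet.map(&:id).sort # => [1, 3]
server.map(&:id).sort # => [1, 2, 3]
```

Because both directions filter on the participant, rows belonging to other participants never reach the tablet, which addresses the security concern as well as the transfer time.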

Integration Tests vs. Unit Tests

The majority of the logic in our system was well specified by unit tests. Our continuous integration server was quick to tell us when anyone broke any of the tests. We wrote unit tests for the following areas of the code base:

- ViewModels which contained the majority of the UI behavior

- The model which handled the management of the question data

- The repository which maintained a cached (local) database in synchronization with a server database

Despite all these tests, we still ran into problems in tested code. The biggest source of trouble was our repository. We implemented our synchronized repository with LINQ to SQL, both on the server and on our local copy of the data. For our unit tests, we mocked out the database with a set of in-memory collections that had the same structure as the database tables, so the unit tests used LINQ to Objects while the live repository used LINQ to SQL. Unfortunately, LINQ to Objects behaves differently from LINQ to SQL, and the differences are subtle.

For example, SQL Server enforces column size limits; in-memory objects do not. Tests that would have failed against SQL Server passed against the in-memory collections. In another case, the LINQ to Objects test implementation returned a slightly different data type than the LINQ to SQL implementation (a List versus an array), which caused very subtle issues when we tried to remove items. Again, the tests passed against LINQ to Objects where the LINQ to SQL analog failed.
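One way to narrow the gap between an in-memory fake and the real database is to make the fake enforce the same constraints. The sketch below is a Ruby analogue of the team’s LINQ to Objects fake, not their code: `FakeTable`, `ColumnTooLong`, and the 50-character limit are hypothetical. It also returns frozen query results, so a test fails if code tries to remove items in place, loosely mirroring the List-versus-array removal problem described above.

```ruby
# Hypothetical in-memory stand-in for a database table. Unlike a plain
# collection, it enforces column length limits the way SQL Server would.
class FakeTable
  ColumnTooLong = Class.new(StandardError)

  def initialize(max_lengths)
    @max_lengths = max_lengths # column name => maximum characters
    @rows = []
  end

  def insert(row)
    @max_lengths.each do |column, max|
      length = row[column].to_s.length
      raise ColumnTooLong, "#{column}: #{length} chars (max #{max})" if length > max
    end
    @rows << row.dup
    self
  end

  # Frozen results make in-place removal raise in tests, so code that
  # mutates query results is caught early.
  def where(&predicate)
    @rows.select(&predicate).freeze
  end
end

answers = FakeTable.new({ text: 50 })
answers.insert({ participant: "p1", text: "short answer" })
answers.where { |r| r[:participant] == "p1" }.size # => 1
```

An integration test against a real database remains the more faithful check; a constrained fake only catches the differences you thought to encode.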

We knew ahead of time that these differences existed, but we did nothing to prepare for them. The differences did not show up when we ran the software ourselves; the problems appeared when the customer started beta-testing. The customer reported a new high-severity bug every day for the first several days of testing.

In retrospect, a suite of integration tests alongside the unit tests would have found many of these problems. We could have written tests that ran against a transient local database (such as SQL Server Express). We could also have written more functional, UI-level smoke tests. Had we used other forms of testing besides unit tests, our product would have had fewer critical, end-of-project bugs.

Scrum in Bug Fix Mode

Scrum, our standard process, didn’t seem optimal during this project’s final, bug-fixing phase. Scrum defines a set of project management roles and processes. It divides a project into small (2-4 week) iterations called sprints. Tasks for each sprint are selected from a prioritized backlog, and commonly only these tasks receive attention during a sprint. Occasionally, a customer requests a mid-sprint change of priorities; while this can be done, time constraints demand returning a lower priority task to the backlog for later consideration.

It is unusual, and unproductive, for a customer to request many new tasks during a sprint. Scrum helps prevent such unfocused task management because straying from a defined plan makes the programming team less efficient. However, rigid adherence to Scrum in the later project phases was less successful. There is never a defined date when feature development stops and bug fixing begins, so as the later stages demanded constant, unplanned bug fixing, Scrum naturally became less effective.

The best solution may be to give each Scrum sprint some flexible time to address high-priority bugs. If each sprint’s task list included an open-ended slot for unplanned work, the project’s early stages would remain focused but flexible. As the task backlog empties and new bugs become more numerous, it might be more productive to switch from Scrum to a bug tracking system. Any remaining features can be added to the bug tracking system’s priority list at that time.
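The “flexible time” idea amounts to reserving part of each sprint’s capacity before filling it from the backlog. A minimal sketch, assuming stories carry point estimates and a priority (a lower number is more important); the `plan_sprint` helper, the 20% default reserve, and the story titles are hypothetical, not figures from this project.

```ruby
Story = Struct.new(:title, :points, :priority)

# Hypothetical planner: hold back a percentage of capacity for unplanned
# high-priority bugs, then take stories in priority order while they fit.
def plan_sprint(backlog, capacity, reserve_percent: 20)
  budget = capacity * (100 - reserve_percent) / 100 # integer points
  planned = []
  backlog.sort_by(&:priority).each do |story|
    next if story.points > budget
    planned << story
    budget -= story.points
  end
  { planned: planned, unplanned_capacity: capacity - planned.sum(&:points) }
end

backlog = [
  Story.new("Server-side storage", 8, 1),
  Story.new("Profile page polish", 5, 2),
  Story.new("Photo wall activity", 13, 3),
  Story.new("Settings screen", 3, 4)
]
plan = plan_sprint(backlog, 30)
plan[:planned].map(&:title) # => ["Server-side storage", "Profile page polish", "Settings screen"]
plan[:unplanned_capacity]   # => 14
```

Raising `reserve_percent` late in a project models the shift toward bug fixing that this section describes, without abandoning the sprint structure entirely.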

Summary

By the time we shipped the application, the software was of high quality. Our customer remains extremely happy with the product of our collaboration. SRT Solutions gained valuable experience from both a technical and a customer perspective. Some extremely creative ideas were put to pixels with this project, and we are proud to have been a part of it. By reflecting on this post-mortem, we hope to continue doing the things we do well and improve on the things we do not.